Optimizing Parallel UI Testing with Humans and Machines

Releasing software is often a sequence of repetitive activities, including critical quality gates that assure the right version, configuration, and scope are deployable and releasable. In those critical moments, we want our release quality and risks to be predictable against expected outcomes and behaviors, and we want to mitigate any potential risks associated with new changes. For this, we need to pick a parallel testing strategy and tactics that are tightly integrated with our processes to enable informed decision-making based on feedback loops. Testing reduces the guesswork and lets us act on the data that our quality processes generate. In this post, we are not focusing on the quality of signals, their context, or how to interpret them to make informed decisions. Instead, we are focusing on the testing configuration strategy, the process, and how to interpret process characteristics to build an optimal parallelization strategy and keep our development teams modern, agile, and more capable.

The Importance of an Optimal UI Testing Strategy

As part of our core development flow, we have multiple quality gates (not just one at the end) to ensure risks are mitigated, and each gate potentially has a different configuration depending on the complexity and scope of the software update. Collectively, these gates define how well an engineering team can optimize across speed, quality, and cost. How quickly and how often can we release quality increments? Will this take us a week, a day, hours, or minutes? Is our software modular? Is our testing modular? Are we time-efficient and optimal? Are we scalable?

Sooner or later, each engineering team will face shortcomings in testing strategy and tactics based on agreed quality gate criteria and requirements, which also tend to change and evolve over days and weeks. Let’s assume that our software is used by 1 million global users daily. Our user base characteristics will dictate various testing requirements to ensure safe releases. Our requirements may include several device/OS combinations, browsers, locations, languages, internet speed variations, account subscription types (if applicable), and more, depending on the industry you’re in. This complexity is why we need optimal testing strategies and tactics to accommodate such a wide set of needs while optimizing our underlying process to carry this out in a repeatable and frequent manner.

It is imperative to have a process vision and KPI goals to measure our development flow time and determine whether we are improving it (or whether it is getting slower). Applying all the testing requirements to the UI testing gate alone can add up to a significant total process time. Picking the right strategy can hugely impact performance and define how we operate as an organization and team.

Let us define a sample strategy – with every new feature to our core and critical business flow, we need to:

- Cover 80% of our users' device-OS combinations: this is good enough coverage to build confidence that anything we find here most likely applies to the remaining 20%, too. We will have a blind spot, but historical data shows this is rare.

- Cover 100% of paid subscription types: we cannot afford to lose paid subscribers.

- Cover all checkout flow payment combinations: we cannot afford to impact top-line revenue.

- Cover at least 5 regions: we want to rule out location-based issues.

With this strategy, we need to test several combinations and loop test scenarios based on subscriptions or payment types. A single person testing all possible scenarios would need a lot of time per device, and all of this would be repeated per device-os combination + region. A common full scope could be presented like this:

Having 5 devices and 5 regions, for example, means that the full scope is tested 25 times in total. As this is done by 1 person, it is all sequential process time:

This process is too expensive to execute often: it is time-consuming, it heavily affects process turnaround speed (it literally blocks deployment and release decisions), and it puts stress on the quality pyramid, because scope and cost grow while we do not want to sacrifice quality. Assume each loop takes 1h; then this is a whopping 25h of process time. Imagine you are ready to release and need to wait 25 business hours (~3 business days if done by one person). Our default behavior would be to run full-scope testing only monthly or quarterly, and even less often if more combinations had to be added. We may start to trade off test scopes and become more risk-averse.
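The arithmetic above can be sketched in a few lines. This is only an illustration: the device and region names are placeholders, and the 1h loop and 8h business day are assumptions taken from or added to the example, not measured values.

```python
from itertools import product

# Placeholder names; the example only states 5 devices and 5 regions.
devices = [f"device-os-{i}" for i in range(1, 6)]
regions = [f"region-{i}" for i in range(1, 6)]

HOURS_PER_LOOP = 1.0   # assumed duration of one full-scope pass
WORKDAY_HOURS = 8.0    # assumed length of a business day


def execution_units(devices, regions):
    """Every device-OS x region pair is one full-scope pass."""
    return list(product(devices, regions))


def sequential_hours(units, hours_per_loop=HOURS_PER_LOOP):
    """Total process time when one person runs every unit back to back."""
    return len(units) * hours_per_loop


units = execution_units(devices, regions)
total = sequential_hours(units)
print(len(units), "units,", total, "hours,",
      round(total / WORKDAY_HOURS, 1), "business days")
```

Adding a sixth device or region grows the unit count multiplicatively, which is why the sequential approach becomes unaffordable so quickly.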

Predictable process time is as important as predictable quality itself, because it enables us to make informed decisions. Imagine a critical hotfix is ready to go out and we must decide which scopes or configurations to test, but we don’t know how long testing takes. Time is our enemy in this situation, and we need to make fast decisions. Do we test all scenarios or skip some? How long will each option take? By when will we know if this hotfix is safe? This is even more important when shipping binaries that users have to install, because it is hard to revert the decision. You do not have the luxury of constant trial and error, as you do with a web application. Submitting hotfixes to an app store is not convenient, and it takes time to publish apps. Asking users to uninstall or download a new binary is not pleasant if auto-update fails or is not possible. An optimal parallel test strategy can help alleviate some of the emotions in those moments and show the maturity of the team and of the organization.

What About Humans and Machines in the Mix?

Our title referenced humans and machines working together. However, I haven’t added this into the mix until now, because there is a common assumption that machines are simply faster (and, for various reasons, they often are). Yet unless your team can execute tests in a modular and easily configurable way, incorporating machines won’t change the speed: you’d still be blocked by sequential processes and most likely limited by the performance of your own application and infrastructure.

The best way to design the strategy and its logic is to make it apply to both humans and machines; the actor should not matter much. We want debuggability and the option to fall back to humans, with machines doing most of the heavy lifting for repetitive tasks. For example, we could leverage deep linking. Deep links guide humans and machines to the right spot as an entry point that is limited to a specific scenario. This is a common tactic for modularizing testing.

For Android, for example, https://developer.android.com/training/app-links/deep-linking offers guidelines for developers on how to enable deep linking. Both humans and machines can then execute modular test scenarios, and we can again divide and conquer our test coverage at great turnaround speeds and use the right actors when needed.
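As a rough sketch of this idea, each modular scenario can be described by one deep link that either actor uses as its entry point. The host, paths, parameters, and scenario names below are hypothetical; a real app defines its own link structure.

```python
from urllib.parse import urlencode

BASE_URL = "https://example.com"  # hypothetical app-link host

# Hypothetical scenarios: each one maps to a single entry point in the app.
SCENARIOS = {
    "checkout-card": ("checkout", {"payment": "card"}),
    "checkout-wallet": ("checkout", {"payment": "wallet"}),
    "upgrade-plan": ("subscription/upgrade", {"plan": "premium"}),
}


def deep_link(path, params):
    """Build the entry-point URL for one modular test scenario."""
    query = urlencode(sorted(params.items()))
    return f"{BASE_URL}/{path}?{query}" if query else f"{BASE_URL}/{path}"


def work_items(scenarios):
    """Same links for either actor: a tester taps them, a script opens them."""
    return {name: deep_link(path, params)
            for name, (path, params) in scenarios.items()}


links = work_items(SCENARIOS)
for name, url in links.items():
    print(f"{name}: {url}")
```

Because the work item is just a name and a URL, the same list can be handed to a human tester as a checklist or fed to an automation script without changing the scenario definition.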

How to Optimize and Shift Towards Parallelization?

Let us put this into the context of an engineering organization and its capabilities.

Example: Strategies to Optimize Manual Testing With a Team of 5

When I worked as an Engineering Manager for a small team of 5, one of our core rituals was to follow our agreed testing plan, configuration, and coverage requirements based on a given scope change classification. The quality gate was something we couldn’t afford to miss, but it was quite an expensive process to execute. At first, we tested manually, all hands on deck. This enabled us to do concurrent testing as a team. Our strategy? Divide and conquer combinations and scopes: we did not repeat scopes across devices. Our data showed that when we found an issue on one device, we also encountered it on others. This was good enough for us, as we did not have highly critical payment flows in the mix.

The goal was to eliminate process overhead and focus on predictability, relying on the likelihood that scope and device are only loosely coupled. Our data showed no correlation between the two, so we were confident in using a highly split and modular testing strategy to maximize device coverage without sacrificing process speed. As we were a team of 5, the only ways to speed up our manual testing process further would have been to cut scope or to start automating tests. Both options are viable, as not all scopes are equal or meant to be kept forever, and automation is the expected advancement.

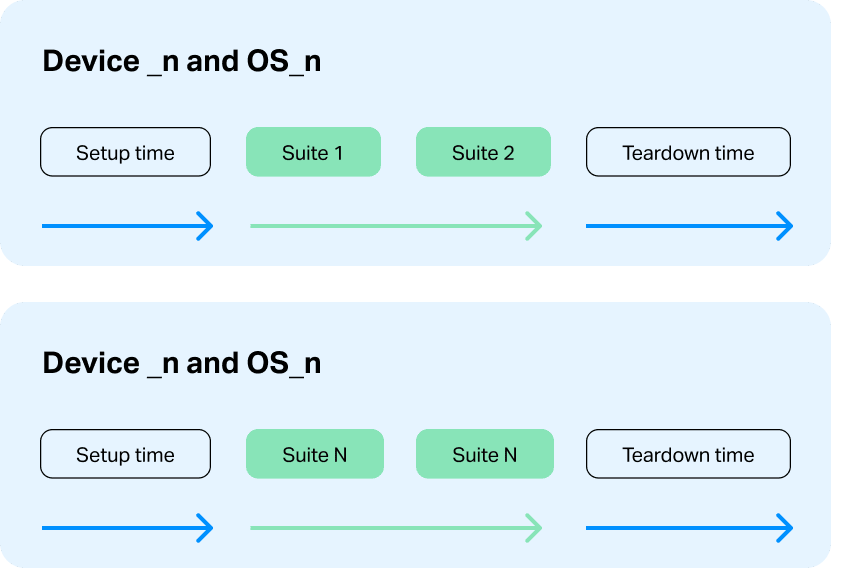

At first, we built a strategy in which device-OS-region is the core execution unit, and each unit can be executed in parallel. Each team member was assigned one unit in each testing cycle. For fresh eyes, we also rotated the units among team members. This gave us simple parallel process units that we could execute repeatedly.
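A minimal sketch of such a rotation, assuming a simple round-robin shift by one position per testing cycle. The member and unit names are placeholders, and the team's actual rotation rule may have differed.

```python
def assign_units(members, units, cycle):
    """Map each execution unit to a team member, shifting by one per cycle."""
    shift = cycle % len(members)
    rotated = members[shift:] + members[:shift]
    return {unit: rotated[i % len(rotated)] for i, unit in enumerate(units)}


# Placeholder team and device-OS-region units.
members = ["Ana", "Ben", "Chloe", "Dev", "Eli"]
units = [f"unit-{i}" for i in range(1, 6)]

for cycle in range(3):
    print(cycle, assign_units(members, units, cycle))
```

With as many units as members, every unit is covered in each cycle, and each member sees every unit once before the rotation repeats.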

In this strategy, we still run the full scope per device-OS-region combination, so we cannot optimize further on this front. Our options are to divide the scope further, change scope variations and execution frequency, automate, or retire some tests. There are many viable options to explore. As the first step, we focused on process configuration for speed optimization based on testing units and executors.

Before

After

We have now reached the first optimal testing strategy that fits a team of 5: each member executes a single device-OS-region unit with a divided scope. However, we can only scale horizontally, and we gain nothing further from parallelization unless test packages can be combined. In this optimization step, we try to improve parallel speed by combining tests so that the packages stay within ±10% of each other in total process time, which keeps the process predictable.
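One way to combine tests into evenly sized packages is a greedy longest-first heuristic: sort the tests by duration and always hand the next one to the lightest package. This is a sketch of one possible technique, not necessarily how the team did it, and the test names and durations are invented for illustration.

```python
import heapq


def balance_packages(test_minutes, workers):
    """Greedy longest-first packing into `workers` packages."""
    heap = [(0, i) for i in range(workers)]  # (current load, package index)
    heapq.heapify(heap)
    packages = [[] for _ in range(workers)]
    ordered = sorted(test_minutes.items(), key=lambda kv: kv[1], reverse=True)
    for name, minutes in ordered:
        load, i = heapq.heappop(heap)        # lightest package so far
        packages[i].append(name)
        heapq.heappush(heap, (load + minutes, i))
    loads = [sum(test_minutes[n] for n in pkg) for pkg in packages]
    return packages, loads


def within_tolerance(loads, tolerance=0.10):
    """True if every package is within +/- tolerance of the mean load."""
    mean = sum(loads) / len(loads)
    return all(abs(load - mean) <= tolerance * mean for load in loads)


# Hypothetical test durations in minutes.
test_minutes = {
    "login": 10, "search": 15, "profile": 12, "checkout": 30, "settings": 4,
    "onboarding": 20, "notifications": 9, "upgrade": 25, "downgrade": 18,
    "export": 13,
}

packages, loads = balance_packages(test_minutes, workers=5)
print(loads, within_tolerance(loads))
```

The heuristic does not guarantee the tolerance is met for every input, so the `within_tolerance` check is the part worth keeping in a real process: it flags when a package has drifted and the split needs revisiting.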

The above samples could be optimized even further if our test configuration and modularity allow us to achieve predictable quality gate speed. However, in this example, we are blocked by team size. Test automation or extending the team could help us achieve this higher optimization level.

The next challenges the team will be exposed to now that we have an optimal strategy in place are:

- Test configuration conflicts

- Test data and session tampering

- Process regression – each new feature adds tests to our test package. As a result, the testing scope of each parallel unit keeps growing, which increases process time.

- Testing scopes need hygiene – testing efficacy is important. We cannot keep adding test cases, new parallels, or new sequential steps. We need a strategy and decision framework to change testing frequency, package rotations, or retire test cases with low impact and value to improve execution time and reduce overhead parallels and maintenance costs.

- Complexity – parallels increase combinations and complexities. Hence, optimal scopes are important to find the right level of parallelism against a tolerable process time.

Augment the Team

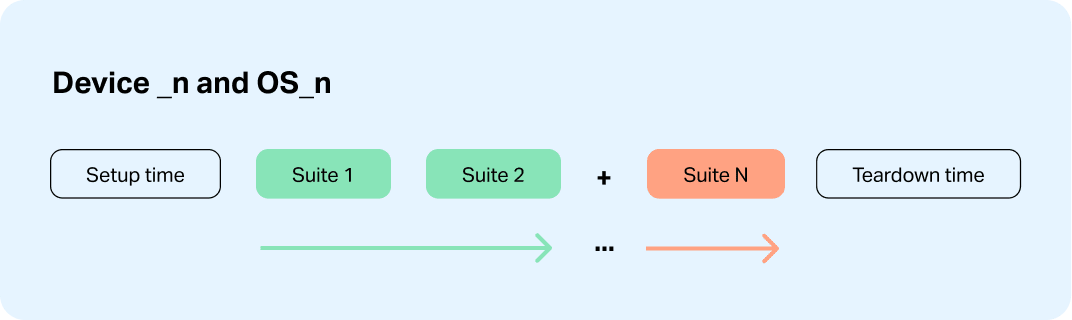

Thinking strategically about the testing process not only helps the team to test by themselves but also enables effective and efficient integration of partners like Testlio. This approach allows you to extend the team with a highly scalable human testing grid or automate with Testlio’s QEs and execute within our infrastructure or integrate your existing solution. It increases debugging efficiency by using human testing efficiently. For example, with Testlio, the parallelization configuration is highly scalable, a benefit that cannot be achieved internally with a team of 5. Integrating Testlio’s manual testers as the parallelization strategy offers great cost efficiency and economics. We can easily achieve 100 or 200 parallel executions for a strategy like this:

The challenge will be determining whether your staging or QA environment can support this configuration, both for humans and machines.
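To reason about that constraint, a back-of-the-envelope model can help. The sketch below assumes units run in waves, that every unit takes the same time, and that the number of units running at once is capped both by the available executors and by what the environment can handle; all numbers are illustrative.

```python
import math


def wall_clock_hours(units, executors, env_capacity, hours_per_unit=1.0):
    """Estimate elapsed time when units run in equally long waves."""
    slots = min(executors, env_capacity)  # effective parallelism
    if slots < 1:
        raise ValueError("need at least one executor and one environment slot")
    waves = math.ceil(units / slots)
    return waves * hours_per_unit


# 200 units with 200 executors, on environments of different capacity.
for capacity in (200, 50, 10):
    print(capacity, wall_clock_hours(200, 200, capacity))
```

In this model, adding executors beyond the environment's capacity does not shorten the process, which is why the environment question deserves an answer before scaling the testing grid.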

Over-Optimization Risk

An important risk here is over-optimization, because every parallel unit incurs its full setup and teardown. This overhead can easily outweigh the testing time itself, which indicates process waste introduced by setup and teardown.

We could potentially use a value-added process calculation here:

$$\text{Process Efficiency (\%)} = \frac{\text{Value-added time (testing)}}{\text{Total process time}} \times 100$$

Consider 25% the minimum target; the sweet spot is 50% or greater. Here, we are balancing process efficiency against process time. In general, apply a strategy based on your organization’s requirements and vision.
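A small worked example shows how splitting further erodes efficiency. It assumes that total process time per parallel unit is testing plus setup plus teardown, and that a 60-minute scope is split evenly across units that each pay 10 minutes of setup and 5 minutes of teardown; these numbers are illustrative only.

```python
def process_efficiency(testing_minutes, setup_minutes, teardown_minutes):
    """Value-added (testing) time as a percentage of total process time."""
    total = testing_minutes + setup_minutes + teardown_minutes
    if total <= 0:
        raise ValueError("total process time must be positive")
    return 100.0 * testing_minutes / total


SCOPE_MINUTES = 60   # assumed total testing scope
SETUP_MINUTES = 10   # assumed setup cost per parallel unit
TEARDOWN_MINUTES = 5 # assumed teardown cost per parallel unit

for parallels in (1, 4, 12, 20):
    testing = SCOPE_MINUTES / parallels
    efficiency = process_efficiency(testing, SETUP_MINUTES, TEARDOWN_MINUTES)
    elapsed = testing + SETUP_MINUTES + TEARDOWN_MINUTES
    print(f"{parallels:>2} parallels: {elapsed:.0f} min elapsed, "
          f"{efficiency:.1f}% efficient")
```

Under these assumptions, 4 parallels sit at the 50% sweet spot, 12 parallels land on the 25% minimum, and anything beyond that spends most of its time on setup and teardown while saving only a few minutes of elapsed time.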

Optimizing Parallel Testing for Better Digital Experiences

In the pursuit of releasing software that meets the expectations of both businesses and their users, the challenge of optimizing parallel UI testing for human and machine testers is paramount. Leaders must navigate the intricate landscape of testing strategies, focusing on the need for a well-defined, modular approach that can accommodate the diverse requirements of software applications today. By emphasizing the importance of device-OS-region parallelism, subscription type coverage, payment flow testing, and regional considerations, this approach offers a flexible yet structured testing framework.

However, as we embrace these strategies, the caution against over-optimization is a critical reminder. The goal is to achieve a balance where the process efficiency does not overshadow the core objective of testing, which is identifying and mitigating potential user experience issues and business risks associated with them. This is where balancing the right mix of humans and machines is crucial for maintaining a sustainable and effective testing regimen that supports continuous improvement and adapts to new challenges. As we navigate the complexities of software development and testing, our focus must remain on delivering products that not only meet but exceed user expectations, thereby affirming the value of thorough and thoughtful testing processes in the digital age.