AI in Software Testing: Actionable Advice for 2025

As software systems grow more complex, effective testing becomes harder. Testers must now handle large datasets, complex workflows, and ever-shorter release cycles.

Artificial intelligence (AI) has emerged as a powerful way to address these challenges. Consider UI automation: most automated tests begin with a login step, so a slight change to the login user interface (UI) can break hundreds of tests at once.

In traditional test automation, such a change can delay releases and leave the automation suite unusable until someone repairs it. With AI, teams can instead write self-healing tests.

An AI-based UI automation framework detects changes in the application’s DOM and updates the affected UI selectors accordingly. This saves the automation team considerable time, because no one has to update selectors manually across the entire suite.

AI has numerous applications in software testing, such as creating and executing test cases, generating edge cases, analyzing results and defects, and maintaining existing test suites. It frees testers from repetitive, laborious tasks so they can focus on higher-value work such as usability evaluation, exploratory testing, and risk analysis.

In this article, we discuss the emergence of AI in software testing, its use cases, and how to incorporate it into existing testing processes and frameworks.

TL;DR

- As software systems become more complex, AI tools help teams deal with the resulting testing challenges.

- AI-powered testing saves considerable time by identifying issues early.

- The automation of mundane tasks allows testers to focus on strategic activities such as exploratory testing and risk evaluation.

Let’s dive right into it!

Table of Contents

- The State of Software Testing Today

- The Emergence of AI & Its Impact on Software Testing

- AI-Human Collaboration: How Does it Look?

- Responsible AI: A Mechanism for Ensuring Software Quality

- Real World Use Cases

- Embracing AI as Part of Software Testing

- Actionable Advice for QA Leaders and Organizations

The State of Software Testing Today

Before discussing the current state of software testing, it helps to understand the pre-AI era. Without AI, testing was a lengthy and time-consuming process. A regression cycle could take weeks because every test case had to be executed manually. Test documentation and the maintenance of automation test suites were entirely manual as well.

Advances in AI have since made software development much faster, and traditional testing processes struggle to keep up with that pace. The result can be a sharp drop in testing efficiency.

For example, AI technologies can help a team move from monthly releases to weekly releases. A regression cycle sized for a month cannot be completed in a single week using the same manual process. This is where AI comes into play for software testing.

Multi-agent systems are becoming increasingly common in the software industry. In a multi-agent system, multiple autonomous AI agents work together to complete complex tasks, and these systems can take on a variety of testing tasks.

For example, a team might set up one agent to maintain Jira tickets, another to write test case documentation, and a third to maintain the automation test suite.

This example shows how using AI effectively can substantially increase productivity. We explore these applications in more depth in the sections that follow.

The Emergence of AI & Its Impact on Software Testing

AI is reshaping every phase of the software development lifecycle (SDLC). During requirements gathering, product teams use AI to enhance their research and analysis.

During the development phase, developers use coding assistants such as GitHub Copilot to write and review code. Likewise, DevOps engineers use AI to automate release processes such as deployments and monitoring.

It is therefore only natural to integrate AI into software testing as well. AI helps with test case generation, because it can analyze user stories and requirements to produce comprehensive test cases. It is also useful for exploratory testing and for identifying niche edge cases that a human tester might overlook.

AI can also perform detailed pixel-by-pixel comparisons between web pages and design mockups to identify UI design issues. Moreover, it plays a key role in redefining software test automation, which we discuss below.

AI in test management

As test suites grow and release cycles speed up, it gets harder to decide what to test, when, and how often. AI can help by analyzing past runs, defect patterns, and recent code changes to highlight high-risk areas and prioritize the tests that matter most.

It also supports test case design and refactoring. AI can generate new tests from user stories, identify duplicates, and recommend which ones to update or remove based on how often they catch issues or provide meaningful coverage.
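As a rough illustration of duplicate detection, the sketch below compares plain-text test case descriptions using token-based Jaccard similarity. It is a deliberately simple stand-in for the ML models commercial tools use; the test IDs, descriptions, and the 0.6 threshold are hypothetical.

```python
import re
from itertools import combinations

def tokens(text: str) -> set[str]:
    """Lowercase word tokens of a test case description."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))

def jaccard(a: set[str], b: set[str]) -> float:
    """Fraction of tokens two descriptions share (0.0 to 1.0)."""
    union = a | b
    return len(a & b) / len(union) if union else 1.0

def find_duplicates(test_cases: dict[str, str], threshold: float = 0.6) -> list[tuple[str, str, float]]:
    """Return (id_a, id_b, score) for every pair of test cases that look like near-duplicates."""
    pairs = []
    for (id_a, text_a), (id_b, text_b) in combinations(test_cases.items(), 2):
        score = jaccard(tokens(text_a), tokens(text_b))
        if score >= threshold:
            pairs.append((id_a, id_b, round(score, 2)))
    # Most similar pairs first, so reviewers see the strongest candidates at the top
    return sorted(pairs, key=lambda pair: pair[2], reverse=True)

# Hypothetical test case titles
cases = {
    "TC-1": "Verify user can log in with valid credentials",
    "TC-2": "Verify a user can log in with valid credentials",
    "TC-3": "Verify checkout fails with an expired card",
}
print(find_duplicates(cases))  # [('TC-1', 'TC-2', 0.89)]
```

Flagged pairs are candidates for a human to merge or remove, not automatic deletions.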

AI in software test automation

AI improves software test automation by making it more adaptive and resilient. It does this by analyzing large volumes of test data to identify patterns, predict failures, and generate smart test scripts that adapt to changes in the application.

A major limitation of traditional test automation is that scripts are written and maintained statically. If a UI selector changes, every reference to it must be updated manually throughout the suite.

This is the most prominent blind spot that AI can help fix. AI makes automation code more responsive: it detects changes and adjusts automatically, through mechanisms such as:

- Self-healing selectors: AI detects when a selector changes in the UI and updates it across the entire suite.

- Coding assistants: AI coding assistants help UI automation engineers write maintainable and high-quality code.

- Predictive analysis: AI systems can analyze previous test reports to identify patterns and trends, and predict where future bugs are likely to occur.

- Recognizing flaky tests: Many test failures are not caused by an actual bug but by timing issues, such as a loading timeout, or minor problems in the test code. AI can identify these flaky tests and help stabilize them.
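One common signal behind flaky-test detection is a test that both passes and fails on the same commit. The sketch below applies that rule to run history; it is a plain heuristic rather than a trained model, and the `(test_name, commit_sha, passed)` record format is an assumption for illustration.

```python
from collections import defaultdict

def find_flaky_tests(runs):
    """Flag tests that both passed and failed on the same commit.

    runs: iterable of (test_name, commit_sha, passed) tuples.
    """
    outcomes = defaultdict(set)  # (test_name, commit_sha) -> set of observed results
    for test_name, commit_sha, passed in runs:
        outcomes[(test_name, commit_sha)].add(passed)
    # Two distinct results ({True, False}) on identical code means the test is nondeterministic
    return sorted({test for (test, _), results in outcomes.items() if len(results) == 2})

# Hypothetical run history
history = [
    ("login_test", "a1b2", True),
    ("login_test", "a1b2", False),     # same code, different result: flaky
    ("checkout_test", "a1b2", False),
    ("checkout_test", "a1b2", False),  # fails consistently: more likely a real bug
    ("search_test", "c3d4", True),
]
print(find_flaky_tests(history))  # ['login_test']
```

AI-based tools typically layer more signals on top (timing, retries, error messages), but the underlying question is the same.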

Consider a UI automation team that uses an AI plugin in its Cypress codebase to track test flakiness. The plugin updates UI selectors automatically and, after a UI change, recommends skipping specific tests based on an impact analysis. Such simple inputs can shorten regression testing by eliminating unnecessary test runs.

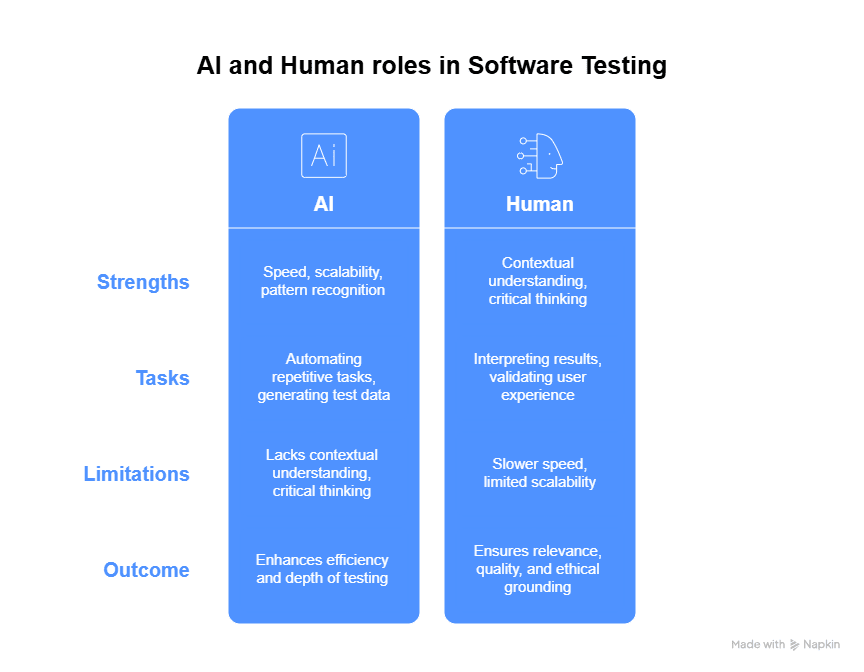

AI-Human Collaboration: How Does it Look?

As new versions of tools and frameworks are released, quality assurance (QA) teams need to keep their test automation suites up to date. Imagine that Cucumber.js releases a new version and the team needs to make its existing test suite compatible with it. Instead of reading through the entire documentation, the team can feed it to an AI agent and extract the necessary details using natural language processing (NLP).

Here, an AI agent that has ingested the Cucumber.js documentation can answer questions, describe new features, and even offer updated code snippets based on the latest changes.

This is AI-human collaboration at its best: humans remain in command, while AI speeds up understanding and implementation.

AI also assists in the following ways:

- Custom test documentation: AI can create test documentation specific to your project, transforming test cases and code into understandable, well-structured documents through natural language processing.

- Smart code suggestions: Based on recent code changes, AI can propose optimized or updated code snippets. This minimizes trial and error and shortens code review cycles.

- Bug triage and prioritization: AI reviews patterns in previous bugs and test outcomes, helping teams address the most severe issues first. It can also identify poorly tested areas of the code and suggest additional test cases to improve coverage.

- Knowledge sharing: New team members can converse with an AI agent to become familiar with frameworks, test strategies, or tool usage in minutes. Because these agents have ingested onboarding documents, they can answer product- and process-related questions.

- Faster root cause analysis: AI can cross-reference log data, errors, and system metrics to recommend the probable root cause of test failures, which can save hours of debugging.

This type of collaboration doesn’t eliminate testers; rather, it assists them and allows them to spend more time on critical thinking and less time on manual tasks.

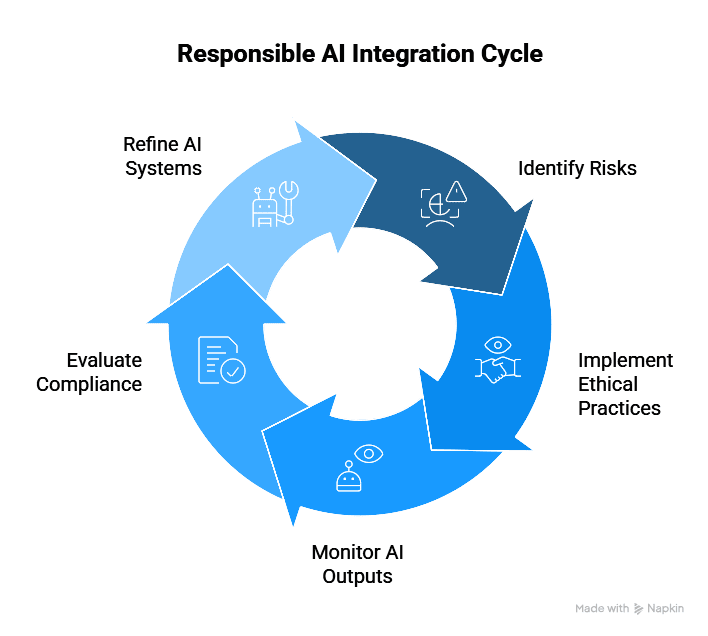

Responsible AI: A Mechanism for Ensuring Software Quality

Integrating AI into existing applications offers clear advantages, but teams must stay aware of ethical boundaries and responsibilities. ‘Responsible AI’ addresses these challenges: it is a set of practices for applying ethical principles when using AI tools in existing workflows and technologies.

The principles of responsible AI focus on identifying risks, such as bias, non-compliance with regulations, and harmful content in the generated output.

The following are the key focus areas to ensure responsible AI:

- Bias and fairness: AI outputs must not discriminate against particular user groups. One way to achieve this is to train AI models on large datasets that represent diverse user groups.

- Compliance with regulations: AI models should comply with data usage and privacy laws such as the GDPR (General Data Protection Regulation). AI-powered features should also be accessible to people with disabilities, following standards such as the Web Content Accessibility Guidelines (WCAG).

- Detection of harmful content: Establish necessary mechanisms such as content filters, moderation layers, and flagging systems to block any offensive or dangerous content generated by the model. The AI models should be thoroughly trained on diverse datasets to identify and prevent this, especially if they are used in customer-facing roles.

- Quality gates throughout the entire lifecycle: Ensure that AI is used responsibly in every phase, from early planning through development and testing. The testing team is responsible for adding checkpoints at each of these stages.

Consider an e-commerce application that uses an AI chatbot to respond to user queries. If users complain that the bot treats specific demographic groups unfairly, the company’s reputation can suffer serious damage. Responsible AI practices are therefore essential when developing such products.
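For the chatbot example above, one simple bias check compares how often each user segment receives a favorable outcome (here, a reply judged helpful). The sketch below assumes those judgments already exist, for example from human review; the segment names and data are hypothetical. The 0.8 threshold borrows the "four-fifths rule" heuristic from US employment-discrimination analysis and should be treated as a starting point, not a compliance standard.

```python
from collections import defaultdict

def favorable_rates(records):
    """records: iterable of (group, favorable) pairs; returns favorable-outcome rate per group."""
    totals, favorable = defaultdict(int), defaultdict(int)
    for group, ok in records:
        totals[group] += 1
        favorable[group] += int(ok)
    return {group: favorable[group] / totals[group] for group in totals}

def disparity_check(records, min_ratio=0.8):
    """Return groups whose rate is below min_ratio times the best-served group's rate."""
    rates = favorable_rates(records)
    best = max(rates.values())
    if best == 0:
        return {}  # nobody is served well; a different check is needed
    return {group: rate for group, rate in rates.items() if rate / best < min_ratio}

# Hypothetical review results: was the chatbot's reply judged helpful for each segment?
records = (
    [("segment_a", True)] * 9 + [("segment_a", False)]
    + [("segment_b", True)] * 6 + [("segment_b", False)] * 4
)
print(disparity_check(records))  # {'segment_b': 0.6}
```

A check like this can run as one of the lifecycle quality gates, failing the build when a segment falls below the threshold.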

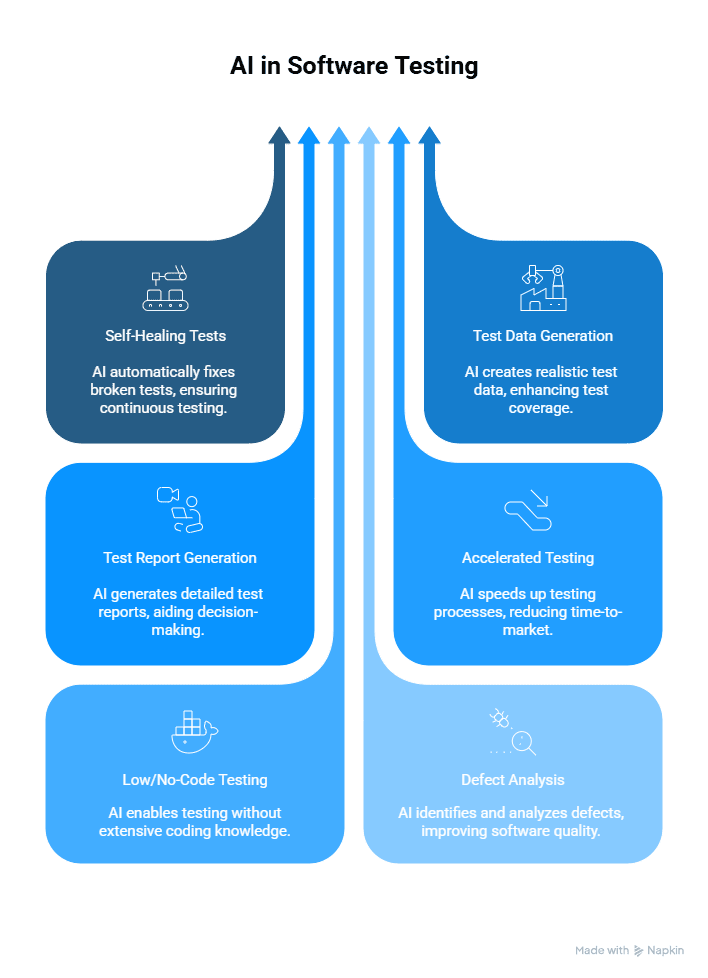

Real World Use Cases

AI’s impact on software quality assurance is both transformative and practical. It helps keep tests stable by generating resilient automation tests and insightful test reports, and it cuts the time spent on manual testing and test documentation by helping teams adopt faster automation.

Let’s look at some real-world use cases of artificial intelligence in software testing:

1. Self-healing tests

Self-healing tests are automated tests that can detect changes in the application UI and automatically adjust test scripts. This avoids failures caused by minor, non-breaking updates and keeps the automation suite up to date.

Here is how AI helps write reliable self-healing tests:

- AI algorithms and computer vision monitor DOM structures and identify changes in UI element locators.

- Machine learning (ML) and deep learning models analyze complex DOM structures and suggest or automatically apply updated locators, eliminating the need for manual intervention.

- Self-healing reduces test flakiness by updating locators whenever the UI changes.
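The core idea can be sketched without any ML: store a "fingerprint" of each element's attributes, and when the primary locator (here, the `id`) no longer matches, pick the most similar element on the page. The `Element` class is a simplified, hypothetical stand-in for a DOM node, and Jaccard similarity replaces the learned models real tools use.

```python
from dataclasses import dataclass, field

@dataclass
class Element:
    """Simplified stand-in for a DOM element."""
    tag: str
    attrs: dict[str, str] = field(default_factory=dict)
    text: str = ""

def fingerprint(el: Element) -> set[str]:
    """Describe an element as a set of 'key=value' features."""
    features = {f"tag={el.tag}", f"text={el.text.strip().lower()}"}
    features |= {f"{key}={value}" for key, value in el.attrs.items()}
    return features

def heal_locator(saved: Element, page: list[Element], min_score: float = 0.5):
    """Find the saved element on the current page, falling back to the closest match."""
    saved_id = saved.attrs.get("id")
    for candidate in page:
        if saved_id and candidate.attrs.get("id") == saved_id:
            return candidate  # primary locator still works, no healing needed
    target = fingerprint(saved)
    best, best_score = None, 0.0
    for candidate in page:
        features = fingerprint(candidate)
        score = len(target & features) / len(target | features)  # Jaccard similarity
        if score > best_score:
            best, best_score = candidate, score
    # Refuse to guess when nothing on the page is similar enough
    return best if best_score >= min_score else None

# The login button's id changed from "login-btn" to "signin-btn"
saved_button = Element("button", {"id": "login-btn", "type": "submit", "class": "primary"}, "Log in")
new_page = [
    Element("a", {"href": "/help"}, "Help"),
    Element("button", {"id": "signin-btn", "type": "submit", "class": "primary"}, "Log in"),
]
healed = heal_locator(saved_button, new_page)
print(healed.attrs["id"])  # signin-btn
```

Returning `None` below the threshold matters: a self-healing test that silently clicks the wrong element is worse than one that fails.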

2. Test data generation

AI-driven test data generation uses generative AI (genAI) and data augmentation techniques to create realistic test data that is both diverse and privacy-compliant across test scenarios.

AI assists with test data generation in the following ways:

- Analyzes application schemas to generate valid, context-aware data automatically.

- Covers edge cases and rare inputs that might be missed by manual generation.

- Reduces dependency on production data, ensuring compliance with data privacy laws.
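To make the schema-driven idea concrete, the sketch below generates synthetic rows from a field schema, plus boundary and just-out-of-range values for each field. It is rule-based boundary-value generation, not generative AI, and the schema format and field names are hypothetical. Because every value is synthetic, no production data is involved.

```python
import random
import string

# Hypothetical schema: field name -> (type, constraints)
SCHEMA = {
    "username": ("str", {"min_len": 3, "max_len": 12}),
    "age": ("int", {"min": 18, "max": 99}),
}

def random_valid(kind, rules, rng):
    """A random value that satisfies the field's constraints."""
    if kind == "int":
        return rng.randint(rules["min"], rules["max"])
    length = rng.randint(rules["min_len"], rules["max_len"])
    return "".join(rng.choices(string.ascii_lowercase, k=length))

def edge_values(kind, rules):
    """Boundary values (valid) and just-out-of-range values (invalid) for one field."""
    if kind == "int":
        lo, hi = rules["min"], rules["max"]
        return [(lo, True), (hi, True), (lo - 1, False), (hi + 1, False)]
    lo, hi = rules["min_len"], rules["max_len"]
    return [("a" * lo, True), ("a" * hi, True), ("a" * (lo - 1), False), ("a" * (hi + 1), False)]

def generate(schema, n_random=3, seed=42):
    """Return (row, expected_valid) pairs: random valid rows plus one-field-at-a-time edge cases."""
    rng = random.Random(seed)  # seeded so failures are reproducible

    def valid_row():
        return {name: random_valid(kind, rules, rng) for name, (kind, rules) in schema.items()}

    rows = [(valid_row(), True) for _ in range(n_random)]
    for name, (kind, rules) in schema.items():
        for value, is_valid in edge_values(kind, rules):
            row = valid_row()
            row[name] = value  # vary a single field so a failure points at one cause
            rows.append((row, is_valid))
    return rows

for row, expected_valid in generate(SCHEMA):
    print(expected_valid, row)
```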

3. Test report generation

AI-powered test report generation utilizes natural language processing (NLP) and summarization algorithms to generate human-readable reports from unstructured test logs.

It improves test reporting in the following ways:

- Techniques such as named entity recognition (NER) and sentiment analysis automatically extract failed test cases, root causes, and trends across runs.

- Translates logs into summaries with actionable tasks for testers and developers.

- Saves time spent manually sifting through logs or compiling reports.
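A small, rule-based version of this idea groups failures by a normalized "error signature" so that repeated failures collapse into one line of the summary. Real tools use NLP models for this step; the regex masking and the simple `PASS`/`FAIL` log format below are assumptions for illustration.

```python
import re
from collections import Counter

# Hypothetical raw test log
LOG = """\
PASS login_test
FAIL checkout_test: TimeoutError after 30000 ms waiting for #pay-btn
FAIL cart_test: TimeoutError after 30000 ms waiting for #cart-icon
FAIL search_test: AssertionError expected 10 results, got 0
PASS profile_test
"""

def signature(message: str) -> str:
    """Normalize a failure message so similar failures share one signature."""
    message = re.sub(r"#[\w-]+", "<selector>", message)  # mask CSS id selectors
    message = re.sub(r"\d+", "<n>", message)              # mask numbers
    return message.strip()

def summarize(log: str) -> str:
    """Count passes and group failures by signature, most frequent first."""
    passed, failures = 0, Counter()
    for line in log.splitlines():
        if line.startswith("PASS"):
            passed += 1
        elif line.startswith("FAIL"):
            _, message = line.split(": ", 1)
            failures[signature(message)] += 1
    lines = [f"{passed} passed, {sum(failures.values())} failed"]
    for sig, count in failures.most_common():
        lines.append(f"  {count}x {sig}")
    return "\n".join(lines)

print(summarize(LOG))
```

Here the two timeouts collapse into a single entry, which points a reader toward one shared cause (for example, a slow page load) rather than two unrelated bugs.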

4. Accelerated testing

Accelerated testing is the process of applying AI to accelerate test execution without compromising overall software quality, especially in continuous integration and deployment pipelines.

AI helps achieve faster testing in the following ways:

- Uses techniques such as impact analysis and automated code coverage calculation, together with historical data, to prioritize high-risk test cases.

- Predicts which parts of the application are most likely to break after a code change.

- Reduces time lost to broken tests by self-healing automation scripts, enabling faster release cycles.
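A minimal sketch of risk-based prioritization, assuming each test has a recorded failure history and a list of source files it covers: the score blends "touches changed code" with "has failed often before". The `CaseHistory` class, file names, and the 0.7 weight are hypothetical; ML-based tools learn such weightings from data instead of fixing them by hand.

```python
from dataclasses import dataclass

@dataclass
class CaseHistory:
    """Hypothetical per-test history record."""
    name: str
    runs: int
    failures: int
    covered_files: set[str]

def risk_score(case: CaseHistory, changed_files: set[str], change_weight: float = 0.7) -> float:
    """Blend 'covers changed code' and 'historical failure rate' into a 0..1 score."""
    failure_rate = case.failures / case.runs if case.runs else 0.0
    touches_change = 1.0 if case.covered_files & changed_files else 0.0
    return change_weight * touches_change + (1 - change_weight) * failure_rate

def prioritize(cases, changed_files):
    """Order tests so the riskiest run first in the pipeline."""
    return sorted(cases, key=lambda case: risk_score(case, changed_files), reverse=True)

history = [
    CaseHistory("profile_test", runs=100, failures=0, covered_files={"profile.py"}),
    CaseHistory("search_test", runs=100, failures=30, covered_files={"search.py"}),
    CaseHistory("checkout_test", runs=100, failures=2, covered_files={"cart.py", "payment.py"}),
]
print([case.name for case in prioritize(history, {"payment.py"})])
# ['checkout_test', 'search_test', 'profile_test']
```

Running the riskiest tests first means a failing pipeline reports its most likely problems within minutes instead of at the end of the run.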

5. Low/No-code testing

Low- or no-code testing environments enable testers to create automated test cases without writing code, typically through drag-and-drop interfaces, record-and-playback frameworks, or natural language. A few such tools are Testim, Leapwork, Postman, and Katalon Studio.

AI helps write codeless test cases in the following ways:

- Uses NLP to convert plain language into executable test scripts.

- Recognizes UI elements and maps them for test steps.

- Proposes test cases based on application usage patterns or user journeys.
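To show what "plain language into executable steps" means mechanically, the sketch below maps sentences to structured actions using regex templates. This is a template-matching stand-in for the NLP or LLM parsing real tools perform, and the action names (`navigate`, `fill`, `click`, `assert_text`) are a hypothetical step vocabulary, not any tool’s API.

```python
import re

# Hypothetical step vocabulary: plain-language template -> action name
PATTERNS = [
    (re.compile(r'open (?:the )?page "(?P<url>[^"]+)"', re.I), "navigate"),
    (re.compile(r'type "(?P<text>[^"]+)" into (?:the )?(?P<target>[\w ]+?) field', re.I), "fill"),
    (re.compile(r'click (?:the )?(?P<target>[\w ]+?) button', re.I), "click"),
    (re.compile(r'should see "(?P<text>[^"]+)"', re.I), "assert_text"),
]

def parse_step(sentence: str) -> tuple[str, dict[str, str]]:
    """Translate one plain-language sentence into (action, parameters)."""
    for pattern, action in PATTERNS:
        match = pattern.search(sentence)
        if match:
            return action, match.groupdict()
    raise ValueError(f"No step pattern matches: {sentence!r}")

script = [
    'Open the page "/login"',
    'Type "alice" into the username field',
    'Click the sign in button',
    'I should see "Welcome, alice"',
]
for step in script:
    print(parse_step(step))
```

A test runner would then execute each `(action, parameters)` pair against the browser; unmatched sentences raise an error instead of being guessed at.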

6. Defect analysis

AI-based defect analysis uses machine learning techniques, including classification algorithms, clustering, and anomaly detection, to identify patterns of bugs and failures, determine their root causes, and predict future issues.

Here’s how AI assists in creating a comprehensive defect analysis:

- Reviews historical defects for common problem areas in the codebase.

- Groups similar defects to avoid duplication and streamline triage.

- Prioritizes bugs by severity, frequency, and user impact.
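The prioritization step above can be sketched as a scoring function over defect records, with a per-component count to surface problem areas. The severity weights and the severity × frequency × log(user impact) formula are illustrative assumptions rather than an industry standard; ML-based tools would learn such weightings from historical data.

```python
import math
from collections import Counter

SEVERITY_WEIGHT = {"critical": 4, "major": 3, "minor": 2, "trivial": 1}  # assumed scale

# Hypothetical defect records
defects = [
    {"id": "BUG-101", "component": "checkout", "severity": "critical", "occurrences": 12, "users_affected": 340},
    {"id": "BUG-102", "component": "checkout", "severity": "major", "occurrences": 5, "users_affected": 40},
    {"id": "BUG-103", "component": "search", "severity": "minor", "occurrences": 8, "users_affected": 150},
    {"id": "BUG-104", "component": "profile", "severity": "trivial", "occurrences": 1, "users_affected": 2},
]

def priority(defect):
    """Hypothetical score: severity x frequency x (log-scaled) user impact."""
    impact = math.log10(1 + defect["users_affected"])  # dampens very large user counts
    return SEVERITY_WEIGHT[defect["severity"]] * defect["occurrences"] * impact

ranked = sorted(defects, key=priority, reverse=True)
hotspots = Counter(d["component"] for d in defects)  # defects per component

print([d["id"] for d in ranked])  # ['BUG-101', 'BUG-103', 'BUG-102', 'BUG-104']
print(hotspots.most_common(1))    # [('checkout', 2)]
```

Note that the minor but widespread BUG-103 outranks the major BUG-102; making that trade-off explicit is the point of scoring on more than severity alone.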

7. Regression automation

AI-driven regression automation combines techniques such as impact analysis, anomaly detection, and machine learning-based test selection. It identifies the areas of the application affected by new code and runs automated tests against them.

Here’s how AI helps in regression automation:

- Generates automated regression tests from user flows and historical usage data.

- Predicts which test cases are most likely to fail given recent code changes.

- Increases regression coverage while decreasing test execution time.
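The impact-analysis step can be sketched as change-based test selection: given a map from each test to the source files it exercises (for example, exported from a coverage tool), run only the tests that touch the changed files, plus a fixed set of smoke tests. The coverage map and test names here are hypothetical, and ML-based selection would add learned failure predictions on top of this basic rule.

```python
# Hypothetical coverage map: test -> source files it exercises
COVERAGE = {
    "test_login": {"auth.py", "session.py"},
    "test_checkout": {"cart.py", "payment.py", "session.py"},
    "test_search": {"search.py"},
}

def select_tests(changed_files, coverage, always_run=frozenset()):
    """Pick tests whose covered files intersect the change set, plus any always-run smoke tests."""
    changed = set(changed_files)
    selected = {test for test, files in coverage.items() if files & changed}
    return sorted(selected | set(always_run))

print(select_tests({"session.py"}, COVERAGE))  # ['test_checkout', 'test_login']
print(select_tests({"search.py"}, COVERAGE, always_run={"test_login"}))  # ['test_login', 'test_search']
```

Keeping a small always-run set guards against gaps in the coverage map, which is the main way this kind of selection can miss a regression.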

Embracing AI as Part of Software Testing

Whenever AI comes up, a common question is whether it will replace software testers. This echoes the long-standing notion that automation would eliminate the need for manual testing. AI should be viewed as an ally in software testing, not a replacement.


AI frees testers from repetitive, laborious tasks so they can focus on more strategic initiatives, such as improving test coverage, designing complex test scenarios, understanding customer needs, and optimizing test processes.

The following are some ways to embrace AI as part of software testing:

- AI as a testing partner: Testers can use AI for many tasks, including triaging and fixing bugs, generating and maintaining test cases, and analyzing data. However, human judgment is still necessary to evaluate test cases in light of user experience.

- Enhancing automation: AI can help create and maintain large automation test suites. It can self-heal selectors and update scripts.

- Human judgment still matters: Human oversight and testing are necessary despite the advantages of using AI. This is essential to ensure that the product is usable for actual users and to prevent ethical issues such as bias or inappropriate content generation.

- Need for testers to adapt: Testers need to learn new skills as AI technologies evolve in order to keep up with the fast pace of change. These include machine learning fundamentals, effective prompting, and critical analysis of AI-generated output.

To sum it up, the future is collaborative. AI is here to stay and will be a permanent part of the testing process. Testers should learn to adapt to it and leverage it for their benefit.

Actionable Advice for QA Leaders and Organizations

Now that we have established why teams should move from conventional testing to AI-powered testing, we turn to what QA leaders and organizations need to do to adopt AI successfully.

Adopting AI is not easy. If it is rushed or adopted without a defined strategy, it is likely to create more problems than it solves. Here’s how companies can effectively adopt AI within their QA efforts:

- Start small, scale smart: Begin with AI-powered solutions that address clear pain points, such as flaky tests or test data generation. These small wins build confidence and win buy-in across teams.

- Invest in skill development: Equip QA teams with the skills to collaborate with AI. Support upskilling in test automation, data analysis, and prompt engineering so teams can use AI tools effectively.

- Create a culture of experimentation: Invite teams to test new tools and learn from each other. Provide space for trial and error when trying out AI-based solutions.

- Align AI with business goals: Ensure that AI efforts are linked to measurable results, such as faster release schedules, improved test coverage, or reduced defect leakage. This supports investment justification and aligns QA activities with business value.

- Track and measure impact: Monitor key metrics, such as test coverage, defect detection rate, and time saved through automation. Use these insights to continuously optimize your AI-driven QA strategy.

- Data governance: Implement strong data governance policies to ensure the integrity, privacy, and security of the data used in AI-driven testing. This includes defining strict guidelines for data access, usage, and regulatory compliance to ensure trust and security during the AI adoption process.

If you are looking to integrate the best AI practices, tools, and technologies into your software testing process, look no further. Testlio can provide you with the right team to help you make a smooth transition from manual testing to AI-driven testing.

Contact our sales team to learn more!