Understanding Test Scripts: A Comprehensive Guide

When a new feature rolls out, the last thing any team wants is to deal with unexpected bugs slipping through testing.

A travel booking app might introduce an easy cancellation option, but without proper validation, users could end up facing incorrect charges or failed reschedules.

The issue? Incomplete testing. Even when a feature is tested, gaps in test coverage can cause real-world failures.

That’s where test scripts come in. They provide a structured way to validate software functionality, ensuring every critical scenario is accounted for before release.

In this article, you will learn what test scripts are and how they fit into the Software Development Lifecycle (SDLC).

You’ll also see how manual and automated test scripts differ, the best practices for writing effective scripts, and how to overcome common challenges in test scripting.

Whether you’re a tester, developer, or project manager, this guide will help you build structured, reusable, and efficient test scripts that improve software quality and user experience.

What Are Test Scripts in Software Testing?

Test scripts are a set of instructions that guide testers through the process of verifying a software feature. They outline a clear sequence of actions to ensure that every function works as intended.

While test scripts are often linked to automated testing, they can also be used in manual testing to maintain accuracy and consistency across different testing environments. A single test case can have multiple test scripts, each designed for a different environment or scenario.

The Software Development Lifecycle (SDLC) relies heavily on test scripts to ensure software quality. Test scripts help teams identify defects early and optimize testing workflows, and they can be incorporated into CI/CD pipelines.

Despite their similarities, test cases and test scripts serve different functions. A test case defines the “what”—the scenario, objective, and expected outcome—while a test script defines the “how”—the exact steps needed to execute the test.

Furthermore, a test script can be either manual or automated. Automated scripts are often written to generate test data and execute repetitive test scenarios efficiently.

While automation speeds up testing, manual scripts remain useful for functional testing and situations where human evaluation is necessary.

Popular programming languages like Python, Java, Ruby, and JavaScript are commonly used for writing automated scripts.

Why Should You Use Test Scripts?

Test scripts are a key part of software testing because they provide a structured, repeatable way to verify whether a system works as expected.

Without them, there is a risk of inconsistencies in testing and the potential of missing crucial details.

Here’s why they matter:

- Covers All Critical Steps: A well-written test script ensures that no necessary steps are skipped during testing. It helps testers check everything from basic functionality to edge cases.

- Ensures Software Meets User Needs: When user requirements are clearly defined, test scripts help verify that the software behaves exactly as specified. This is especially important for business-critical features that need to work flawlessly.

- Makes Testing More Consistent: Without structured test scripts, different testers might take different approaches. Scripts keep testing uniform, so the same steps are followed every time, regardless of who runs the test.

- Removes Guesswork: Instead of testers making assumptions, test scripts provide clear instructions on what to test, how to test it, and what the expected outcome should be.

- Minimizes Human Errors: A well-documented test script reduces mistakes and makes it less likely that a step is overlooked. This helps catch bugs early, saving time and effort later in development.

How to Write a Test Script

There are several ways to create automated test scripts, depending on how complex the tests need to be.

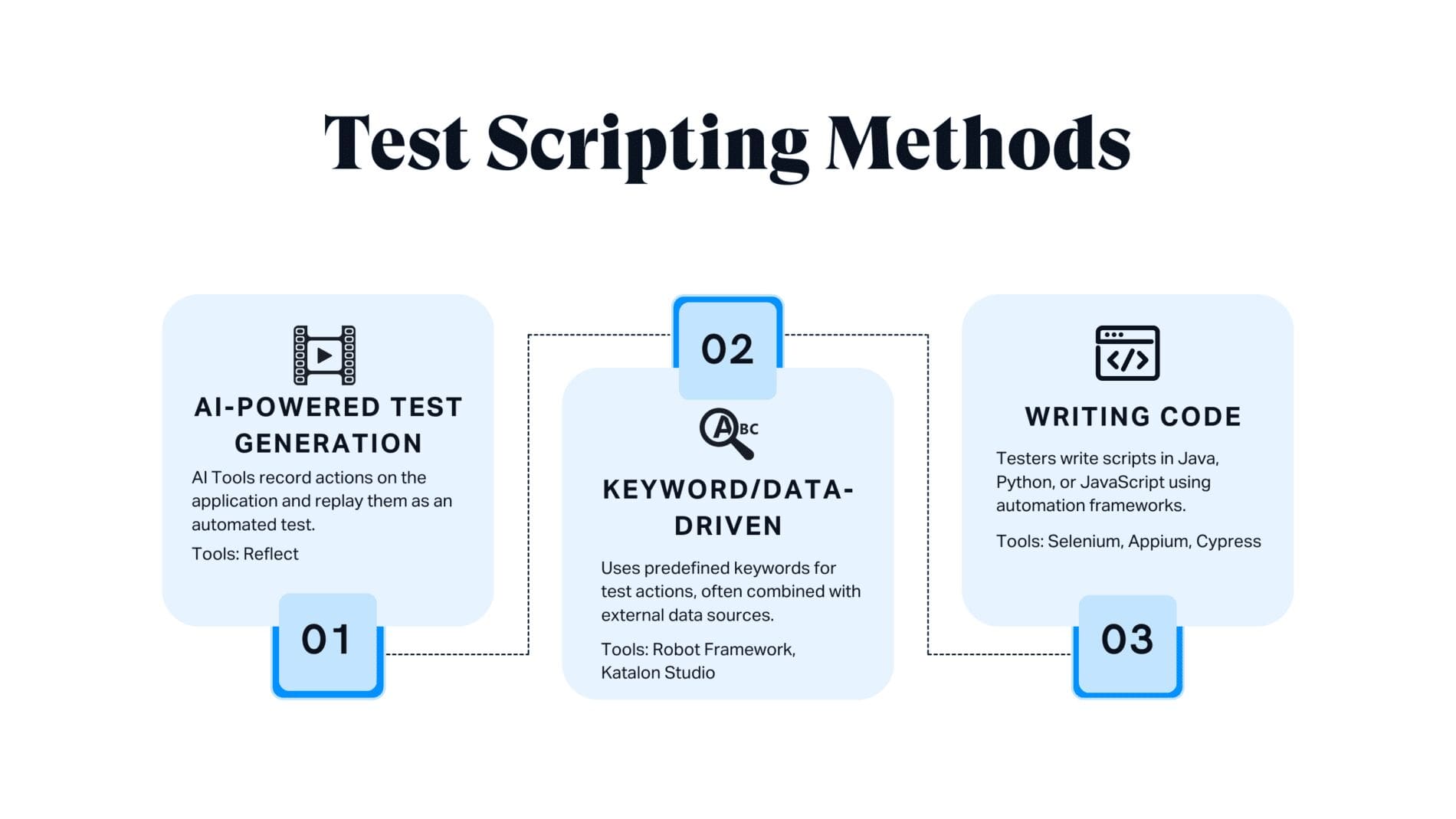

The three most common approaches are record/playback (increasingly AI-assisted), keyword/data-driven scripting, and writing code manually in a programming language.

AI-Powered Test Generation

Traditional record/playback tools require user actions to be manually recorded and replayed. Modern tools like Reflect (SmartBear) use AI to streamline this process, making it simpler to capture user interactions and generate scripts without requiring testers to write code.

The system recognizes UI elements, stores actions, and plays them back exactly as recorded.

Although recording user interactions is straightforward, validating test results requires additional effort. Testers must manually insert validation steps to check whether the application behaves as expected.

For instance, if a test checks whether a welcome message displays a user’s name, AI-powered tools don’t inherently verify it. Instead, testers must specify checks for elements like text values, UI components, and expected outputs.

Many AI-powered script generation tools produce scripts in languages like JavaScript, offering flexibility for advanced testers. Experienced users often modify the script manually to make it more reliable and more adaptable to UI changes.

Keyword/Data-Driven Scripting

This approach organizes tests using predefined keywords that represent user actions. Instead of writing code for every test step, testers input keywords in a structured format, and the automation framework generates the script.

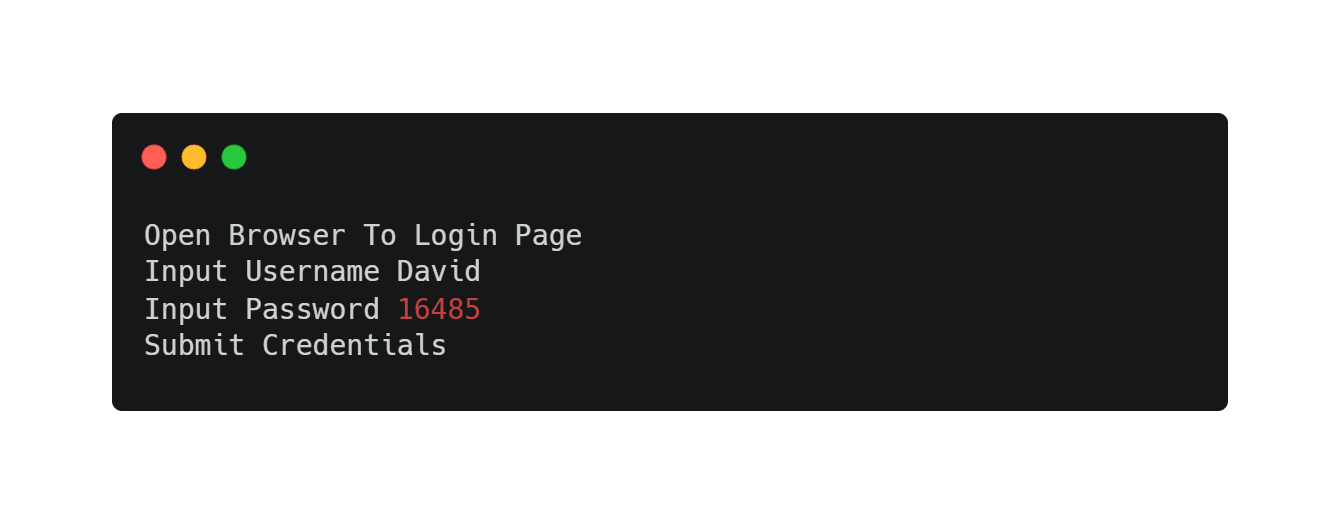

For example, a keyword like “login user” could instruct the script to open the login page, enter credentials, and submit the form. A tester provides data in a table format:

| Username | Password | Action |
| --- | --- | --- |
| David | 16485 | login user |

Or in a simple text script:

The test automation framework then executes these steps based on predefined logic.

A single keyword, such as “login user,” handles simulation, while validation requires separate keywords to check various UI elements, like verifying whether the login was successful.

If multiple UI elements need to be checked, each requires its own keyword, for example one for the user ID, one for the last login date, and one for the account type.

A variation of this approach, data-driven testing, runs the same test multiple times with different data sets.

Testers do not need to write code to use keyword/data-driven scripts, making them accessible to non-developers. Robot Framework is a well-known tool that supports this method.
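To make the idea concrete, here is a minimal Python sketch of how a keyword-driven runner might work behind the scenes. It is not how Robot Framework or any specific tool is implemented. The keyword names, the `run_script` helper, and the in-memory `VALID_USERS` stand-in for the application are all hypothetical.

```python
# Hypothetical keyword-driven runner; the keyword names and the fake
# application state below are illustrative, not a real framework's API.

VALID_USERS = {"David": "16485"}  # stand-in for the application under test


def login_user(state, username, password):
    """Simulation keyword: attempt a login and record who is logged in."""
    state["logged_in_as"] = username if VALID_USERS.get(username) == password else None


def verify_logged_in(state, username):
    """Validation keyword: check that the expected user is logged in."""
    assert state.get("logged_in_as") == username, f"{username} is not logged in"


# Maps keyword text (as a tester would type it) to its implementation
KEYWORDS = {
    "login user": login_user,
    "verify logged in": verify_logged_in,
}


def run_script(rows):
    """Execute (keyword, *arguments) rows in order and return the final state."""
    state = {}
    for keyword, *args in rows:
        KEYWORDS[keyword](state, *args)
    return state


# Data-driven variation: the same steps run once per data row
DATA_ROWS = [("David", "16485")]

results = []
for username, password in DATA_ROWS:
    final_state = run_script([
        ("login user", username, password),
        ("verify logged in", username),
    ])
    results.append(final_state["logged_in_as"])
```

Adding more rows to `DATA_ROWS` reruns the same two keywords with new data, which is the data-driven part. Testers only edit the rows, while the functions behind the keywords are maintained separately.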

Writing Code in a Programming Language

Testers can write scripts using Java, Python, C++, JavaScript, or other programming languages for greater flexibility. Many automation frameworks, like Selenium (for web apps), Appium (for mobile apps), and the now-deprecated Microsoft Coded UI, allow testers to interact with the application programmatically.

With this approach, testers write code to perform actions like clicking buttons, filling out forms, and navigating through screens. The simulation part is straightforward, as frameworks provide built-in commands for interacting with the UI.

However, validating results is more complex. As with record/playback tools, automation frameworks don’t check for correct outputs on their own.

Testers must write custom assertions to compare expected and actual results. For example, an eCommerce test might need to verify that a calculated price follows business rules, requiring additional logic.

Since validation often requires checking multiple UI elements, it can make up a large share of a test script’s code. To maintain efficiency, teams often use modular scripting techniques and reusable components.
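As an illustration of a custom assertion, the sketch below checks a displayed price against a business rule. The rule itself (10% off orders of 100.00 or more) and the helper names are assumptions made up for this example. In a real script, the displayed text would be read from a UI element.

```python
from decimal import Decimal, ROUND_HALF_UP

# Hypothetical business rule: 10% off orders of 100.00 or more
DISCOUNT_THRESHOLD = Decimal("100.00")
DISCOUNT_RATE = Decimal("0.10")


def expected_total(unit_price, quantity):
    """Compute the total the business rule says the app should show."""
    subtotal = Decimal(unit_price) * quantity
    if subtotal >= DISCOUNT_THRESHOLD:
        subtotal -= subtotal * DISCOUNT_RATE
    return subtotal.quantize(Decimal("0.01"), rounding=ROUND_HALF_UP)


def assert_displayed_total(displayed_text, unit_price, quantity):
    """Custom assertion: compare the total read from the UI with the expected one."""
    actual = Decimal(displayed_text.replace("$", "").replace(",", ""))
    expected = expected_total(unit_price, quantity)
    assert actual == expected, f"expected {expected}, but the page shows {actual}"


# In a real script, the first argument would come from a UI element's text
assert_displayed_total("$108.00", "40.00", 3)  # 120.00 minus 10%
assert_displayed_total("$80.00", "40.00", 2)   # below the threshold, no discount
```

Using `Decimal` rather than floats avoids rounding surprises when comparing money values, and the failure message states both the expected and the actual amount.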

Choosing the right approach depends on the complexity of the application, team expertise, and long-term test maintenance needs.

Key Components of Test Scripts

A well-structured test script ensures a clear, consistent, and repeatable testing process. By following a standardized format, teams can save time, maintain accuracy, and keep test execution smooth.

Here’s what goes into a well-defined test script:

1. General Information

This section includes the basic details about the test script, making it easier to track and manage.

- Test Case ID: A unique identifier for the test (e.g., TC002-Checkout).

- Test Title: A short, descriptive name (e.g., Verify Successful Checkout Process).

- Module/Feature: The feature under test (e.g., Shopping Cart, Payment Gateway).

- Test Type: Functional, Regression, Security, or Performance.

- Priority: High, Medium, or Low (based on business impact).

- Tested By: The tester running the script.

- Test Date: When the test was executed.

- Version: The software version that is under test.

2. Prerequisites / Pre-conditions

Before running the test, certain conditions must be met to ensure accurate execution.

- The user must be logged in with an active account.

- The cart should contain at least one item for checkout tests.

- Payment method (e.g., credit card, PayPal) must be configured.

- Internet connectivity should be stable for real-time transactions.

These conditions help testers avoid unnecessary failures due to incorrect setup.

3. Test Steps

This section outlines the step-by-step actions to execute the test. Each step specifies what the tester needs to do and the expected outcome.

| Step No. | Action/Description | Expected Result |
| --- | --- | --- |
| 1 | Open the browser and navigate to the checkout page. | The checkout page should load successfully. |
| 2 | Select a payment method (e.g., credit card). | The selected method should be highlighted. |
| 3 | Enter payment details and click “Pay Now.” | The transaction should be processed. |
| 4 | Verify that a confirmation message appears. | A success message like “Order placed successfully!” should be displayed. |
| 5 | Check that the order details are recorded. | The order should be visible under “My Orders.” |

Each step should be clear and easy to follow so that multiple testers can execute it without confusion.

4. Test Data

Test data ensures coverage of different scenarios, including valid and invalid inputs.

| Field | Input Data | Type |
| --- | --- | --- |
| Email Address | [email protected] | Valid Data |
| Card Number | 4111 1111 1111 1111 | Valid Data |
| Expiry Date | 12/25 | Valid Data |
| CVV | 123 | Valid Data |
| Invalid Card | 1234 5678 9012 3456 | Invalid Data |
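The table does not say what makes the second card number invalid. One plausible reason, assumed here, is that it fails the Luhn checksum that payment card numbers are expected to satisfy, while the first number passes it. The sketch below shows how a script could confirm that its test data really is what the “Type” column claims. The `passes_luhn` helper is written for this example.

```python
def passes_luhn(card_number):
    """Return True if the digits satisfy the Luhn checksum used by payment cards."""
    digits = [int(ch) for ch in card_number if ch.isdigit()]
    total = 0
    # Walk from the rightmost digit, doubling every second one
    for index, digit in enumerate(reversed(digits)):
        if index % 2 == 1:
            digit *= 2
            if digit > 9:
                digit -= 9
        total += digit
    return bool(digits) and total % 10 == 0


TEST_CARDS = [
    ("4111 1111 1111 1111", True),   # listed as valid data
    ("1234 5678 9012 3456", False),  # listed as invalid data
]

for number, should_be_valid in TEST_CARDS:
    assert passes_luhn(number) == should_be_valid
```

A check like this guards against a “valid” data row that the application would reject for reasons unrelated to the feature under test.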

5. Expected Result

This section defines what should happen when the test is executed.

- The checkout should be successful, and an order confirmation should be displayed.

- If the card details are incorrect, an error message should appear.

- The order should be recorded in the order history.

6. Actual Result

After running the test, the actual results are compared to the expected results.

| Step No. | Actual Result | Status (Pass/Fail) | Comments |
| --- | --- | --- | --- |
| 1 | The checkout page loaded successfully. | Pass | – |
| 2 | Payment method selection worked. | Pass | – |
| 3 | Error message displayed for the invalid card. | Fail | Card validation issue |
| 4 | The order was not placed. | Fail | Payment gateway issue |
| 5 | No order found in history. | Fail | Failed due to payment error |

If any step fails, details should be logged with error messages, screenshots, or logs to help debug the issue.

7. Postconditions

This outlines the system state after the test is completed.

- If the transaction was successful, the order should remain in the database.

- If the test fails, no duplicate transactions should be recorded.

- Any temporary test data should be removed (e.g., test payment entries).

These conditions ensure the test environment remains clean and stable for future tests.
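In automated scripts, postconditions like these are usually enforced in a teardown step. The following sketch uses Python’s built-in `unittest` module with an in-memory list standing in for the orders table. The `place_order` function and the `TEST-` prefix convention are hypothetical.

```python
import unittest

# In-memory stand-in for the orders table of a test environment
ORDERS = []


def place_order(order_id, card_ok):
    """Hypothetical checkout call: records the order only if payment succeeds."""
    if not card_ok:
        raise ValueError("payment declined")
    ORDERS.append(order_id)


class CheckoutTest(unittest.TestCase):
    def tearDown(self):
        # Postcondition: remove temporary test data, whether the test passed or not
        ORDERS[:] = [o for o in ORDERS if not o.startswith("TEST-")]

    def test_successful_checkout_records_order(self):
        place_order("TEST-001", card_ok=True)
        self.assertIn("TEST-001", ORDERS)

    def test_failed_payment_records_nothing(self):
        with self.assertRaises(ValueError):
            place_order("TEST-002", card_ok=False)
        self.assertNotIn("TEST-002", ORDERS)
```

Because `tearDown` runs after every test method, a failing test cannot leave stray entries behind for the next one.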

8. Pass/Fail Criteria

A test is marked Pass if all steps meet the expected outcome.

A test is marked Fail if:

- An error prevents a successful checkout.

- Unexpected behavior is observed.

- System performance significantly slows down.
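The pass/fail rule can be expressed in a few lines of code. This sketch applies it to the results from the Actual Result table in the checkout example. The `StepResult` class and helper names are invented for illustration.

```python
from dataclasses import dataclass


@dataclass
class StepResult:
    step_no: int
    actual: str
    passed: bool
    comment: str = ""


def overall_status(results):
    """A script passes only if every step passed; otherwise it fails."""
    return "Pass" if results and all(r.passed for r in results) else "Fail"


def failed_steps(results):
    """List the step numbers that need screenshots or logs attached."""
    return [r.step_no for r in results if not r.passed]


# Results taken from the Actual Result table in the checkout example
checkout_run = [
    StepResult(1, "The checkout page loaded successfully.", True),
    StepResult(2, "Payment method selection worked.", True),
    StepResult(3, "Error message displayed for the invalid card.", False, "Card validation issue"),
    StepResult(4, "The order was not placed.", False, "Payment gateway issue"),
    StepResult(5, "No order found in history.", False, "Failed due to payment error"),
]

print(overall_status(checkout_run))  # Fail
print(failed_steps(checkout_run))    # [3, 4, 5]
```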

9. Notes / Additional Information

This section includes observations, challenges, or additional test conditions.

- If a failure occurs, include any screenshots or logs for debugging.

- Mention any environment-related issues (e.g., payment gateway downtime).

- Add any suggestions for improving test execution.

Types of Scripts

Test scripts fall into two main categories: manual and automated. Each performs a different role within the testing process, depending on the application’s complexity, the testing environment, and the available resources. Let’s talk about each type:

Manual Test Scripts

Manual test scripts are written and executed by testers without using automation tools. They follow a predefined set of steps to manually validate an application’s functionality.

This approach is beneficial for exploratory, usability, and ad hoc testing, where human intuition is crucial for identifying unexpected issues.

Although manual testing requires more time and effort, it excels at uncovering usability concerns, UI inconsistencies, and real-world user behavior that automation might miss.

One of the biggest advantages of manual test scripts is their flexibility. They are ideal for testing UI/UX issues, accessibility concerns, and complex user interactions because testers can adjust their approach based on real-time observations.

However, manual testing can be time-consuming and prone to human error, especially for large-scale applications. It also does not scale well for repetitive tasks, slowing down the testing process.

Automated Test Scripts

On the other hand, automated test scripts are written in programming languages and executed with test automation tools.

These scripts simulate user interactions, validate system responses, and run repetitive tests efficiently. Automation is ideal for frequent regression testing, large-scale test execution, and scenarios where speed and accuracy are critical.

Within automated testing, different types of scripts serve various functions. Unit tests catch early bugs, integration tests ensure modules work together, and functional tests verify business requirements.

Regression tests prevent new updates from breaking features, while performance tests check system behavior under load. Each type helps maintain reliable and secure software.

However, automation isn’t without challenges. Writing and maintaining scripts requires coding skills, which can be a hurdle for teams with limited programming expertise.

Scripts also need regular updates to keep up with UI changes, and flaky tests—where minor UI shifts cause failures—can be a common frustration. While automation accelerates testing, it can’t replace manual testing for exploratory, usability, or edge cases requiring human judgment.

A variety of tools cater to different automation needs. Selenium is widely used for web applications, while TestNG and JUnit provide structured frameworks for Java-based testing.

Appium supports mobile automation across iOS and Android, and modern tools like Cypress and Playwright offer robust solutions for end-to-end web testing.

Example of a Well-Written Test Script

Now that we’ve covered what test scripts are, let’s examine how they are written. We’ll look at both a manual and automated test script to show how structured scripts ensure thorough testing.

Manual Test Script Example

This example tests whether a user can successfully upload a PDF file to a web application.

Test Script Title: Verify that a user can upload a PDF file.

Preconditions:

- The user must have valid login credentials (Username: testUser, Password: testPassword).

- The application must be accessible via a web browser.

Test Steps:

- Open the web application in a browser.

- Log in using valid credentials.

- Navigate to the File Upload section.

- Select a PDF file from the system.

- Click the Upload button.

- Verify that a success message appears confirming the upload.

Expected Result:

The system should successfully upload the PDF file and display a confirmation message.

This structured manual script ensures each step is followed precisely, making it easier to reproduce tests across different environments and testers.

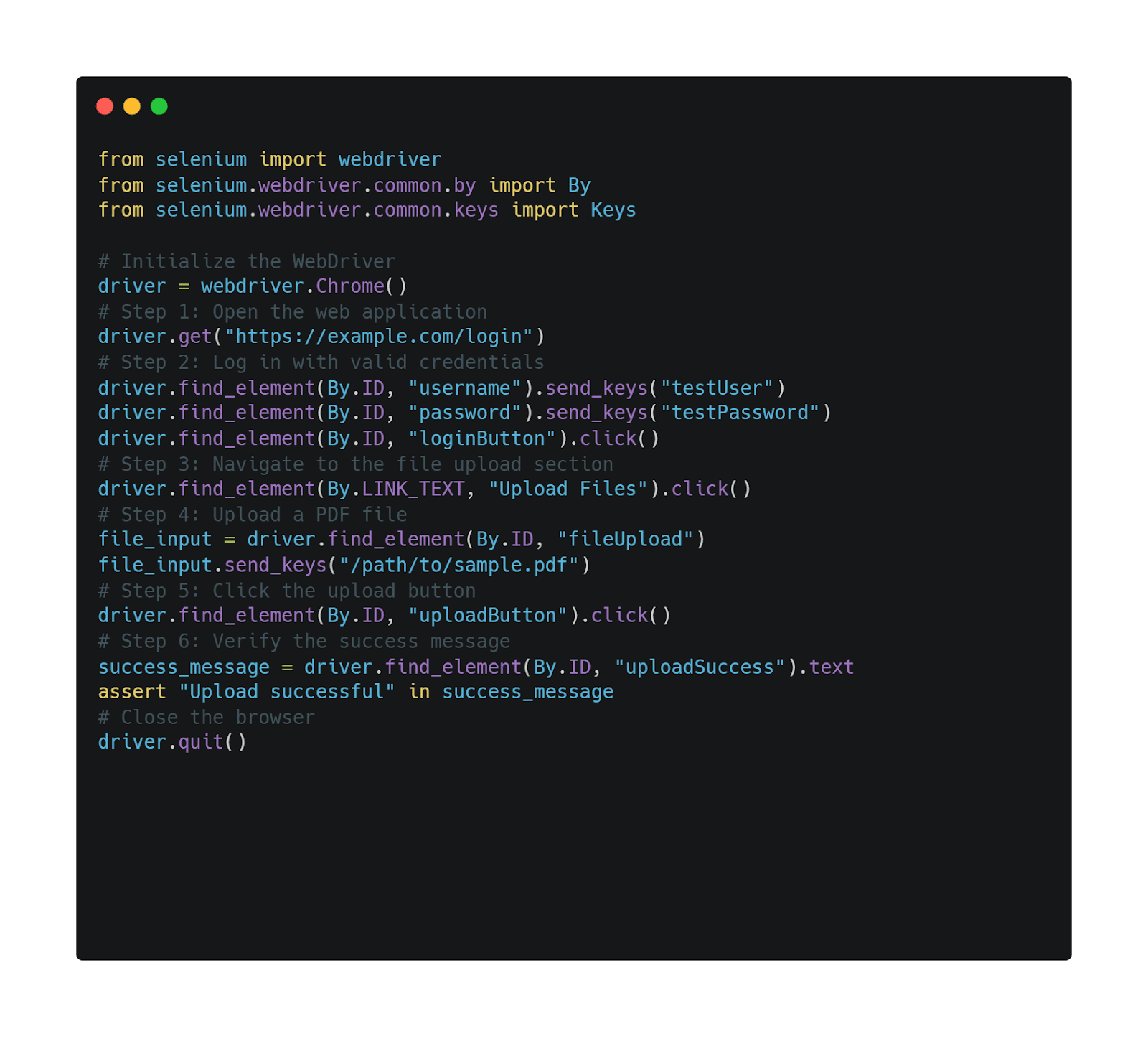

Automated Test Script Example (Selenium + Python)

Automating this test case using Selenium can make the process faster and more efficient.
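A minimal sketch of what such a script might look like is shown below. It mirrors the manual steps above and uses Selenium’s Python bindings. The base URL, page paths, and element IDs (`username`, `file-input`, `upload-success`, and so on) are hypothetical and would need to match your application.

```python
from pathlib import Path

# Hypothetical values: swap in the real URL and element IDs of your application
BASE_URL = "https://example.com"
USERNAME = "testUser"
PASSWORD = "testPassword"


def upload_pdf_and_read_message(driver, pdf_path, timeout=10):
    """Log in, upload a PDF, and return the text of the confirmation message."""
    # Imported inside the function so this file can be loaded without Selenium
    from selenium.webdriver.common.by import By
    from selenium.webdriver.support import expected_conditions as EC
    from selenium.webdriver.support.ui import WebDriverWait

    wait = WebDriverWait(driver, timeout)

    # Steps 1-2: open the application and log in
    login_url = f"{BASE_URL}/login"
    driver.get(login_url)
    driver.find_element(By.ID, "username").send_keys(USERNAME)
    driver.find_element(By.ID, "password").send_keys(PASSWORD)
    driver.find_element(By.ID, "login-button").click()
    wait.until(EC.url_changes(login_url))  # assumes login redirects elsewhere

    # Steps 3-5: go to the upload section, choose the file, and upload it
    driver.get(f"{BASE_URL}/upload")
    file_input = driver.find_element(By.ID, "file-input")
    file_input.send_keys(str(Path(pdf_path).resolve()))  # file inputs take a path
    driver.find_element(By.ID, "upload-button").click()

    # Step 6: wait for the success message instead of sleeping a fixed time
    message = wait.until(
        EC.visibility_of_element_located((By.ID, "upload-success"))
    )
    return message.text


def is_success_message(text):
    """Validation step: decide whether the message confirms the upload."""
    return "success" in text.lower()


def main():
    from selenium import webdriver

    driver = webdriver.Chrome()
    try:
        text = upload_pdf_and_read_message(driver, "sample.pdf")
        assert is_success_message(text), f"unexpected message: {text!r}"
    finally:
        driver.quit()
```

Calling `main()` runs the test against a local Chrome browser. It assumes the `selenium` package is installed and that a file named `sample.pdf` exists in the working directory.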

Expected Result:

- The script automatically logs in, uploads the file, and checks for a success message.

- If the test passes, it confirms that the upload feature works correctly.

- The test will fail if the success message is missing, signaling an issue.

Both manual and automated test scripts ensure a structured approach to verifying functionality.

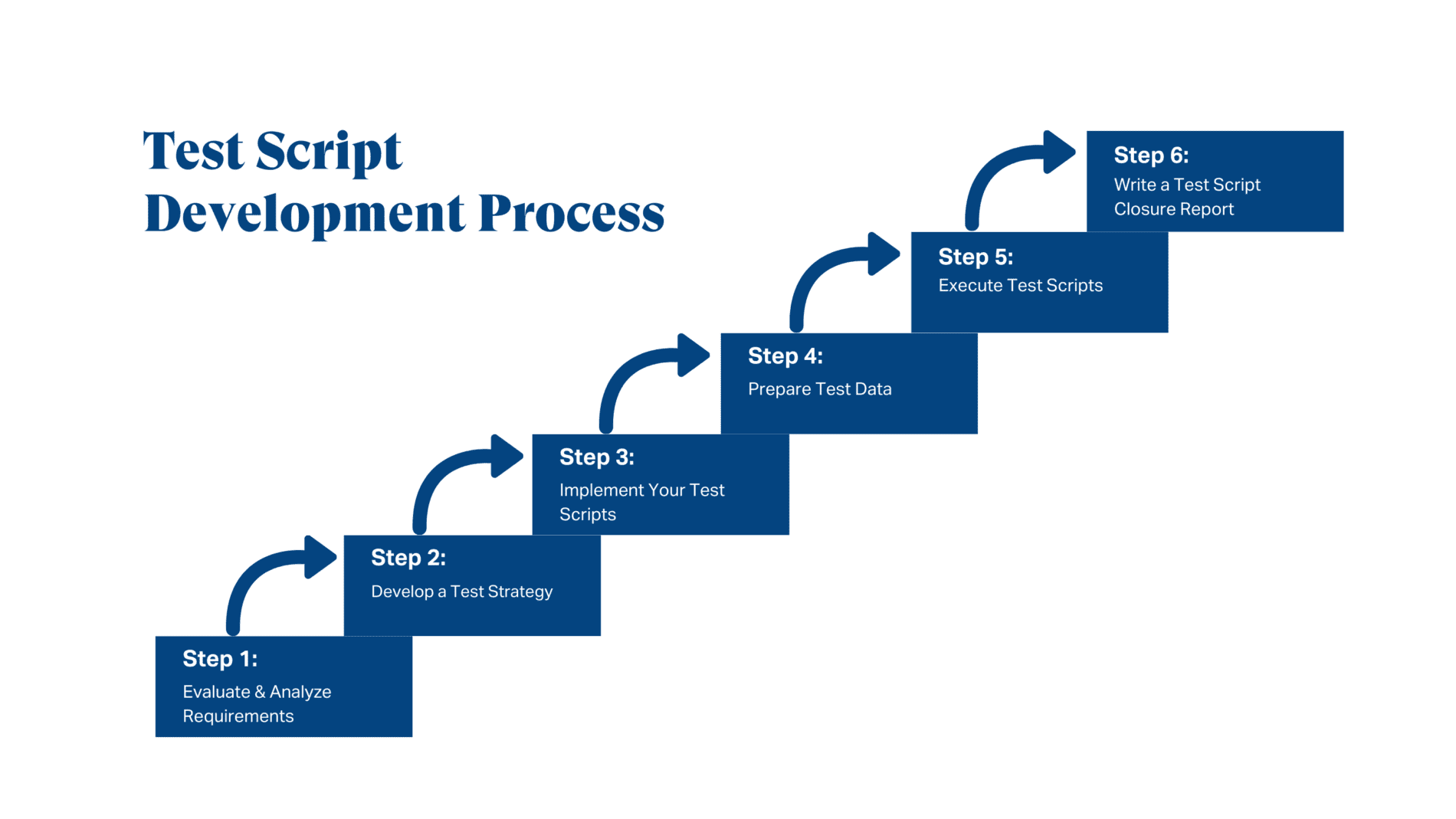

Test Script Development Process

Effective test scripts follow a structured process to ensure all testing requirements are met. Each phase is crucial in developing reliable, maintainable, and efficient scripts.

Requirement Gathering and Analysis

Before writing any test scripts, testers collaborate with stakeholders like product owners, developers, and business analysts to understand the software’s requirements.

This phase is critical for identifying functionality, performance expectations, and behavior that need to be tested.

Testers analyze the system to pinpoint testable scenarios, potential risks, and specific conditions that should be covered in the test scripts.

Test Design and Planning

Once the requirements are clear, the next step is to define a testing strategy. This involves outlining test objectives, scope, testing approaches, and key scenarios.

The goal is to ensure the script covers all necessary test cases, including core functionalities, edge cases, and integration points.

A well-planned test design helps reduce redundancies and ensures that no critical aspect is overlooked.

Test Script Implementation

At this stage, testers create test scripts using scripting languages and automation tools. These scripts contain commands to simulate user actions such as clicking buttons, entering data, and verifying results.

Depending on the project’s needs, scripts may be designed for manual execution or automation frameworks to run them repeatedly.

Test Data Preparation

To be effective, test scripts need relevant data to validate different scenarios. This includes boundary values, valid and invalid inputs, user roles, and system states.

Well-prepared test data ensures scripts test realistic conditions and identify potential issues that might occur in a live environment.

Test Script Execution

Once everything is set up, the test scripts are executed in various environments and configurations.

The system’s responses are monitored closely to identify deviations between expected and actual outcomes.

This phase helps validate whether the application behaves as intended under different conditions.

Test Script Closure

After execution, a test closure report summarizes the testing process. This includes details on which tests were run, results, pass/fail statuses, and any defects found.

With this report, teams can assess testing effectiveness and use it as a reference to improve future testing.

Challenges in Test Scripting

As software evolves, test scripts can break, become unreliable, or fail to keep up with product changes. If not managed well, they can lead to frustration, wasted effort, and missed defects.

Let’s look at some of the biggest challenges in test scripting and how to tackle them.

Keeping Test Scripts Up to Date

Software is constantly changing, and test scripts need to keep up. They can quickly become outdated if not regularly updated, leading to false failures and unreliable results. During one of my early projects, a simple missed step in our test script caused a major production issue, teaching me how critical it is to keep test scripts up to date!

The best way to avoid this is to review and refine test scripts regularly. A modular test design also helps, because updating an individual test component is easier than rewriting an entire script.

Version control and CI/CD integration can streamline test maintenance by tracking changes alongside software updates.

Handling Complex Test Scenarios

Some applications are easy to test, but others involve multiple user actions, complex workflows, and dependencies on external systems. Writing scripts for these scenarios can quickly become messy.

A data-driven or parameterized approach can help handle variations efficiently, reducing the number of hardcoded tests.

Synchronization techniques, like explicit waits, can also prevent failures caused by timing issues in dynamic applications.

For highly complex cases, combining automated testing with manual exploratory testing helps ensure nothing slips through the cracks.

Dealing with False Positives and False Negatives

Nothing is more frustrating than tests failing when nothing is wrong (false positives) or passing when a defect is present (false negatives).

These issues usually stem from unstable environments, incorrect test assertions, or flaky test scripts.

To minimize these problems, use dynamic waits instead of fixed time delays and make sure that test assertions are well-defined.
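The difference between a fixed delay and a dynamic wait is easy to see in code. The sketch below is a small, framework-independent polling helper written for this example. Tools such as Selenium ship their own equivalent (`WebDriverWait`), so in practice you would normally use the one your framework provides.

```python
import time


def wait_until(condition, timeout=5.0, poll_interval=0.1):
    """Poll `condition` until it returns a truthy value or `timeout` seconds pass."""
    deadline = time.monotonic() + timeout
    while True:
        result = condition()
        if result:
            return result
        if time.monotonic() >= deadline:
            raise TimeoutError(f"condition not met within {timeout} seconds")
        time.sleep(poll_interval)


# Stand-in for a page element that becomes ready on the third check
checks = {"count": 0}


def element_is_ready():
    checks["count"] += 1
    return checks["count"] >= 3


wait_until(element_is_ready, timeout=2.0, poll_interval=0.01)
```

A fixed `time.sleep(2)` would always cost two seconds and still fail on a slow day. The polling version returns as soon as the condition holds and fails with a clear error once the timeout passes.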

Limited Resources for Automation

Not every team has unlimited time, budget, or infrastructure for automated testing. Many struggle with limited test environments, lack of automation expertise, or inadequate computing power for large-scale tests.

Instead of automating everything, focus on the highest-value test cases that will yield the most benefit.

Cloud-based testing platforms can help scale resources without heavy infrastructure investments, and well-targeted automation improves efficiency over the long term.

Ignoring Edge Cases

It’s easy to focus on the “happy path” when writing test scripts, but real users don’t always follow the script. Ignoring edge cases can leave serious defects undiscovered.

System test scenarios should include unexpected inputs, unusual scenarios, and failure conditions like entering special characters, handling large amounts of data, and testing system limits.

Boundary tests, negative tests, and randomized input tests can reveal problems that aren’t evident in basic testing.
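Here is a short sketch of all three techniques applied to one input rule. The rule (usernames of 3 to 20 ASCII letters or digits) and the `is_valid_username` function are assumptions chosen for illustration. In a real project, the function under test would come from the application.

```python
import random
import string

MAX_USERNAME_LENGTH = 20  # hypothetical limit for illustration


def is_valid_username(value):
    """Hypothetical rule under test: 3 to 20 ASCII letters or digits."""
    return (
        isinstance(value, str)
        and 3 <= len(value) <= MAX_USERNAME_LENGTH
        and all(ch in string.ascii_letters + string.digits for ch in value)
    )


# Boundary tests: values just inside and just outside each limit
assert not is_valid_username("ab")
assert is_valid_username("abc")
assert is_valid_username("a" * 20)
assert not is_valid_username("a" * 21)

# Negative tests: inputs a user should not be able to submit
for bad in ["", "   ", "user name", "rm -rf /", "<script>", None]:
    assert not is_valid_username(bad)

# Randomized input test: a fixed seed keeps any failure reproducible
rng = random.Random(42)
for _ in range(200):
    length = rng.randint(0, 30)
    candidate = "".join(rng.choice(string.printable) for _ in range(length))
    # The validator must never crash, and anything it accepts must obey the rule
    if is_valid_username(candidate):
        assert 3 <= len(candidate) <= MAX_USERNAME_LENGTH and candidate.isalnum()
```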

Poor Documentation

A test script without documentation is like a recipe without instructions. It might work, but only if you know exactly what to do. Poorly documented scripts can lead to confusion, wasted time, and dependency on specific testers.

Teams can make test scripts easier to understand and modify by including clear comments, maintaining a centralized test repository, and writing self-explanatory code.

New team members can pick up where others left off, keeping testing smooth and efficient.

Best Practices for Writing Clear and Concise Test Scripts

Now that you know how to write a test script manually and using automation tools, it’s just as important to follow best practices to get the most value out of them.

Here are some key practices to keep in mind:

Make Sure They’re Clearly Written

A test script should be easy to understand so that no important steps are missed during testing. If testers need to ask for clarification repeatedly, it wastes time and effort.

A script should be clear not only to QA engineers but to anyone involved in the testing process.

Write Targeted Test Scripts

Each test script should focus on a single action or scenario to keep testing structured. If you’re writing a test script for a successful login, don’t mix it with tests for failed logins.

Instead, create separate scripts for each case to avoid confusion and ensure every condition is tested properly.

Think from the User’s Mindset

Users rarely follow a straight path, so test scripts should cover both expected and unexpected scenarios.

Consider different ways a user might interact with the software and plan tests that check for possible mistakes, incorrect inputs, and unusual behaviors.

Include Comments Within Test Script

Just like in coding, comments help explain the purpose of each test step. This makes it easier to understand the script, troubleshoot issues, and onboard new testers without unnecessary guesswork.

Implement Version Control

Test scripts evolve as software changes, and version control helps keep track of updates. This makes it easier to collaborate, roll back to previous versions, and maintain script integrity.

Use Parameterization

Instead of hardcoding values, use parameterization to make test scripts adaptable across different environments.

This is especially useful in automated testing, where the same script can be reused with different data sets, reducing effort and improving efficiency.
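As a rough illustration, the sketch below reads the target environment from a `TEST_ENV` variable instead of hardcoding a URL, and reuses one test body for several data sets through `unittest`’s `subTest`. The environment names, URLs, and variable name are hypothetical.

```python
import os
import unittest

# Hypothetical environment names and URLs
BASE_URLS = {
    "staging": "https://staging.example.com",
    "production": "https://www.example.com",
}


def base_url(env=None):
    """Pick the base URL from an argument or the TEST_ENV variable (default: staging)."""
    env = env or os.environ.get("TEST_ENV", "staging")
    return BASE_URLS[env]


def login_page_url(env=None):
    """Build the login URL for the chosen environment."""
    return f"{base_url(env)}/login"


class LoginUrlTest(unittest.TestCase):
    def test_login_url_in_each_environment(self):
        # One test body, reused for every environment in the data set
        for env, expected in [
            ("staging", "https://staging.example.com/login"),
            ("production", "https://www.example.com/login"),
        ]:
            with self.subTest(env=env):
                self.assertEqual(login_page_url(env), expected)
```

Switching environments then becomes a matter of setting `TEST_ENV` before the run, with no change to the script itself.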

Conclusion

A well-structured test script is the foundation of reliable software testing. It ensures consistency, reduces human error, and helps teams catch defects before they impact users.

Whether you’re using manual test scripts for exploratory testing or automated scripts for large-scale execution, having a clear and reusable approach can significantly improve software quality.

Teams must navigate challenges such as updating test scripts, handling complex test scenarios, and avoiding pitfalls like false positives or poor documentation.

However, with the right strategy, tools, and expertise, organizations can build a robust testing framework that enhances software reliability and user experience.
