Big Data & Software Testing: A Complete Guide

From social media and Google reviews to sensors and artificial intelligence (AI) assistants, development teams today have access to so much user data, often called big data, that it sometimes feels like a blessing and a curse.

This user data includes both structured and unstructured data from many sources, and it takes big data software testing to ensure that it is processed and analyzed effectively.

Big data software testing is vital for making informed testing decisions and for ensuring that organizations meet users’ expectations of a flawless experience. However, it can be challenging to incorporate big data insights into testing processes effectively.

This guide explains the challenges and opportunities of big data software testing and analytics, how they help ensure high-quality app experiences, and their impact on the future of software quality assurance.

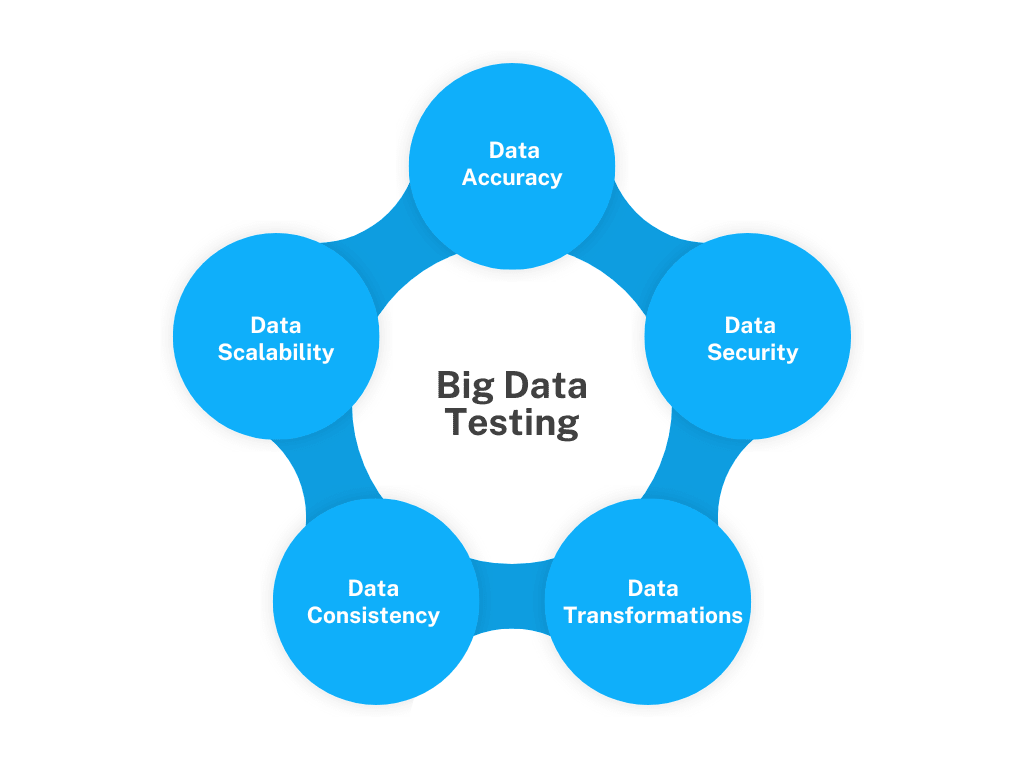

What is Big Data Testing?

Big data software testing is the process of checking whether a system correctly processes, stores, and analyzes large amounts of data.

Unlike traditional software testing, big data software testing deals with massive datasets, which may be structured (like databases) or unstructured (like social media posts or logs).

In big data software testing, the data can range from databases and spreadsheets to user data like social media posts, Internet of Things (IoT) device logs, or multimedia files. The goal is to ensure the data is accurate, reliable, and efficiently processed across clusters and nodes.

Moreover, big data software testing ensures that data retains its integrity throughout its journey from input to output.
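One way to check that data keeps its integrity between input and output is to reconcile the two ends of a pipeline. The sketch below is a minimal illustration rather than a prescribed method. It assumes both datasets fit in memory as lists of JSON-serializable dictionaries, and the function names `record_fingerprint` and `compare_datasets` are hypothetical. It compares row counts and order-independent record digests.

```python
import hashlib
import json
from collections import Counter


def record_fingerprint(record: dict) -> str:
    """Return a SHA-256 digest of one record that does not depend on key order."""
    canonical = json.dumps(record, sort_keys=True, separators=(",", ":"))
    return hashlib.sha256(canonical.encode("utf-8")).hexdigest()


def compare_datasets(source: list, target: list) -> dict:
    """Compare two datasets by row count and by their multisets of record digests."""
    src = Counter(record_fingerprint(r) for r in source)
    tgt = Counter(record_fingerprint(r) for r in target)
    missing = src - tgt      # records lost or altered on the way to the target
    unexpected = tgt - src   # records in the target with no match in the source
    return {
        "source_count": len(source),
        "target_count": len(target),
        "missing": sum(missing.values()),
        "unexpected": sum(unexpected.values()),
        "match": not missing and not unexpected,
    }
```

At real big data volumes, the same idea is usually applied per partition or to aggregated checksums rather than to full in-memory lists.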

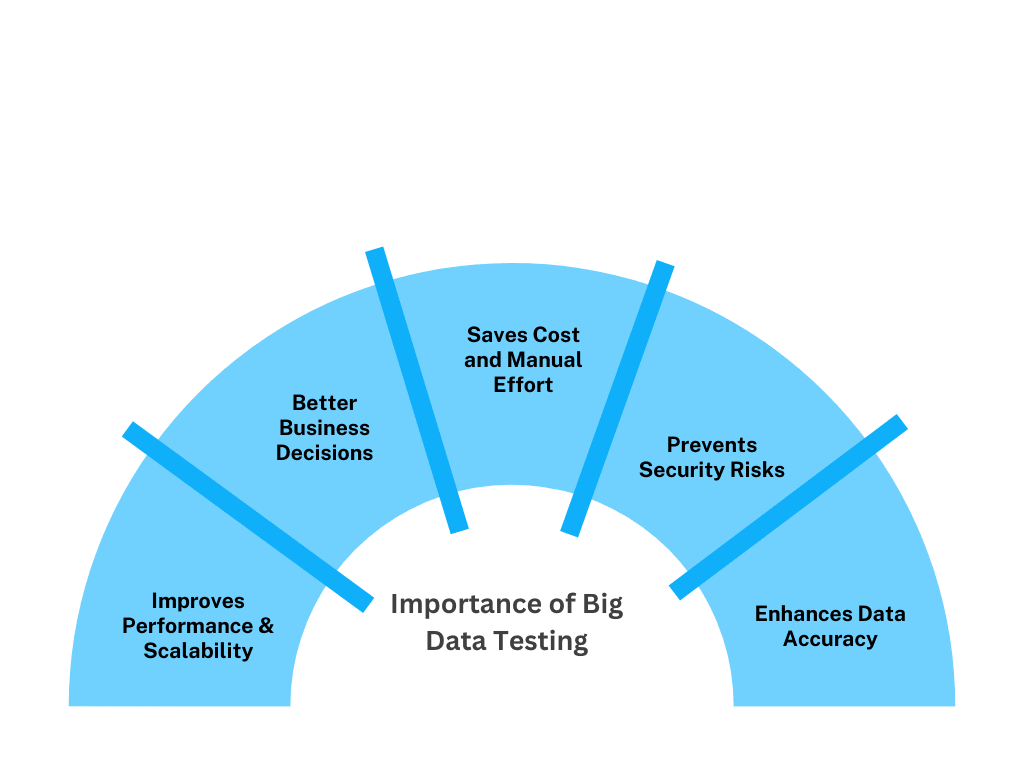

Why is Big Data Testing Important?

According to IDC, the global data sphere will reach 181 zettabytes by 2025. This is a significant jump from 120 zettabytes in 2023. As data grows exponentially, ensuring its quality is becoming increasingly important.

Big data testing validates that the systems can handle huge amounts of data without compromising accuracy, security, and performance.

More importantly, big data testing helps companies make better decisions by ensuring that data is reliable and that systems scale, both of which are needed to put big data insights to good use.

Some key benefits of big data software testing are:

- Cost savings: Data errors in production can be costly and damage a reputation. Big data software testing helps detect anomalies early and saves time and money.

- Better decision-making: Unverified system data, such as website logs and transaction histories, can lead to bad decisions. Testing big data verifies that this data is accurate and well understood, which enables data-backed decisions.

- Improved performance: Testing systems on higher loads of data ensures that systems continue functioning efficiently even when the data volume expands. This helps teams identify potential bottlenecks associated with large data inputs.

- Enhanced data accuracy: From data ingestion (input) to analysis (output), big data software testing enhances data quality. It ensures that data is structured, processed, and formatted correctly.

- Reduced security risks: Big data software testing helps verify that systems comply with privacy regulations like GDPR or HIPAA. It checks the encryption, access controls, and data privacy measures that protect against unauthorized access.
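To make the data accuracy benefit above more concrete, the following sketch shows one possible record-level check that flags missing or badly formatted fields before they reach analysis. The schema (`user_id`, `event`, `timestamp`) and the names `SCHEMA` and `validate_record` are assumptions made for illustration. A real pipeline would define its own fields and rules.

```python
from datetime import datetime


def _is_iso_timestamp(value) -> bool:
    """True if value is a string in ISO 8601 format that datetime can parse."""
    try:
        datetime.fromisoformat(value)
        return True
    except (TypeError, ValueError):
        return False


# Hypothetical schema: field name -> function that returns True for valid values.
SCHEMA = {
    "user_id": lambda v: isinstance(v, int) and v > 0,
    "event": lambda v: isinstance(v, str) and v != "",
    "timestamp": _is_iso_timestamp,
}


def validate_record(record: dict) -> list:
    """Return the names of fields that are missing or fail their rule."""
    errors = []
    for field, is_valid in SCHEMA.items():
        if field not in record or not is_valid(record[field]):
            errors.append(field)
    return errors
```

An empty list means the record passed every rule. Counting non-empty results across a batch gives a simple error rate to track over time.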

How Does Big Data in Software Testing Lead to Improved Products?

Incorporating big data analytics into software testing leads to improved products, enhanced testing efficiency, and more informed decisions. It helps create more user-centric digital experiences by identifying previously hidden patterns and trends, performance bottlenecks, and potential areas of improvement.

Here’s how big data insights can improve testing outcomes:

User Sentiment Through Reviews

User reviews on platforms like social media and Google provide invaluable sentiment data. Analyzing this data helps identify common pain points and areas of satisfaction. By understanding user emotions and opinions, teams can prioritize the issues that most significantly impact user experience.

Optimizing test cases based on priority ensures your testing resources are utilized more efficiently. This reduces testing time and costs and allows you to allocate resources to other critical activities.
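As a rough illustration of sentiment-based prioritization, the sketch below ranks features by how many negative reviews mention them. It assumes each review has already been tagged with a `feature` and a 1 to 5 star `rating`. That tagging step, the field names, and the function `prioritize_features` are all hypothetical.

```python
from collections import Counter


def prioritize_features(reviews: list, negative_threshold: int = 2) -> list:
    """Rank features by number of negative reviews (rating <= negative_threshold)."""
    negative = Counter(
        review["feature"]
        for review in reviews
        if review["rating"] <= negative_threshold
    )
    # most_common() orders by count, highest first.
    return [feature for feature, _ in negative.most_common()]
```

Features that never receive a negative review are left out of the ranking, so test effort can be concentrated on the areas users complain about most.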

User Usage Patterns Through Analytics

Analytics from user interactions offer insights into how users engage with your application. This includes identifying frequently used features, navigation patterns, and areas where users encounter difficulties. By examining these usage patterns, your team can design tests that mirror real-world user behavior, ensuring that the most critical paths are thoroughly evaluated.
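A simple way to find candidate critical paths is to count the screen sequences that appear most often in session logs. The sketch below assumes each session is available as an ordered list of screen names. The function name `top_paths` and the sample data shape are assumptions, not part of any particular analytics product.

```python
from collections import Counter


def top_paths(sessions: list, length: int = 3, limit: int = 5) -> list:
    """Count every contiguous run of `length` screens and return the most common."""
    counts = Counter()
    for screens in sessions:
        # Slide a window of `length` screens over the session.
        for start in range(len(screens) - length + 1):
            counts[tuple(screens[start:start + length])] += 1
    return counts.most_common(limit)
```

The most frequent sequences are natural candidates for end-to-end test cases, since they reflect what users actually do rather than what the team expects them to do.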

Comprehensive and Insight-Driven Testing

You can achieve comprehensive testing by combining sentiment analysis with usage analytics. Here are the key areas where big data analytics can help inform testing:

- Device Testing that Mimics User Usage Patterns: Test cases informed by real-world usage patterns ensure that applications perform optimally across preferred devices by simulating user interactions. This approach helps identify and resolve issues that users might face on different devices.

- Signal-Driven Testing with AI: Leveraging AI to evaluate user sentiment can guide the focus of testing efforts. AI-driven testing can prioritize quality signals such as negative feedback or high user activity, using intelligently curated rules to ensure these aspects receive the necessary attention and improvement.
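The idea of curated rules acting on quality signals can be sketched with plain thresholds. The example below is a rule-based stand-in, not an AI model. The `FeatureSignals` fields, the function `flag_for_testing`, and both threshold values are assumptions chosen for illustration.

```python
from dataclasses import dataclass


@dataclass
class FeatureSignals:
    name: str
    negative_reviews: int
    total_reviews: int
    daily_sessions: int


def flag_for_testing(signals, max_negative_ratio=0.25, high_activity=10_000):
    """Return {feature name: [reasons]} for features that trip at least one rule."""
    flagged = {}
    for s in signals:
        reasons = []
        ratio = s.negative_reviews / s.total_reviews if s.total_reviews else 0.0
        if ratio > max_negative_ratio:
            reasons.append("negative feedback")
        if s.daily_sessions >= high_activity:
            reasons.append("high activity")
        if reasons:
            flagged[s.name] = reasons
    return flagged
```

In practice, the thresholds would be tuned against historical defect data, and a trained model could replace or supplement the fixed rules.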

Challenges of Testing with Big Data

While incorporating big data into testing has benefits, teams must understand and overcome its unique challenges to ensure success. Big data is characterized by the three Vs (volume, velocity, and variety), each of which presents its own challenge.

Volume

Modern applications need to be able to process terabytes or even petabytes of data generated by millions of users. This data is a powerful resource for identifying user patterns and behaviors, which are then used as inputs to create test scenarios or to train AI to suggest test scenarios.

Velocity

Every user interaction, such as swipes, clicks, and reactions, generates critical data from multiple sources. Testing teams must leverage advanced tools and techniques to analyze this data rapidly to ensure these inputs are quickly turned into meaningful test scenarios.

Variety

Big data comes in diverse formats: text, images, videos, and sensor data. The challenge is not necessarily in how the apps process these data types but in ensuring that the right user inputs are sourced correctly. Testing teams must use thorough test coverage and specialized tools to handle various data formats and extract valuable insights from user behaviors.

Strategies for Ensuring High Quality

So, how can you incorporate big data insights into your testing processes? Here are some recommended strategies.

Test Using Real-World Data

To ensure that big data delivers value, it is essential to use real-world insights to simulate user behavior and identify potential issues. Creating realistic and meaningful test scenarios guarantees your application performs well under real-world conditions and covers the most critical and common user journeys.

It’s crucial to combine data-driven test scenarios with in-location testing for essential features. While some functionalities are easy to test in simulated environments, conducting tests with localized resources is critical to ensure your app performs as expected under different environments.

Since managing in-field resources is time-consuming and costly, consider a crowdsourced testing vendor like Testlio to ensure your app is thoroughly tested in simulated and real-world conditions.

Invest in Automation Testing

With the complexity and scale of big data insights, manual testing becomes impractical for repeatable and highly stable test cases. Automated tests increase efficiency and allow teams to leverage big data analytics more effectively. Here’s how:

- More Comprehensive Test Coverage: By identifying common user behaviors and patterns, teams can automate testing for critical user paths to ensure comprehensive coverage and more accurate simulation of user scenarios. This includes identifying and fixing performance bottlenecks, usability issues, and other critical factors that affect how users interact with the application.

- CI/CD Integration: Seamless integrations with CI/CD pipelines allow for continuous testing and rapid feedback cycles. By continuously analyzing user data and feeding this information into automated tests, teams can ensure each new build is evaluated against the latest usage patterns and user feedback.

- Improved Efficiency: Automated testing driven by big data insights can significantly reduce testing time and costs. Organizations can avoid unnecessarily testing low-priority areas by prioritizing automation based on user impact and usage patterns.

Implement Next-Gen Technologies

AI-powered testing is crucial in the age of big data. It can analyze large datasets to identify patterns and anomalies, which can help detect issues that traditional testing methods might miss. AI can flag quality signals generated from testing activities, predict failures, and recommend preventive measures, improving the overall quality and reliability of applications.

However, effective use of AI still requires human intervention. Therefore, finding the right balance between human input and machine assistance is crucial for successful implementation.
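The anomaly detection mentioned above can be illustrated with a basic statistical check. The sketch below uses z-scores as a simple stand-in for the pattern analysis an AI-powered tool might perform. The function name `find_anomalies` and the default cutoff of 2.5 are assumptions to be tuned per metric.

```python
from statistics import mean, stdev


def find_anomalies(values: list, threshold: float = 2.5) -> list:
    """Return (index, value) pairs whose z-score magnitude exceeds threshold."""
    if len(values) < 2:
        return []  # stdev needs at least two data points
    mu = mean(values)
    sigma = stdev(values)
    if sigma == 0:
        return []  # all values identical, nothing stands out
    return [
        (index, value)
        for index, value in enumerate(values)
        if abs(value - mu) / sigma > threshold
    ]
```

Applied to a series such as response times per build, the flagged points are candidates for human review. In small samples a single outlier inflates the standard deviation, so median-based methods may be more robust.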

Here at Testlio, our AI-enhanced testing solution combines human intuition from our extensive vetted network of experts with the speed and efficiency of machines. This ensures smarter decision-making and higher-quality products at a greater speed, efficiency, and scale.

Big Data Testing Tools

Big data software testing tools identify defects in data quality and performance. Teams must first understand their system’s requirements to choose the right tool.

QA teams should consider data architecture, scalability, and the speed needed for real-time processing when selecting a big data software testing tool. This ensures that the selected tools meet the organization’s specific needs.

Let’s take a look at common big data software testing tools and their key features:

1. Apache Hadoop

Apache Hadoop is one of the most popular frameworks for handling large-scale data processing.

Hadoop is crucial in testing data pipelines, enabling testers to simulate large data sets across multiple nodes to ensure distributed data quality.

Some key features of Apache Hadoop are:

- Offers distributed storage and computational capabilities across clusters.

- Provides scalable architecture.

- Is batch-oriented by design, but supports low-latency, real-time data access when integrated with tools like Apache HBase.

2. Apache Spark

Apache Spark is known for its speed and efficiency in handling batch and real-time data.

Testers use Spark to simulate real-time data flows and to process large datasets quickly when testing data transformations and aggregations.

Some key features of Apache Spark are:

- Provides in-memory data processing for faster performance.

- Supports both batch and real-time data.

- Compatible with Hadoop for storage.

- Has built-in APIs for complex data workflows.

3. HP Vertica

HP Vertica (the product is now part of OpenText) is a columnar database management system designed for big data analytics.

It is used to test big data warehouses and to run analytical queries on large datasets.

The following key features distinguish HP Vertica:

- Uses a columnar storage architecture for efficient querying.

- Provides high query performance on large datasets.

- Handles high data ingestion rates.

- Uses compression for better storage management.

4. HPCC (High-Performance Computing Cluster)

HPCC Systems is an open-source data processing platform that uses ECL (Enterprise Control Language), a high-level programming language, to facilitate data processing.

HPCC is used to test complex data workflows at high speed.

Key features of HPCC are:

- Faster data processing than Hadoop for specific cases.

- Scalable across large clusters.

- Open-source and adaptable to different environments.

5. Cloudera

Cloudera provides a commercial distribution of Apache Hadoop, offering additional features for big data management.

It can be used for data processing, testing data pipelines, managing data lakes, validating data security, and machine learning.

Some key features of Cloudera are:

- Is well suited to data analytics and machine learning.

- Integrates with Hadoop, Spark, and Kafka.

- Provides strong security and governance features.

- Provides scalable data storage and processing.

6. Cassandra

Apache Cassandra is a highly scalable NoSQL database designed to manage large amounts of data across multiple servers.

Testers use Cassandra to validate data consistency, scalability, and availability across multiple nodes.

Key features of Cassandra are:

- Provides decentralized architecture for no single point of failure.

- Scales out by adding more nodes.

- Supports large volumes of structured and semi-structured data.
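When validating consistency across nodes, it helps to know which read and write settings should return the latest write. In Cassandra, a read overlaps the most recent successful write when the number of replicas written plus the number of replicas read is greater than the replication factor. The helper names below are hypothetical and only encode that arithmetic. They do not talk to a cluster.

```python
def quorum(replication_factor: int) -> int:
    """Number of replicas that make up a quorum: a strict majority."""
    return replication_factor // 2 + 1


def reads_see_latest_write(
    replication_factor: int, write_replicas: int, read_replicas: int
) -> bool:
    """True when every read set must share at least one replica with the write set."""
    return write_replicas + read_replicas > replication_factor
```

For example, with a replication factor of 3, writing and reading at quorum (2 replicas each) satisfies the condition, while writing and reading a single replica does not. Test cases for consistency can be planned around that boundary.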

7. Storm

Apache Storm is an open-source real-time computation system. Testers use Storm to validate the efficiency and accuracy of real-time data processing across various applications.

Key features of Storm are:

- Supports complex event processing.

- Provides high throughput and low latency.

- Processes data streams continuously, allowing immediate analysis.

- Scales easily by adding more nodes to the cluster.

The Future of Software Testing and Big Data

The future of big data brings both challenges and opportunities for software quality. As software testing methods evolve to incorporate AI and machine learning techniques like predictive analytics, teams can anticipate and address issues before they affect users.

Furthermore, with the increasing popularity of edge computing, where data is processed closer to its source, testing strategies must evolve to ensure quality and performance at the edge. This will require new tools and frameworks for processing distributed data and providing real-time insights.

By embracing innovative testing strategies, leveraging real-time user insights, and harnessing advanced technologies, organizations can guarantee that their applications deliver high-quality, reliable, and secure user experiences.

With Testlio’s expertise and comprehensive testing solutions, organizations can confidently navigate the complexities of big data analytics and achieve excellence in software quality.

Talk to a member of our team today to learn how we can help you make software testing more effective and efficient.