How to Write Test Cases

Writing and maintaining test cases takes up 25% to 35% of a software testing team’s time. Yet poorly written or incomplete test cases can lead to missed defects, inefficient testing, and costly rework.

Imagine that, shortly after a release, some users find they can no longer reset their passwords. After investigating, the development team realizes the issue wasn’t caught in testing because the test case for password reset didn’t account for special characters in usernames.

A simple oversight led to frustrated users, negative reviews, and emergency fixes. This kind of issue is more common than you might think.

So how do you write test cases that are clear, structured, and improve software quality?

This guide will take you through the step-by-step process of writing practical test cases, from defining test scenarios to structuring test steps and expected results.

You’ll learn best practices and techniques for different types of testing and how to optimize your test cases to save time, improve test coverage, and reduce software failures before they reach production.

What are Test Cases?

A test case is a complete set of conditions and steps to check if a part of the software works correctly. Simply put, it tells you what to test, what data to use, and what the expected result should be.

As a tester, it helps you ensure the software behaves as expected in different situations. However, your focus should be on writing clear and well-structured test cases.

This practice keeps testing consistent, makes bugs easier to find, and gives the whole team a shared understanding of how test cases should be written.

There are many types of test cases, like functional, UI, and performance, each serving a different purpose.

Good test cases directly impact the efficiency and effectiveness of testing. If you know how to write a test case, you’re more likely to find defects early and reduce bugs in production.

On the other hand, if you write poor test cases, they can cause confusion, missed scenarios, or incorrect results, which can hurt software quality.

Poorly written test cases might result in lower test coverage, missed defects, and a higher defect density, ultimately leading to more issues in production.

Essential Components of a Test Case

Each test case should include a standard set of components to capture all necessary details.

Here are the essential components of a test case and what each means:

- Test Case ID: A unique identifier for tracking the test case, such as TC-Login-001.

- Title/Name: A brief, descriptive title summarizing the test scenario, like “Verify user login with valid credentials.”

- Objective/Description: A short explanation of the test case’s purpose and scope, detailing what you are testing and why.

- Preconditions: Setup conditions or prerequisites needed before test execution, such as system state or data setup.

- Test Steps: A clear, step-by-step sequence of actions to perform, each step being precise and atomic.

- Test Data: Specific input values or data required for the test, ensuring repeatability and consistency.

- Expected Results: The expected outcome after executing the test steps, described in specific and observable terms.

- Postconditions: The state of the system after test execution, including any necessary cleanup or reset actions.

- Actual Results: What actually happened when the test was run, recorded during test execution.

- Status: Pass/Fail status after running the test, indicating whether the actual results matched the expected outcome.

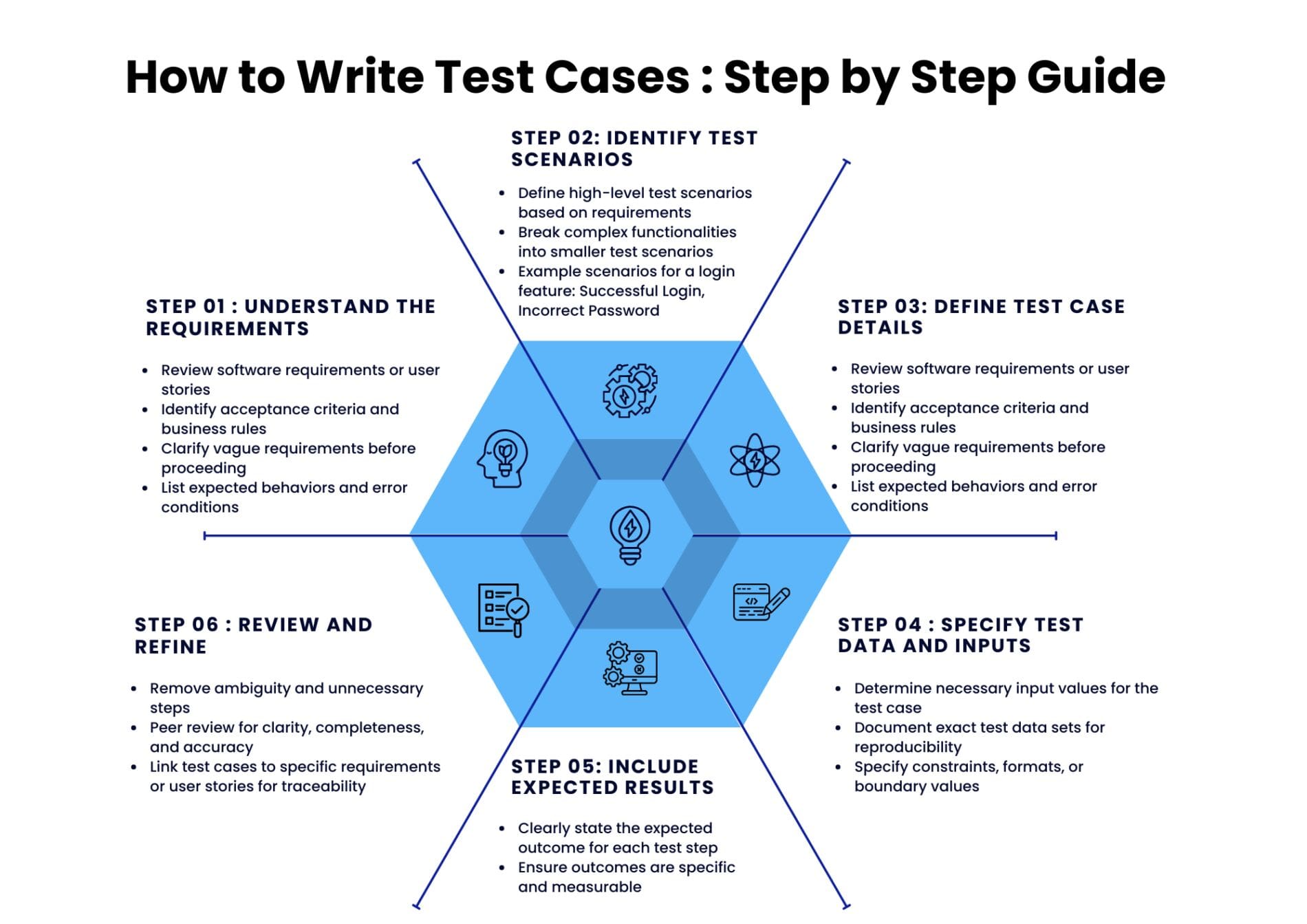
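As a rough sketch, the components listed above could be captured in a small Python record. The class name `TestCaseRecord`, the field names, the `record` helper, and the sample values are assumptions made for illustration, not a standard format.

```python
from dataclasses import dataclass, field
from typing import List, Optional


@dataclass
class TestCaseRecord:
    """One test case; the fields mirror the components listed above."""
    case_id: str
    title: str
    objective: str
    preconditions: List[str]
    steps: List[str]
    test_data: dict
    expected_result: str
    postconditions: List[str] = field(default_factory=list)
    actual_result: Optional[str] = None   # filled in during execution
    status: str = "Not Run"               # becomes "Pass" or "Fail"

    def record(self, actual_result: str) -> None:
        """Store the observed outcome and derive the status from it."""
        self.actual_result = actual_result
        self.status = "Pass" if actual_result == self.expected_result else "Fail"


# Hypothetical example values.
login_case = TestCaseRecord(
    case_id="TC-Login-001",
    title="Verify user login with valid credentials",
    objective="Confirm that a registered user can reach the dashboard.",
    preconditions=["User account exists"],
    steps=["Open the login page", "Enter email and password", "Click Log in"],
    test_data={"email": "user@example.com", "password": "example-password"},
    expected_result="Dashboard is displayed",
)
login_case.record("Dashboard is displayed")
print(login_case.case_id, login_case.status)
```

Keeping the actual result and status separate from the expected result makes it obvious which fields are written before execution and which are filled in afterwards.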

How to Write Software Test Cases: Step-by-Step Guide

Writing a test case is a systematic process. You can follow these step-by-step guidelines to make sure no important details are overlooked.

This section combines practical how-to steps with high-level considerations at each stage:

1. Understand the Requirements

Start by thoroughly reviewing the software requirements or user stories related to the feature you want to test.

You need a clear understanding of what the software is supposed to do before you can test it. Identify the acceptance criteria and any business rules.

If the requirements are vague, seek clarification now. Identifying exactly what needs to be tested is the key to writing effective test cases.

Consider making a list of behaviors or conditions the feature should handle, including error conditions. These will turn into your test scenarios and cases.

2. Identify Test Scenarios

Based on the requirements, list out the test scenarios (or high-level situations) you need to validate.

A test scenario is a user story or use-case thread for which you will create one or more test cases.

For example, if you are testing a login feature, you might consider the following scenarios:

- Successful Login: Verify that a registered user can log in with valid credentials.

- Login with Incorrect Password: Test the login process with a registered username but an incorrect password.

- Login with Empty Fields: Attempt to log in without entering any credentials.

- Password Reset Flow: Verify the “Forgot Password” functionality.

Each scenario will likely translate to one or more test cases covering different inputs or paths.

Break down complex functionalities into smaller scenarios so that each is easier to test. This step ensures you have coverage for all expected behaviors and edge cases.

3. Define Test Case Details

For each scenario, you should write the individual test case. Start by giving the test case a unique ID and a descriptive title.

Next, write a brief description/objective for the test case, including one sentence explaining its purpose.

Then, list any preconditions required (e.g., user must be registered, or initial state of the system).

Now, outline the test steps in the exact sequence you need to execute. You should always translate the test scenario into concrete actions by writing detailed steps and conditions.

4. Specify Test Data and Inputs

Identify the data values or inputs needed to execute the test case. This may be partly done in the previous step, but it’s worth double-checking.

For instance, if a test step is “Enter a valid email and password,” determine what email/password will be used (e.g., [email protected] / Pa$$w0rd).

Reproducibility requires that test data is prepared and noted in the case. Specify the exact email and password to be used in the test case to avoid any ambiguity.

Additionally, if there are multiple sets of test data, list them clearly. For example:

- Test Data Set 1:

  - Email: [email protected]

  - Password: Pa$$w0rd1

- Test Data Set 2:

  - Email: [email protected]

  - Password: Pa$$w0rd2

If the test data needs to meet certain criteria (like an email format or a specific range of values), document those criteria.
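One way to keep test data explicit and checkable is to store each data set next to its documented criteria. The sketch below is a minimal illustration: the email addresses are placeholders, and both the email pattern and the 8-character password rule are assumed criteria, not requirements from any real system.

```python
import re

# Hypothetical test data sets; the email addresses are placeholders.
TEST_DATA_SETS = [
    {"name": "Test Data Set 1", "email": "first.user@example.com", "password": "Pa$$w0rd1"},
    {"name": "Test Data Set 2", "email": "second.user@example.com", "password": "Pa$$w0rd2"},
]

# Assumed criterion: a simple "local@domain.tld" shape.
EMAIL_PATTERN = re.compile(r"[^@\s]+@[^@\s]+\.[^@\s]+")


def check_data_set(data_set: dict) -> list:
    """Return the criteria a data set violates (empty list if none)."""
    problems = []
    if not EMAIL_PATTERN.fullmatch(data_set["email"]):
        problems.append("email does not match the documented format")
    if len(data_set["password"]) < 8:   # assumed minimum length
        problems.append("password is shorter than 8 characters")
    return problems


for data_set in TEST_DATA_SETS:
    print(data_set["name"], check_data_set(data_set) or "meets criteria")
```

Checking the data before a test run helps separate failures caused by bad test data from failures caused by the software itself.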

5. Include Expected Results

After the steps, write down the expected result for the test. This is a critical part of the test case. It should state exactly what you expect to see after performing the steps with the given data.

For example: “Expected Result: The system displays a welcome message with the user’s name and navigates to the dashboard screen.”

You have to be as specific as possible. If the test case involves multiple verification points, note the expected outcomes for those specific steps as well.
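To illustrate what “specific” can look like, the sketch below checks each verification point from the example expected result on its own. `LoginResult` and `fake_login` are hypothetical stand-ins for the system under test; a real test would drive the actual application.

```python
from dataclasses import dataclass


@dataclass
class LoginResult:
    """Hypothetical observable outcome of a login attempt."""
    message: str
    screen: str


def fake_login(name: str) -> LoginResult:
    # Stand-in for the system under test.
    return LoginResult(message=f"Welcome, {name}!", screen="dashboard")


def verify_login_result(result: LoginResult, name: str) -> list:
    """Check each verification point separately and return the ones that failed."""
    failures = []
    if "Welcome" not in result.message or name not in result.message:
        failures.append("welcome message with the user's name")
    if result.screen != "dashboard":
        failures.append("navigation to the dashboard screen")
    return failures


failures = verify_login_result(fake_login("Dana"), "Dana")
print("Pass" if not failures else f"Fail: {failures}")
```

Reporting each failed point by name tells whoever reads the result which part of the expected outcome was not met.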

6. Review and Refine the Test Case

Once you’ve drafted the test case, take a moment to review it thoroughly. Ensure you have made the test case clear, correct, and concise.

Remove any ambiguous language or unnecessary steps. Verify that you have specified all preconditions and test data.

A peer or another QA team member reviewing the test case is also a good practice. A fresh set of eyes can catch omissions or confusing instructions.

During the review, consider if you can make the test case more reusable or generalized for similar scenarios.

Finally, link the test case to the specific requirement or user story it covers to ensure traceability. This helps you track the relationship between test cases and their validation requirements.

Test Case Templates

Test case templates are essential tools for planning, executing, and tracking test cases. They organize the testing process by capturing all necessary information.

Below are different types of test case templates designed to meet various testing needs.

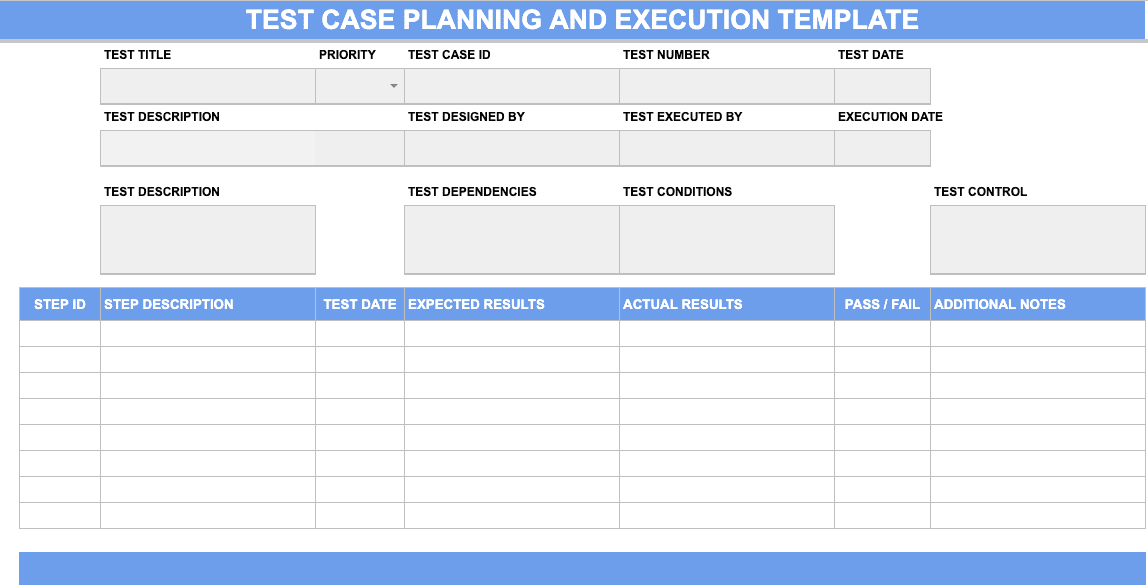

Test Case Planning and Execution Template

This template captures all major details of test scenarios, including Test Case ID, description, prerequisites, execution date, expected and actual results, and additional notes.

It ensures comprehensive tracking and can be used for any type of test.

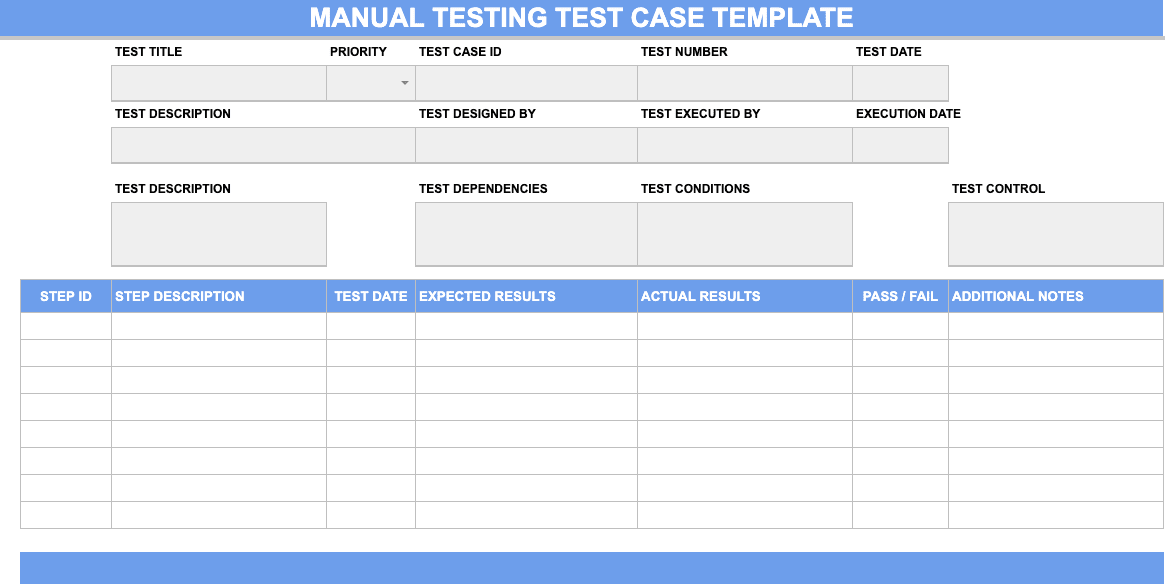

Manual Testing Test Case Template

This template documents test cases with IDs, descriptions, execution dates, priorities, and dependencies.

It helps analyze expected versus actual results to determine if the test case passed or failed, facilitating detailed inspection.

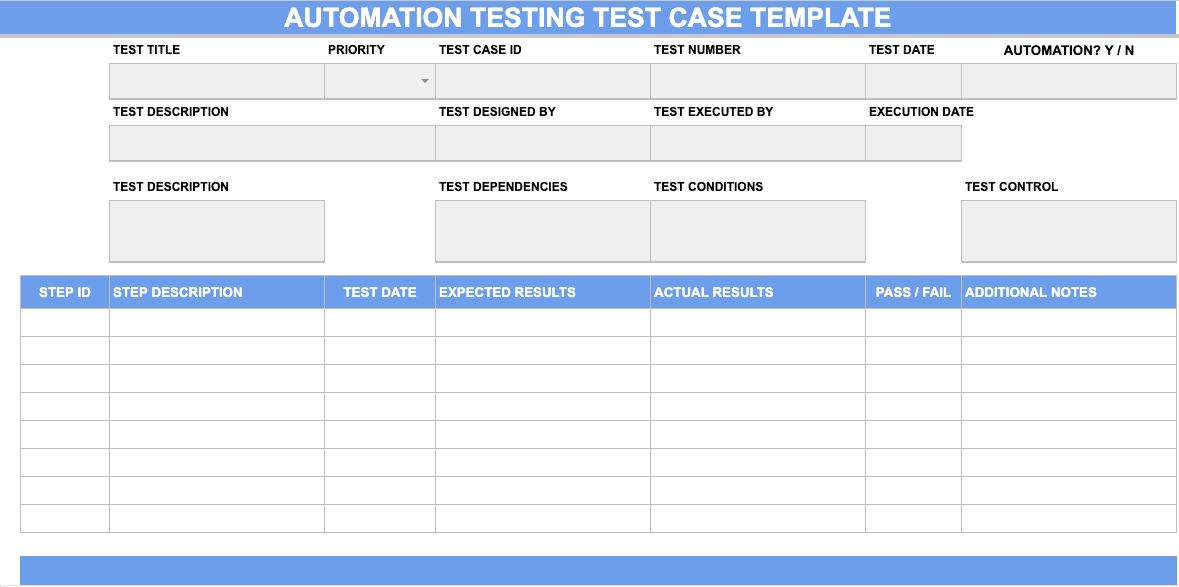

Automation Testing Test Case Template

This template tracks automated test cases, including Test Case ID, description, execution date, and test duration.

It helps analyze the health of the automation process and identify bottlenecks. It also indicates whether the test case is automated (Yes/No).

Types of Test Cases

There are various types of test cases, each targeting a different aspect of the application. The following are some common types of test cases and tips on how to write them effectively:

Functional Test Cases

Functional tests verify that the software’s features and functions work according to specifications. They are the most common type of test and usually the first to be written.

You should have test cases for every functionality described in the requirements, including the expected outputs for given inputs.

Write them in a straightforward action -> expected result format. For example, for a calculator app, functional tests would include:

- Adding two numbers: Clearly defined steps and expected outcomes.

- Division by zero handling: Steps and outcomes for this edge case.

You should consider both the normal cases and the edge cases for each function.
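The calculator example could be sketched as two small automated checks, one for the normal case and one for the edge case. The `add` and `divide` functions are hypothetical, and raising an error on division by zero is an assumed requirement.

```python
def add(a: float, b: float) -> float:
    return a + b


def divide(a: float, b: float) -> float:
    """Hypothetical calculator function; the spec is assumed to require an error when b is 0."""
    if b == 0:
        raise ZeroDivisionError("Cannot divide by zero")
    return a / b


def test_add_two_numbers() -> None:
    # Action: add 2 and 3 -> Expected result: 5
    assert add(2, 3) == 5


def test_divide_by_zero_is_rejected() -> None:
    # Action: divide 10 by 0 -> Expected result: a clear error, not a wrong number
    try:
        divide(10, 0)
    except ZeroDivisionError as error:
        assert str(error) == "Cannot divide by zero"
    else:
        raise AssertionError("divide(10, 0) should raise ZeroDivisionError")


test_add_two_numbers()
test_divide_by_zero_is_rejected()
print("functional checks passed")
```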

User Interface (UI) Test Cases

UI test cases focus on the visual and interactive elements of the software, i.e., what the user sees and interacts with.

They verify aspects like layout, labels, input fields, buttons, and user navigation flows. For example, a UI test case might be:

- Verify that clicking the ‘Submit’ button on the registration form highlights any empty required fields in red and displays an error message.

Steps would include entering data, clicking buttons, etc., and expected results would describe UI changes.

Performance Test Cases

Performance test cases verify that the software meets performance criteria, such as response time, throughput, and resource usage under certain conditions.

They often outline scenarios for load or stress testing rather than step-by-step user actions. Define the scenario and the metric to measure.

For example, a performance test case might be:

- Verify that the system can handle 1000 concurrent users with an average response time that stays within the target defined in the requirements.

The expected result should state the performance criteria.
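A performance scenario can be sketched in the same spirit: define the load, measure the metric, and compare it with a stated criterion. Everything below is an assumption for illustration. The request is simulated with a short sleep, the user count is scaled down so the sketch runs quickly, and the 0.5-second threshold is a placeholder for whatever your requirements specify.

```python
import time
from concurrent.futures import ThreadPoolExecutor
from statistics import mean

CONCURRENT_USERS = 50        # scaled down for a quick local run
MAX_AVERAGE_SECONDS = 0.5    # assumed threshold; use the value from your requirements


def handle_request(user_id: int) -> float:
    """Simulate one request and return how long it took, in seconds."""
    start = time.perf_counter()
    time.sleep(0.01)         # stand-in for real work done by the system under test
    return time.perf_counter() - start


def run_load(users: int) -> float:
    """Issue `users` simulated requests in parallel and return the average response time."""
    with ThreadPoolExecutor(max_workers=users) as pool:
        durations = list(pool.map(handle_request, range(users)))
    return mean(durations)


average = run_load(CONCURRENT_USERS)
print(f"average response time: {average:.3f}s")
print("Pass" if average <= MAX_AVERAGE_SECONDS else "Fail")
```

Real load tests usually rely on a dedicated load-testing tool, but the structure stays the same: scenario, metric, criterion.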

Integration Test Cases

Integration test cases verify that different modules or services in the software work together properly. They ensure that the interfaces between components do not have issues.

For example, a test case could be:

- Verify that after the order is placed on the website, the order details are correctly saved in the database, and an email confirmation is sent.

You should include details for every part of the integration as well as the expected results for all components involved.
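The order example above could be sketched with an in-memory database and a fake mailer, so that both sides of the integration are checked in one test. The `place_order` function, the table layout, and `FakeMailer` are hypothetical and exist only for this illustration.

```python
import sqlite3


class FakeMailer:
    """Test double that records emails instead of sending them."""

    def __init__(self) -> None:
        self.sent = []

    def send(self, to: str, subject: str) -> None:
        self.sent.append((to, subject))


def place_order(db: sqlite3.Connection, mailer: FakeMailer, email: str, item: str) -> int:
    """Hypothetical order flow: save the order, then send a confirmation."""
    cursor = db.execute("INSERT INTO orders (email, item) VALUES (?, ?)", (email, item))
    db.commit()
    order_id = cursor.lastrowid
    mailer.send(email, f"Order {order_id} confirmed")
    return order_id


db = sqlite3.connect(":memory:")
db.execute("CREATE TABLE orders (id INTEGER PRIMARY KEY, email TEXT, item TEXT)")
mailer = FakeMailer()

order_id = place_order(db, mailer, "buyer@example.com", "notebook")

# Expected result 1: the order details are saved in the database.
saved = db.execute("SELECT email, item FROM orders WHERE id = ?", (order_id,)).fetchone()
assert saved == ("buyer@example.com", "notebook")

# Expected result 2: exactly one confirmation email is sent to the buyer.
assert mailer.sent == [("buyer@example.com", f"Order {order_id} confirmed")]
print("integration checks passed")
```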

User Acceptance Test (UAT) Cases

UAT test cases are high-level scenarios from an end-user’s perspective to ensure the software meets business requirements and is acceptable to the client or end user.

They focus on real-world use cases and system behavior in business terms. Cover end-to-end flows a typical user would perform. For example:

- For purchasing a product using a credit card, steps include searching for a product, adding it to the cart, checking out, making a payment, and receiving an order confirmation.

You will need to describe actions in business terms, like “A user submits an order,” and expected results in the same way, like “The order has been placed successfully, and an order confirmation number has been generated.”

Ensure each UAT test case traces back to a high-level requirement or use case, covering main success scenarios and some alternate flows.

Specific Test Case Writing Techniques

Beyond the basic types of test cases, there are different techniques you can use based on your project’s needs and testing approach.

These techniques help you structure test cases for various situations. Here are some notable test case writing techniques and how to use them:

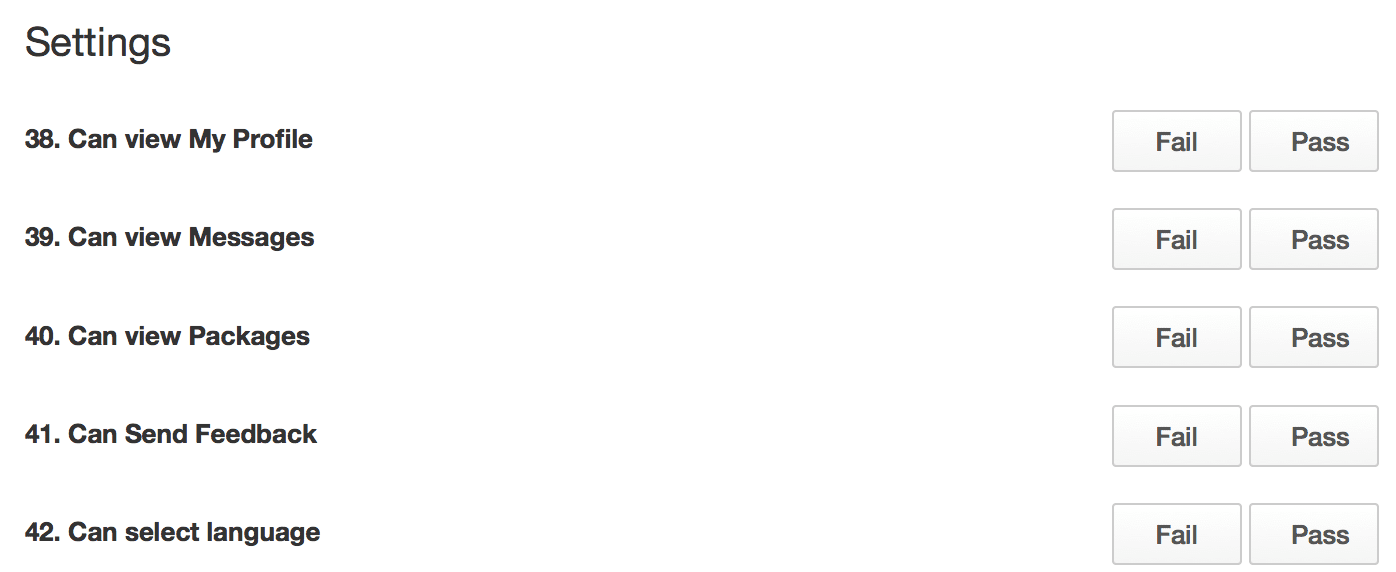

Structured Exploratory Test Cases

This technique combines exploratory testing with minimal structure to ensure coverage. Instead of detailed steps, create high-level tasks for testers to explore the app.

Here is how you can write them:

- Break the app into features or areas.

- List tasks or goals for each area (e.g., “Explore sending messages between two users, including attachments”).

Don’t script exact steps; just state the objective. Testers explore and mark it as pass or fail based on functionality.

This method helps find unexpected issues while keeping track of what was tested. It’s ideal for agile environments and early prototypes. These high-level tasks are often called “session-based test charters.”

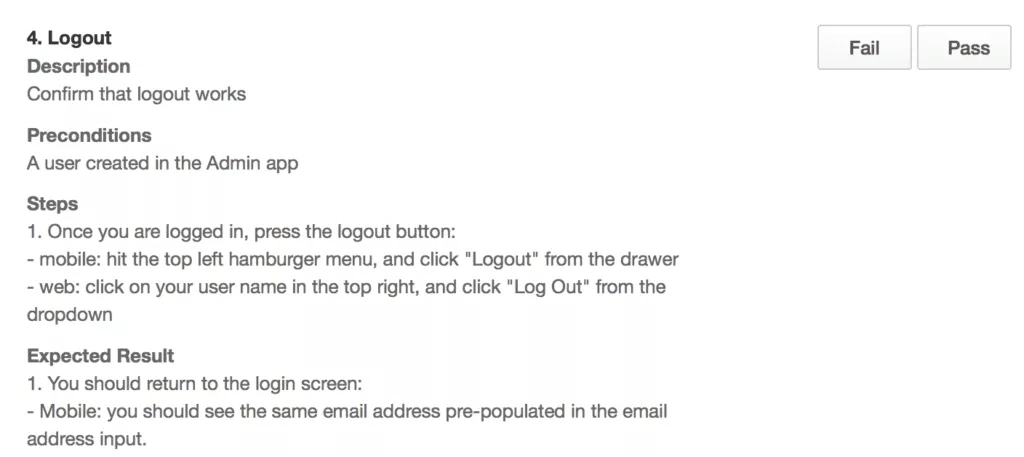

Classic Test Cases

The traditional test case format (with ID, preconditions, steps, expected results, etc.) can be called “classic” test cases. They are detail-heavy and leave little to interpretation.

Here is how you can write them:

- Follow the standard test case template for each scenario.

- Use this method for critical functionality that needs exact reproducibility and for handing off tests to less experienced testers.

- Keep each test case focused (try not to exceed 10-15 steps).

- Use action verbs and precise language in descriptions and steps.

Classic test cases are great for regression suites or formal testing phases requiring audit trails. Don’t add unnecessary steps or information that won’t help you execute the test.

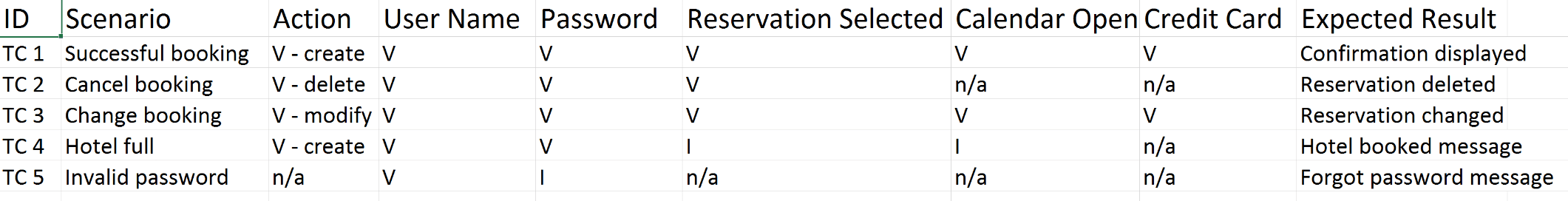

Valid/Invalid Test Cases

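This technique lays out combinations of valid and invalid inputs in a table, with one test scenario per row.

Here is how you can write them:

- Identify a function with multiple input conditions (e.g., a form with several fields).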

- Create a table where each row is a test scenario, and columns represent inputs or conditions (labeled as “Valid” or “Invalid”).

For example, for a login form:

- “Valid Email, Valid Password -> Expected: Login successful”

- “Valid Email, Invalid Password -> Expected: Error message”

- “Invalid Email, Valid Password -> Expected: Error message”

The matrix approach is compact and makes gaps easy to spot, because every combination of valid and invalid inputs gets its own row. It is useful for combinatorial testing, such as checking each field’s validation without having to write a separate detailed test case for every combination.
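For a small form, the rows of such a matrix can be generated rather than typed by hand. The sketch below assumes a two-field login form and a simple rule that login succeeds only when every field is valid. Both are assumptions for illustration.

```python
from itertools import product

FIELDS = ["Email", "Password"]
STATES = ["Valid", "Invalid"]


def expected_outcome(states: tuple) -> str:
    """Assumed rule: login succeeds only when every field is valid."""
    return "Login successful" if all(s == "Valid" for s in states) else "Error message"


# One row per combination of Valid/Invalid across all fields (2 fields -> 4 rows).
matrix = [
    {"inputs": dict(zip(FIELDS, states)), "expected": expected_outcome(states)}
    for states in product(STATES, repeat=len(FIELDS))
]

for row in matrix:
    inputs = ", ".join(f"{state} {name}" for name, state in row["inputs"].items())
    print(f"{inputs} -> Expected: {row['expected']}")
```

Generating the rows also surfaces the fourth combination, “Invalid Email, Invalid Password,” which is easy to forget when the table is written by hand.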

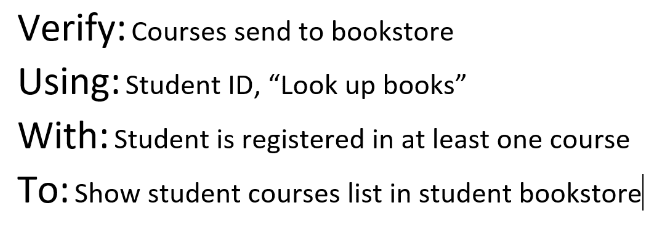

“Verify-Using-With-To” Test Cases

This format for writing test case descriptions clearly states the action, method, context, and expected result using the keywords Verify, Using, With, To.

Here is how you can write them:

- Start with “Verify” – what are you verifying?

- After “using”, describe the tool or input being used.

- After “with”, describe the conditions or context.

- After “to”, describe the expected result or goal.

For example: “Verify logging in using valid credentials with an existing user account to see the dashboard.”

This style helps communicate test cases at a high level without detailed steps. It can be used as both the title and description of a test case, ensuring consistency and clarity.
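If you want every title to follow this pattern, a tiny helper can assemble it from the four parts. The function name and parameter names are hypothetical. This is just one way to keep the wording uniform.

```python
def verify_using_with_to(verify: str, using: str, with_: str, to: str) -> str:
    """Assemble a test case title from the four keyword parts."""
    return f"Verify {verify} using {using} with {with_} to {to}."


title = verify_using_with_to(
    verify="logging in",
    using="valid credentials",
    with_="an existing user account",
    to="see the dashboard",
)
print(title)
```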

Testable Use Cases

Use cases describe how users interact with the system to achieve a goal, usually written by business analysts or product owners.

They are often narrative and not immediately in a form that’s testable. However, you can convert use cases into test cases by extracting the flows and making them more specific.

Here is how you can write them:

- Take a use case scenario (e.g., “User books a flight ticket”).

- Identify the main flow and alternate flows.

- Write a test case for each flow: the main success path becomes one test case, and alternate paths or error conditions become separate test cases.

- Maintain the user’s perspective but add specifics needed for testing.

- Keep the language technology-neutral (focus on what the user does and what the system does in response).

For example, a use case might say, “User enters payment details and the system validates payment”. A test case should specify what “payment details” means and the expected result of “validates payment”.

Essentially, turn each step of the use case into something verifiable.

Also, consider postconditions as part of the expected results. Split complex use cases into multiple smaller test cases focusing on particular sub-goals.

Best Practices for Writing Effective Test Cases

Writing test cases isn’t just about following a template; it’s about following best practices to improve their quality and usability.

Here are some tips to keep in mind:

Clarity and Conciseness

You should write test cases in a way that anyone can understand and execute them, including new team members. Your sentences should be simple and short.

Each step should have only one possible interpretation. If a test step is getting too long or complex, break it into smaller steps.

Also, keep your test case descriptions focused; don’t try to test multiple unrelated things in one test case.

You should review your test case by reading it aloud or imagining you are the tester without prior knowledge.

Coverage and Completeness

You should ensure your set of test cases covers all functional and non-functional requirements of the software.

This means writing test cases for every acceptance criterion, user story, and important use case, including edge cases and error conditions.

Aim for high test coverage – while 100% coverage of all possible scenarios may not be feasible, you should cover as much as reasonably possible. One way to check coverage is to map test cases to requirements using a traceability matrix.

Consistency (Formatting & Naming Conventions)

You should keep a consistent format for all test cases. Use a standardized template with the same fields in the same order for each test case, and follow uniform conventions for naming and numbering.

For example, decide on a format for Test Case IDs (like LOGIN_001, LOGIN_002) and use it throughout.

Similarly, use consistent terminology. For example, don’t call it “Expected Result” in one place and “Expected Outcome” in another.

If multiple testers are writing test cases, agree on guidelines for writing steps and denoting variable data. A cohesive test suite looks professional and is simpler to navigate.
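A naming convention is easier to keep when it can be checked automatically. The sketch below assumes the convention is an uppercase feature name, an underscore, and a three-digit number, as in LOGIN_001. Adjust the pattern to whatever your team agrees on.

```python
import re

# Assumed convention: uppercase feature name, underscore, three-digit number.
TEST_CASE_ID = re.compile(r"[A-Z]+_\d{3}")


def nonconforming_ids(case_ids: list) -> list:
    """Return the IDs that do not follow the agreed format."""
    return [case_id for case_id in case_ids if not TEST_CASE_ID.fullmatch(case_id)]


print(nonconforming_ids(["LOGIN_001", "LOGIN_002", "login-3", "TC-Login-001"]))
```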

Reusability

You should write test cases that can be reused in different contexts or for regression testing whenever possible.

If two features share a common sub-flow, write a generic test case for that sub-flow and reference it, rather than writing it twice.

For example, if multiple tests require a user to be logged in first, create a reusable test case for “Login” that other test cases can call upon.

Furthermore, design test steps modularly so that one test’s steps can be easily copied or adapted for another similar test.

Use the DRY (Don’t Repeat Yourself) principle in testing: if you find yourself writing the same steps or conditions in multiple test cases, reorganize them.
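In automated tests, the same idea can be sketched as a shared step that several tests call. `FakeSession` and `login` are hypothetical stand-ins for a real application session and login flow.

```python
class FakeSession:
    """Minimal stand-in for an application session (hypothetical)."""

    def __init__(self) -> None:
        self.user = None
        self.cart = []


def login(session: FakeSession, email: str) -> FakeSession:
    """Reusable 'Login' step that other test cases call instead of repeating it."""
    session.user = email
    return session


def test_view_profile() -> None:
    session = login(FakeSession(), "user@example.com")   # shared sub-flow
    assert session.user == "user@example.com"


def test_add_to_cart() -> None:
    session = login(FakeSession(), "user@example.com")   # same shared sub-flow
    session.cart.append("notebook")
    assert session.cart == ["notebook"]


test_view_profile()
test_add_to_cart()
print("both tests reused the login step")
```

If the login flow changes, only the shared step needs updating, not every test that depends on it.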

Linking Test Cases to Requirements

Traceability is crucial in test management. Each test case should link back to one or more requirements and vice versa.

This ensures that all requirements are tested and that you know exactly which test cases to update if a requirement changes.

Try to maintain a Requirements Traceability Matrix (RTM) that maps requirement IDs to test case IDs. When writing a test case, include the requirement ID or user story it covers in the test case documentation.

This practice helps during test planning and maintenance and provides visibility to stakeholders, showing which tests cover which business needs.

Good traceability ensures that nothing critical is untested and makes it easy to identify impacted tests when requirements change.
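A traceability matrix can be as simple as a mapping from test case IDs to the requirement IDs they cover. The sketch below uses made-up IDs and two hypothetical helpers to answer the two questions raised above: which requirements are untested, and which tests are affected by a change.

```python
# Hypothetical requirement IDs and test case IDs, for illustration only.
requirements = ["REQ-1", "REQ-2", "REQ-3"]
traceability = {
    "LOGIN_001": ["REQ-1"],
    "LOGIN_002": ["REQ-1", "REQ-2"],
}


def uncovered(requirements: list, traceability: dict) -> list:
    """Requirements that no test case links to."""
    covered = {req for reqs in traceability.values() for req in reqs}
    return [req for req in requirements if req not in covered]


def impacted_tests(requirement: str, traceability: dict) -> list:
    """Test cases to revisit when the given requirement changes."""
    return sorted(case for case, reqs in traceability.items() if requirement in reqs)


print(uncovered(requirements, traceability))      # ['REQ-3']
print(impacted_tests("REQ-1", traceability))      # ['LOGIN_001', 'LOGIN_002']
```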

Regular Updates and Maintenance

You should maintain test cases as the project evolves. Features might change, UIs might be redesigned, or requirements might be added or removed, so test cases must be updated accordingly.

Every time you complete a development iteration or sprint, review your test cases to ensure they remain current and valid.

If a test case is no longer applicable, archive or delete it to avoid confusion. If a workflow changes, update the steps and expected results.

Regular maintenance also involves improving test cases when you notice shortcomings. Whenever you find a gap in coverage, write a new test case so that the scenario is covered in the future.

Final Thoughts

Well-written test cases make all the difference in software quality. They help testers catch defects early, ensure consistent testing, and prevent costly production failures.

A well-defined test plan, accurate test data, and specific expected results contribute to efficient and repeatable testing. Without structured test cases, teams risk missing critical bugs, wasting time on unclear steps, and struggling with inconsistent testing.

Furthermore, updating test cases as software evolves prevents outdated tests from slowing teams down. A well-maintained test suite facilitates future testing and supports faster, more confident releases.

However, managing test cases at scale requires the right tools and expertise. That’s where Testlio can help.

With a network of expert testers and a powerful testing platform, Testlio enables teams to write, execute, and manage test cases efficiently, ensuring that every critical aspect of your application is tested.

Want to improve your test case strategy? Partner with Testlio and build better software with efficient testing.