Rethinking Crowdsourced Testing: Why Leaders Are Leaving the Gig Model Behind

If you are building or scaling digital products, chances are your QA process already includes gig testers. You post a task, someone across the globe picks it up, files a bug, and moves on.

Platforms like Test IO, Fiverr, and Upwork have made this model easy to adopt. It is fast, flexible, and affordable. That is exactly why so many teams default to it.

And adoption is rising fast. According to recent market analysis, the global crowdsourced testing market was valued at USD 1.8 billion in 2023 and is expected to reach USD 4.39 billion by 2032, growing at a 10.4% compound annual rate.
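The cited figures are internally consistent. Compounding the 2023 valuation at 10.4% a year over the nine years to 2032 reproduces the forecast (values in USD billion):

$$
1.8 \times (1 + 0.104)^{2032 - 2023} = 1.8 \times 1.104^{9} \approx 1.8 \times 2.436 \approx 4.39
$$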

That growth reflects how heavily product teams have leaned into gig-style testing. But here is the problem. Volume does not equal value.

Most gig testers lack product context, and there is no continuity or accountability. The result is a flood of shallow bug reports that slow down engineering instead of helping it. Teams spend more time sorting through noise than solving real problems.

This is the real trade-off: fast access to testers versus meaningful results. Gig testing gives you the first. But as product complexity and risk increase, it becomes clear that gig testing cannot deliver the second.

That is where managed crowdsourced testing comes in. It brings structure, consistency, and product knowledge to the same global talent pool.

This article explores why gig testing often falls short for serious, high-stakes products and how managed crowdsourced QA provides the structure, consistency, and scale that modern teams need to deliver with confidence.

Why Gig Testing Took Off

The rise of gig testing was not an accident. It solved a real pain point for fast-moving product teams: how to get wide device coverage and quick feedback without hiring a QA department.

You could spin up a test cycle in a few hours. Pay only for valid bugs. Get dozens of testers across devices and regions without worrying about contracts, training, or long onboarding cycles.

The gig testing model became the default play for startups and e-commerce sites that needed to launch fast.

The pay-per-bug model also made it budget-friendly. You did not pay for idle time. You paid for output. Cost control made gig testing an easy sell in boardrooms and budget reviews.

It often did the job for exploratory testing, localization checks, or basic visual sweeps, especially when the alternative was no testing at all.

But as products mature, they grow more complex, and expectations around quality, reliability, and user experience rise. That is where the cracks in the gig model start to show.

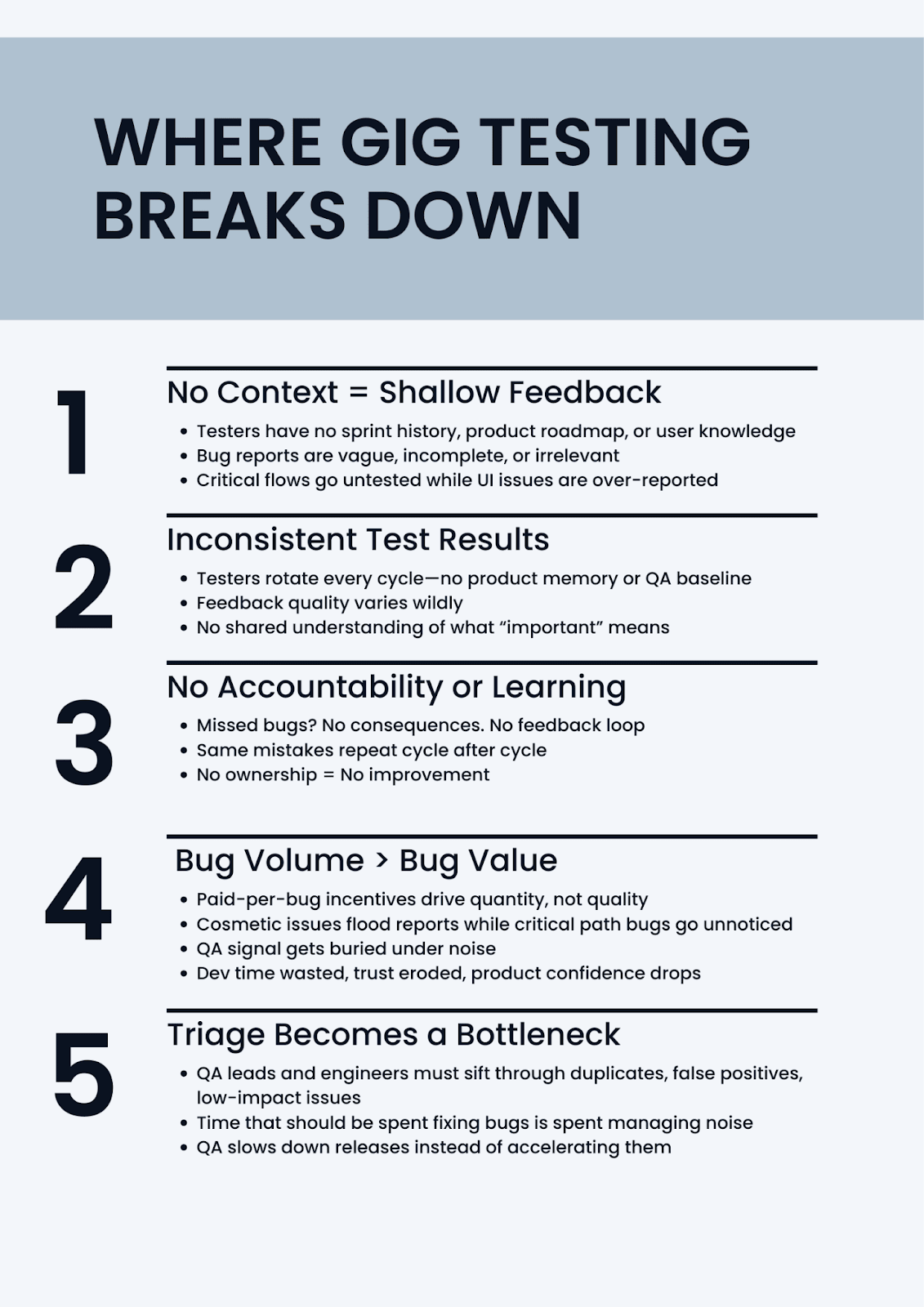

Where Gig Testing Breaks Down

Gig testing delivers on speed and reach. But its limits become obvious once teams move beyond quick UI checks or MVP launches.

The model isn’t built for accountability or continuity. It delivers bugs, but rarely insight. Here are some examples of where gig testing falls short:

No Context Means Shallow Feedback

Most gig testers are strangers to your product. They haven’t followed the sprint planning. They don’t know your users or business priorities. Every cycle is a cold start.

That lack of context shows up in the reports. You get generic bug descriptions, incomplete reproduction steps, and zero awareness of what matters to your team.

Testers might flag a misaligned icon but miss that your onboarding flow is broken on iOS 17. You are not getting quality assurance. You are getting task completion.

Inconsistency from Cycle to Cycle

Since testers rotate constantly, your QA results vary wildly. One cycle may be full of actionable bugs, while the next could be full of unusable noise.

There is no institutional memory, product intuition, or shared standard for what counts as “important.”

This inconsistency creates a major problem for engineering leaders. Without a reliable signal, you cannot track quality trends or understand what is improving over time.

Testing becomes reactive instead of strategic.

Low Accountability, No Feedback Loop

If a tester misses something critical, nothing happens. No one investigates why it was missed, and no one adjusts the process. The next cycle rolls in with a different set of testers, and the same issues repeat.

You cannot run a high-quality product organization with that kind of setup. Accountability is essential. Otherwise, bugs keep slipping through, and no one owns the outcome.

Bug Volume Over Bug Value

On most gig platforms, testers are paid per bug, which encourages volume. The more they submit, the more they earn. However, that incentive model works against product teams trying to reduce noise.

You end up with dozens of reports about typos, spacing, or obscure edge cases that will never affect real users. The signal-to-noise ratio collapses.

Logins, payments, onboarding, and other critical-path flows get buried under the noise. Developers lose time, QA leads lose visibility, and users lose trust.

Triage Becomes a Full-Time Job

Someone still has to review every bug, verify it, prioritize it, and send it to the right team. That someone is usually your QA lead or your developers.

Instead of accelerating releases, gig testing often slows them down. Engineering teams get overloaded with unclear reports, duplicates, and low-priority findings.

The handoff between QA and development becomes a bottleneck, not a bridge.
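To make that triage burden concrete, here is a minimal Python sketch of the first pass someone has to run over a batch of crowd reports: drop duplicates, then surface anything touching a critical-path flow ahead of cosmetic findings. The `BugReport` fields, the `CRITICAL_FLOWS` set, and the 1 to 4 severity scale are hypothetical illustrations, not any platform's actual schema.

```python
from dataclasses import dataclass

# Hypothetical set of critical-path flows; substitute your product's own.
CRITICAL_FLOWS = {"login", "payments", "onboarding"}


@dataclass(frozen=True)
class BugReport:
    """Hypothetical minimal bug report shape (not a real platform schema)."""
    title: str
    flow: str      # product area the report touches, e.g. "payments"
    severity: int  # assumed scale: 1 = cosmetic ... 4 = blocker


def triage(reports: list[BugReport]) -> list[BugReport]:
    """Drop duplicates, then order critical-path, high-severity reports first."""
    seen: set[tuple[str, str]] = set()
    unique: list[BugReport] = []
    for report in reports:
        # Naive duplicate rule: same normalized title within the same flow.
        key = (report.title.strip().lower(), report.flow)
        if key not in seen:
            seen.add(key)
            unique.append(report)
    # Critical flows first, then descending severity. sorted() is stable,
    # so ties keep their original submission order.
    return sorted(unique, key=lambda r: (r.flow not in CRITICAL_FLOWS, -r.severity))


if __name__ == "__main__":
    batch = [
        BugReport("Typo in footer", "marketing", 1),
        BugReport("Checkout fails after SCA step 2", "payments", 4),
        BugReport("typo in footer ", "marketing", 1),
        BugReport("Icon misaligned on settings page", "settings", 1),
        BugReport("Onboarding stalls on iOS 17", "onboarding", 3),
    ]
    for report in triage(batch):
        print(report.severity, report.flow, report.title)
```

The duplicate rule here only catches near-identical titles. Real duplicates are usually reworded by different testers, which is a large part of why triage stays manual and time-consuming.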

What Managed Crowdsourced Testing Does Differently

Gig testing throws people at the problem. Managed crowdsourced testing builds a process around it.

Testing differs not just in who does it, but also in how it is done. Managed crowdsourced QA platforms still give you access to a global crowd, but that crowd is curated, coached, and coordinated.

You are not buying labor. You are partnering with a team that understands your product. Let’s discuss what managed crowdsourced testing does differently.

Vetted Testers Who Know Your Domain

Managed crowdsourced QA providers do not accept just anyone. Testers are screened, trained, and often aligned with specific domains like fintech, healthcare, or e-commerce.

You are not assigning tasks to random freelancers. You are working with professionals who know what a broken 3DS redirect means or why a minor UI change could violate accessibility standards.

That domain knowledge changes everything. It produces bug reports that go beyond “button does not work” and into “the button triggers an error when the Stripe SCA flow is interrupted after step 2.” That kind of precision saves hours for your triage and dev teams.

Structured Plans and Dedicated Test Leads

Instead of sending tasks into the void, managed crowdsourced testing teams work from a defined test plan. You have a test lead, a schedule, coverage areas, and regression matrices. This structure gives you predictable output, not surprise feedback dumped in a Slack channel at midnight.

Dedicated leads also ensure knowledge carryover from one cycle to the next. They know your product roadmap, how your components interact, and where past bugs have occurred.

This context leads to more intelligent testing and better issue detection over time.
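A regression matrix can be as simple as a grid of features against platforms. The Python sketch below is a hypothetical illustration, not any provider's actual tooling: each feature/platform cell records its last result and how many bugs it produced in earlier cycles, so a lead can list cells that have never been tested and rank known hot spots for retesting. The feature names, platforms, and `Cell` fields are assumptions.

```python
from dataclasses import dataclass
from typing import Optional

# Hypothetical coverage areas and platforms; substitute your own.
FEATURES = ["login", "checkout", "onboarding"]
PLATFORMS = ["iOS 17", "Android 14", "Web"]


@dataclass
class Cell:
    """One feature/platform cell in a hypothetical regression matrix."""
    last_result: Optional[str] = None  # "pass", "fail", or None if never run
    past_bugs: int = 0                 # bugs found in this cell in earlier cycles


def build_matrix() -> dict[tuple[str, str], Cell]:
    """Create an empty matrix covering every feature on every platform."""
    return {(f, p): Cell() for f in FEATURES for p in PLATFORMS}


def coverage_gaps(matrix: dict[tuple[str, str], Cell]) -> list[tuple[str, str]]:
    """Cells that have never been tested."""
    return [key for key, cell in matrix.items() if cell.last_result is None]


def hot_spots(matrix: dict[tuple[str, str], Cell]) -> list[tuple[str, str]]:
    """Cells with a bug history, most bug-prone first."""
    buggy = [key for key, cell in matrix.items() if cell.past_bugs > 0]
    return sorted(buggy, key=lambda key: matrix[key].past_bugs, reverse=True)


if __name__ == "__main__":
    matrix = build_matrix()
    matrix[("checkout", "iOS 17")] = Cell(last_result="fail", past_bugs=3)
    matrix[("login", "Web")] = Cell(last_result="pass", past_bugs=1)
    print("Never tested:", len(coverage_gaps(matrix)), "cells")
    print("Retest first:", hot_spots(matrix))
```

Keeping this history from cycle to cycle is what lets a dedicated lead point testers at the riskiest cells first. A gig model that starts from scratch each cycle has no such record to draw on.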

Continuity That Compounds Product Understanding

In a managed model, testers often stay with your product for multiple cycles. They build familiarity with your workflows, users, and edge cases. That continuity leads to better bug reports.

Instead of basic UI complaints, you start getting bugs that reveal hidden logic issues, regression risks, or user experience gaps you had not considered.

Because managed testers understand how a feature should work, they can flag issues that are invisible to one-time gig testers.

This level of feedback does not just help QA. It helps product and engineering teams make better decisions, faster.

Real-World Use in High-Risk Industries

Managed crowdsourced QA is not theoretical. It is being used today in sectors where failure is not an option.

- Fintech platforms rely on managed testers to validate KYC flows, multi-factor authentication, and edge-case payment handling.

- Healthcare apps use structured QA to verify patient onboarding, consent management, and secure data workflows.

- E-commerce teams depend on it for complex checkout scenarios, cross-device behavior, and accessibility compliance.

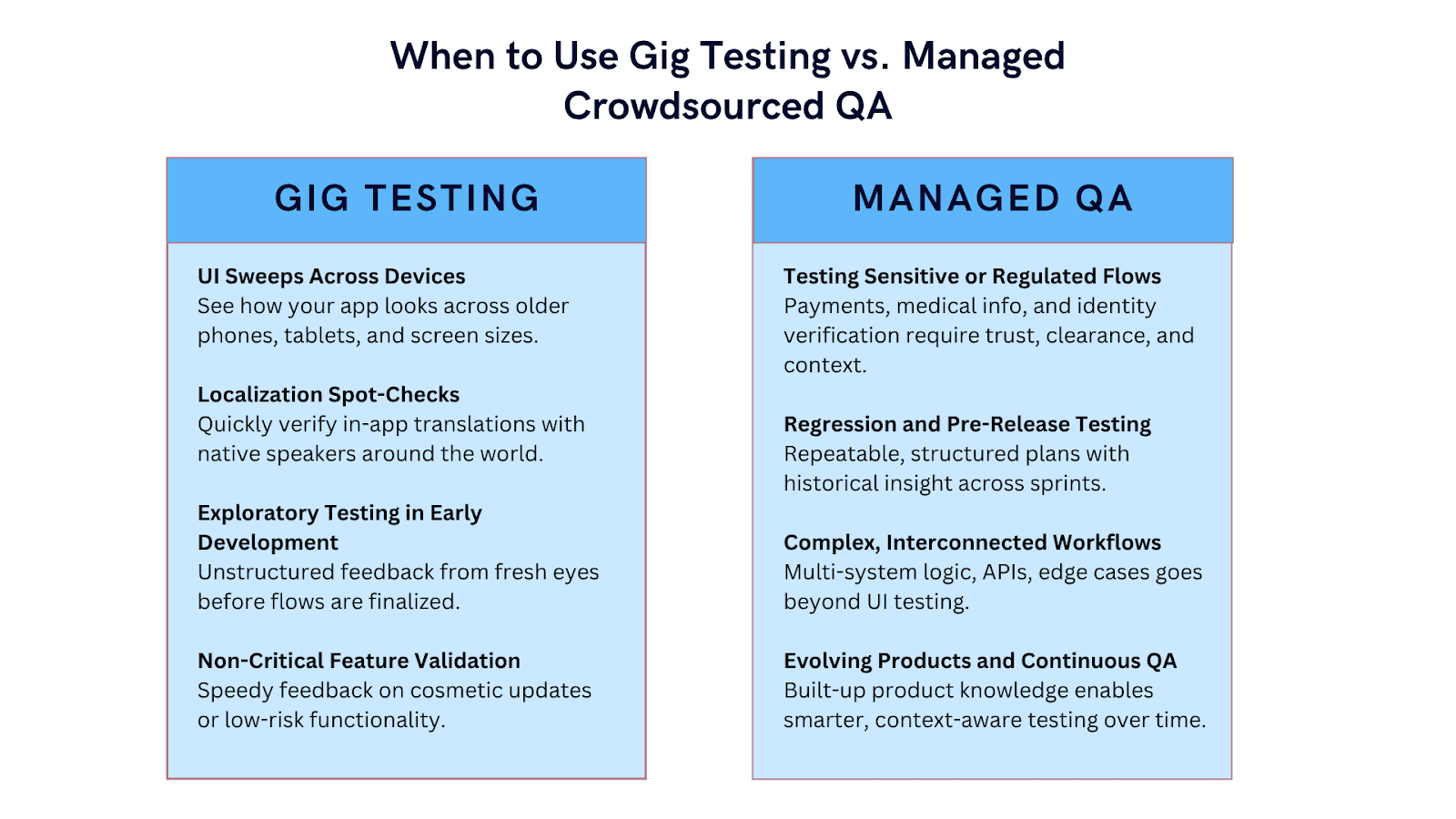

Use Case Breakdown

Gig testing is not inherently bad. It is just built for a different kind of problem.

If your product is in early development or you need quick feedback across dozens of devices, it can deliver value.

But once your product has real users, revenue, or regulatory risk, the quality bar goes way up. Here is where each model makes sense, and where one outperforms the other.

When Gig Testing Makes Sense

Gig testers are best used for quick, lightweight validation tasks. If you are launching early or testing surface-level functionality, their speed and global device access can help.

Common gig-use cases:

- UI Sweeps Across Devices: Covers visual checks across a wide range of real devices, including older models and uncommon screen sizes.

- Localization and Language Spot-Checks: Provides quick validation of in-app translations and layout issues across different regions and languages.

- Exploratory Testing in Early Development: Surfaces usability issues and functional blockers during early product stages, before formal test cases are in place.

- Validation of Non-Critical Features: Offers fast feedback for minor updates, cosmetic changes, or low-impact feature rollouts where structured QA is unnecessary.

When Managed Crowdsourced QA Is Non-Negotiable

Structure is essential when dealing with sensitive data, user trust, or complex product logic. Managed crowdsourced QA delivers depth, continuity, and accountability.

Scenarios that demand managed QA:

- Testing flows involving sensitive data or compliance risks: This includes anything related to payments, medical information, or personal identity. Gig testers do not have the context or clearance for this kind of work.

- Regression and pre-release testing: Every release introduces risk. Managed teams follow structured plans, keep historical bug records, and know where to look based on previous cycles.

- Complex, multi-step user flows: If your product spans multiple systems, platforms, or conditions, you need testers who understand logic, state management, and dependencies, not just visuals.

- Products that evolve over time: Long-term QA partners build product knowledge and test smarter with every cycle. Gig models reset every time. Managed models improve every time.

More Testers ≠ Better Quality

When teams scale, they often assume that more testers mean better coverage. But more labor without structure just creates more noise.

Bug reports pile up, triage slows down, and engineering teams lose focus. The QA process becomes bloated instead of better.

Gig testing plays into this illusion. It promises flexibility and volume, but in practice it lacks continuity, domain expertise, and accountability.

Managed crowdsourced testing flips that model. It brings structure to scale. Instead of cycling through freelancers, you work with dedicated test leads, vetted testers, and a team that builds product knowledge over time.

They do not just file bugs. They understand how your users behave, what your business cares about, and how your releases evolve.

This is what platforms like Testlio are built for. Testlio’s managed crowdsourced QA model gives product teams access to a global network of expert testers, matched by domain, guided by strategy, and supported by dedicated leads.

Whether you are testing complex payment flows, securing healthcare data, or preparing for a global launch, Testlio's managed QA helps you get beyond bug counts and toward actual product confidence. Talk to a member of our team to learn more.