AI testing

De-risk every AI release before it goes live

Reduce hallucinations, biases, and vulnerabilities in your AI-powered apps and features. Testlio’s managed crowdsourced model embeds vetted experts into your AI testing workflows so you can deliver safe, reliable experiences for every user, in every market.

Test global releases with real-world complexities

150+ countries

100+ languages

600k+ devices

Your AI’s performance defines your product experience

When AI gets it wrong, it can mislead users, show bias, or create unsafe outputs that harm your brand. Testlio’s crowdsourced AI testing adds the scale, expertise, and real-world diversity your internal teams need to uncover hidden issues before release.

Protect velocity

Detect and fix critical AI issues without slowing releases or burning out your team.

Mitigate risk

Validate your system for hallucinations, bias, outdated or toxic outputs, and model drift over time.

Stay compliant

Meet global regulations, privacy standards, and ethical guidelines in key target markets.

Launch confidently

Ensure consistent performance across languages, cultures, and user contexts.

Test at scale

Simulate adversarial prompts, misuse scenarios, and multi-agent interactions in real conditions.

Everything your AI needs to perform everywhere

We don’t just check if your AI works. We validate how it behaves. From core functionality to those rare edge cases that only appear in the real world, Testlio brings a human-in-the-loop approach to AI testing to ensure your product wins in every market.

Our AI testing services include:

Red teaming

Simulate adversarial scenarios, harmful prompts, and misuse cases to identify and mitigate potential vulnerabilities proactively.

Bias testing

Identify and mitigate any pre-existing biases that may impact the accuracy and objectivity of the app’s output.

Stability testing

Test your model’s ability to handle unexpected or unusual inputs and identify any weaknesses that could lead to hallucination.

Context retention

Verify the model’s ability to understand the user’s intent by maintaining context and using information from earlier in the conversation.

Generative AI testing

Evaluate AI-generated outputs for accuracy, factual grounding, functional correctness, style/tone consistency, safety, and cultural fit.

RAG testing

Validate ingestion pipelines, retrieval relevance, grounding accuracy, and source freshness in retrieval-augmented generation (RAG) systems.

AI agents & MCP server testing

Evaluate planning, action execution, and coordination in single- or multi-agent systems, both within and beyond Model Context Protocol (MCP) environments.

Predictive model testing

Verify accuracy, fairness, and explainability in models making predictions or automated decisions, regardless of data type.

Recommender testing

Validate diversity, novelty, and fairness in recommendation algorithms to prevent filter bubbles and ensure balanced exposure.
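As a rough illustration of what a bias or fairness check can look like in practice, the sketch below compares accuracy across user slices and reports the largest gap. It is a minimal example under stated assumptions, not Testlio's method: the function names, the slice labels ("en", "es"), and the sample records are all hypothetical.

```python
from collections import defaultdict

def accuracy_by_slice(records):
    """Return {slice_name: accuracy} for (slice, expected, actual) records."""
    totals = defaultdict(int)
    correct = defaultdict(int)
    for slice_name, expected, actual in records:
        totals[slice_name] += 1
        if expected == actual:
            correct[slice_name] += 1
    return {name: correct[name] / totals[name] for name in totals}

def max_accuracy_gap(per_slice):
    """Largest difference in accuracy between any two slices."""
    values = list(per_slice.values())
    return max(values) - min(values)

# Hypothetical graded results: (language slice, expected label, model label)
records = [
    ("en", "approve", "approve"),
    ("en", "deny", "deny"),
    ("es", "approve", "deny"),
    ("es", "deny", "deny"),
]
per_slice = accuracy_by_slice(records)
gap = max_accuracy_gap(per_slice)
```

A large gap between slices is a signal for human reviewers to investigate, not a verdict on its own. What counts as an acceptable gap depends on the product and market.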

Experts who know how AI fails

Our testers understand prompt manipulation, multi-agent coordination, and the unpredictable ways AI can behave. They replicate real-world conditions, challenge assumptions, and push your models to their limits so you can launch with confidence across markets.

From AI to every customer touchpoint

Validate the broader product experience across devices, regions, platforms, and languages. Our testers cover accessibility, localization, regression, usability, and more to ensure your product works everywhere your customers need it to.

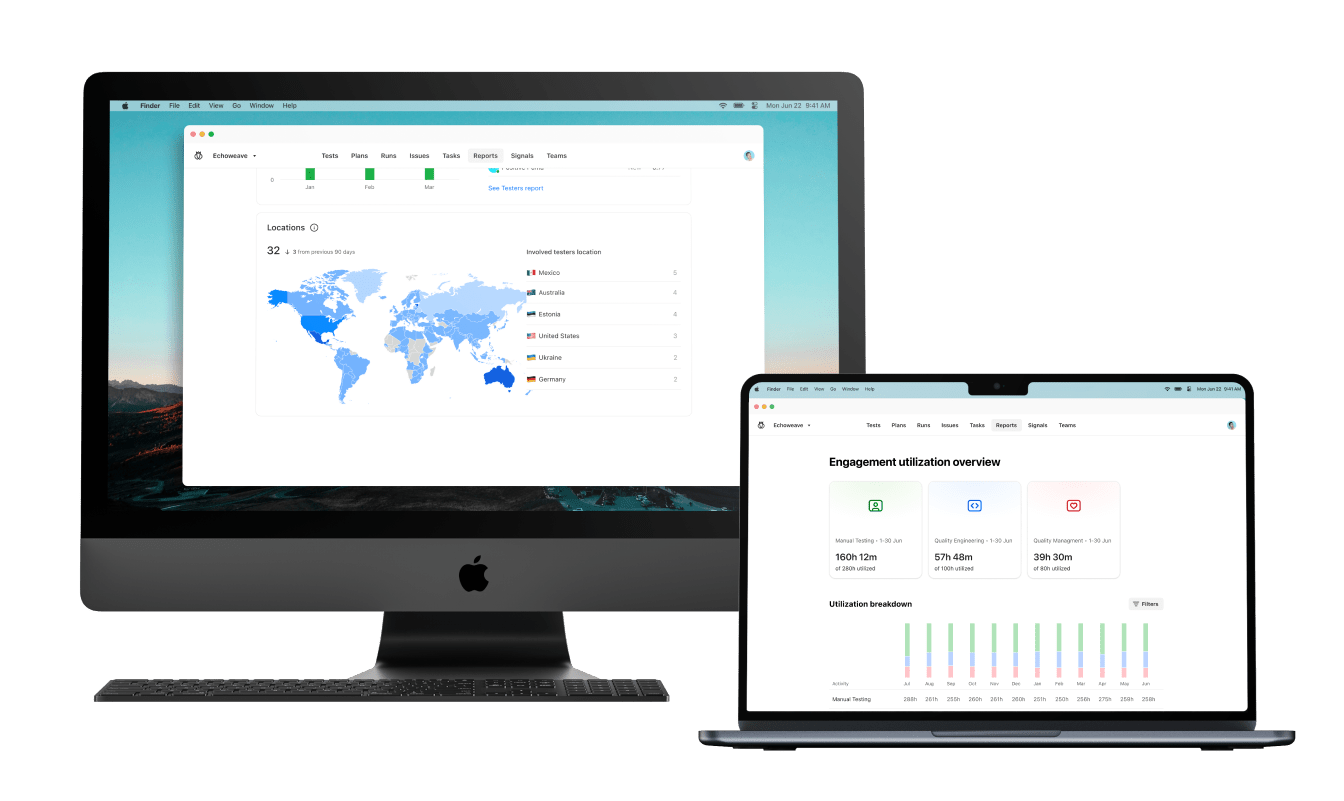

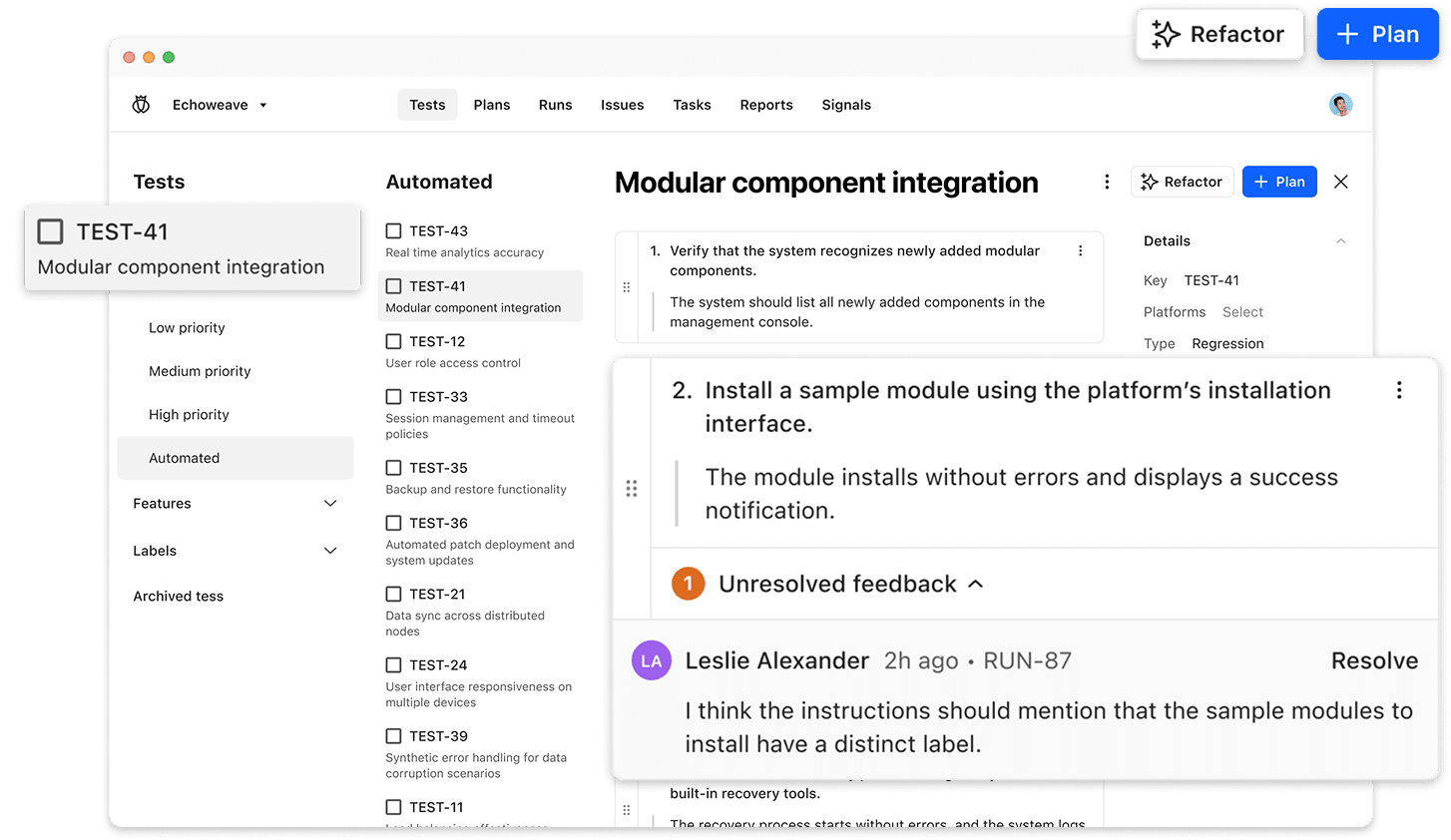

A platform designed for quality at scale

Powered by LeoAI Engine™, Testlio’s platform brings intelligence to every stage of AI QA. It automatically matches the right testers, orchestrates the entire testing workflow, and surfaces insights in real time. You see exactly who tested what, where issues occurred, and why. With built-in integrations with tools like Jira and TestRail, your teams move faster while staying fully in control.

Real-world AI validation without trade-offs

Get the scale of crowdsourced testing with the control needed for high-stakes AI releases. Testlio embeds domain specialists in real-world settings to uncover weaknesses, validate fixes, and ensure your models work everywhere you launch.

End-to-end pipeline integration

We plug into your pre- and post-release delivery workflows to run regression packs, behavioral checks, and continuous drift monitoring.

Human-in-the-Loop (HITL) testing

Internal teams design test strategies, rubrics, and adversarial scenarios, while our community delivers market-aligned validation across languages, cultures, devices, and contexts.

Documentation-driven execution

Standardized rubrics, red teaming charters, bias/fairness slice definitions, RAG grounding checklists, and agentic plan–act–check scenarios ensure consistent, repeatable testing.

Unmatched domain expertise

Domain experts (e.g., clinicians, financial auditors, legal specialists) co-design test cases and validate outputs to meet strict compliance requirements for regulated industries.

Scale as you grow

Whether you are rolling out new models, adding modalities, or expanding to new regions, we adjust coverage and resources to match your roadmap.

Security and compliance first

We are ISO/IEC 27001:2022 certified and follow strict protocols for testing safely, even with sensitive data and regulated AI workflows.
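To make the continuous drift monitoring mentioned under end-to-end pipeline integration more concrete, here is a minimal sketch that reruns a fixed regression pack and flags a drop in pass rate. The function names, the 5% tolerance, and the sample results are illustrative assumptions, not a description of Testlio's platform.

```python
def pass_rate(results):
    """Fraction of regression-pack checks that passed (results is a list of bools)."""
    return sum(results) / len(results)

def drift_detected(baseline, current, tolerance=0.05):
    """True when the current pass rate falls more than `tolerance` below baseline."""
    return pass_rate(baseline) - pass_rate(current) > tolerance

# Hypothetical outcomes of the same 20-check regression pack on two dates
baseline_run = [True] * 19 + [False]      # 19 of 20 checks pass
current_run = [True] * 17 + [False] * 3   # 17 of 20 checks pass
alert = drift_detected(baseline_run, current_run)
```

In a real pipeline, a check like this would typically run on a schedule after release, with failing cases routed to human testers for review.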

Case studies and resources

When AI Fails: How to Protect People, Brands, and Trust in 2026

In this session, panelists Jason Arbon, Jonathon Wright, and Hemraj Bedassee, hosted by Summer Weisberg, discuss how teams can build AI testing frameworks they can trust.

The EU AI Act Is Here. Compliance Starts with Testing.

With the EU AI Act now in force, compliance is no longer about aspirational ethics or last-minute checklists; it’s about operationalizing quality assurance at every stage of your AI lifecycle.

Human-in-the-Loop at Scale: The Real Test of Responsible AI

AI failures rarely look like crashes. More often, they appear as confident but incorrect answers, subtle bias, or culturally inappropriate responses.

Ready to ship with speed and absolute confidence?

Frequently Asked Questions

We specialize in managed crowdsourced AI testing. Vetted AI domain experts are embedded into your QA workflows to test in real-world conditions, at scale. We manage everything from scoping to execution to reporting, so your team can reduce risk, maintain release velocity, and confidently ship AI features to every market you serve.

Pricing is based on test type, complexity, and service level. There are no per-seat licenses. You pay for structured execution, vetted testers, platform access, and ongoing client services.

We test LLMs, multimodal models, recommender engines, predictive systems, RAG pipelines, and agentic AI. Our coverage includes bias detection, adversarial prompt testing, hallucination prevention, model drift monitoring, and cultural fit validation.

It depends on product complexity, testing type, and scope. We involve our delivery teams early to define goals, align on architecture and risk, onboard testers, and then begin initial cycles as soon as scoping and onboarding are complete.

Yes, we are ISO/IEC 27001:2022 certified and operate under strict security protocols for regulated industries. Our testers work within secure environments, validating AI models against the EU AI Act, GDPR, and other global regulations. For more information, visit our Trust Center.

We only work with trained and highly vetted QA professionals. For AI engagements, we match testers based on domain expertise, skills and certifications, market familiarity, and language skills to replicate your real user base.

Yes. Our platform integrates with Jira, TestRail, Slack, and other tools you use for AI development and QA. You can manage cycles, track issues, and collaborate without disrupting your workflows.

LeoAI Engine™, the proprietary intelligence layer that powers our platform, orchestrates the entire testing process, from test runs and work opportunities to recruitment, application, and results. By automatically surfacing the best-fit testers for each engagement, streamlining onboarding, and learning from historical project data, it removes friction and enhances precision at every step. For AI QA leads, this means fewer manual tasks, more strategic oversight, and dramatically improved speed and scale.