Volume Testing Explained: A Complete Guide

Volume testing is a type of software performance testing that evaluates a system’s capacity to process massive data volumes within a specific timeframe. It identifies bottlenecks, crashes, or inefficiencies under high data loads, ensuring performance, accuracy, and stability.

In today’s data-centric world, volume testing is crucial for systems that handle large datasets. Applications such as financial batch payment processing or cloud storage must function smoothly, reliably, and accurately as data grows.

Inadequate testing can lead to application crashes, data loss, and poor user satisfaction. Volume testing mimics real-world scenarios by submitting a large dataset to the system to measure response time, memory consumption, and data integrity under very high loads.

Comprehensive volume testing enables companies to make software more scalable, increase reliability, and provide a flawless user experience.

What is Volume Testing?

Volume testing is a crucial subset of performance testing that evaluates how a system handles large amounts of data over time. Unlike load testing, which measures system behavior under expected user traffic, or stress testing, which pushes the system beyond its limits to identify breaking points, volume testing specifically examines the impact of high data volumes on performance, stability, and response times.

Example: An e-commerce platform experiences a surge in data storage when processing millions of customer orders during a holiday sale. Volume testing ensures the system can handle this influx without slowdowns or crashes.

There are two key aspects of volume testing:

- Data volume testing: Evaluates how well a system processes and manages massive datasets.

- Transaction volume testing: Assesses system performance when processing a high number of simultaneous transactions.
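The difference between the two can be sketched with Python’s built-in `sqlite3` module. This is a minimal illustration under stated assumptions: an in-memory SQLite database stands in for the system under test, the `orders` table and row counts are hypothetical, and the "transactions" are committed one after another from a single connection rather than issued simultaneously by many clients, as a real transaction volume test would do.

```python
import sqlite3
import time


def make_db() -> sqlite3.Connection:
    """Create an in-memory database with a hypothetical orders table."""
    conn = sqlite3.connect(":memory:")
    conn.execute(
        "CREATE TABLE orders (id INTEGER PRIMARY KEY, customer_id INTEGER, amount REAL)"
    )
    return conn


def data_volume_run(conn: sqlite3.Connection, rows: int) -> float:
    """Data volume: load many rows in one bulk transaction; return elapsed seconds."""
    start = time.perf_counter()
    with conn:  # commits once, after the whole batch has been inserted
        conn.executemany(
            "INSERT INTO orders (customer_id, amount) VALUES (?, ?)",
            ((i % 1000, i * 0.01) for i in range(rows)),
        )
    return time.perf_counter() - start


def transaction_volume_run(conn: sqlite3.Connection, transactions: int) -> float:
    """Transaction volume: commit many small transactions; return elapsed seconds."""
    start = time.perf_counter()
    for i in range(transactions):
        with conn:  # each iteration is its own transaction and commit
            conn.execute(
                "INSERT INTO orders (customer_id, amount) VALUES (?, ?)",
                (i % 1000, 1.0),
            )
    return time.perf_counter() - start


if __name__ == "__main__":
    db = make_db()
    print(f"bulk load, 100,000 rows:      {data_volume_run(db, 100_000):.3f} s")
    print(f"10,000 separate transactions: {transaction_volume_run(db, 10_000):.3f} s")
```

The bulk load exercises how the system stores and manages a large dataset, while the per-row commits stress the transaction path; the two often fail in different places.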

Why Volume Testing Matters

Volume testing is a critical way to verify that a system can handle large amounts of data without performance degradation. It measures the impact of substantial data volumes on databases, servers, and applications to ensure that critical components remain stable and efficient under load.

Testing uncovers performance bottlenecks, variations in response times, and potential failure due to excessive data buildup. This helps organizations identify scalability boundaries, optimize resources, and implement necessary modifications to support smooth operation.

Without volume testing, applications can suffer abrupt slowdowns, data corruption, or crashes when subjected to heavy data surges. By simulating worst-case situations in a test environment, volume testing helps mitigate risks, making applications reliable and efficient in real-world usage.

Moreover, volume testing supports long-term sustainability by revealing vulnerabilities that are not evident under regular operating conditions, allowing companies to maintain high performance as data accumulates. This helps improve customer satisfaction and reduce the operational risk caused by data overload.

How Volume Testing Works

Volume testing assesses how well a system performs when dealing with large amounts of data. It involves injecting massive datasets into the system to analyze its efficiency, stability, and reliability under heavy loads.

The goal is to ensure that the system continues to function smoothly without delays, errors, or crashes as data volume increases.
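A minimal sketch of this loop in Python, assuming an in-memory SQLite database as a stand-in for the real system and a hypothetical `events` table and query: load progressively larger datasets and time the same representative query at each volume.

```python
import sqlite3
import time


def measure_query_latency(row_count: int) -> float:
    """Load row_count synthetic rows, then time one representative query (seconds)."""
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE events (id INTEGER PRIMARY KEY, user_id INTEGER, payload TEXT)")
    with conn:  # inject the dataset in a single transaction
        conn.executemany(
            "INSERT INTO events (user_id, payload) VALUES (?, ?)",
            ((i % 5000, f"event-{i}") for i in range(row_count)),
        )
    start = time.perf_counter()
    # Hypothetical representative query: per-user aggregation over the whole table.
    conn.execute("SELECT user_id, COUNT(*) FROM events GROUP BY user_id").fetchall()
    elapsed = time.perf_counter() - start
    conn.close()
    return elapsed


if __name__ == "__main__":
    for volume in (10_000, 50_000, 250_000):
        print(f"{volume:>9,} rows -> {measure_query_latency(volume) * 1000:.1f} ms")
```

The trend across volumes is what matters: against a real system you would send the same query through its actual API or database driver and compare the curve with your response-time targets.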

During volume testing, several key factors are measured:

- Response time: Measures how quickly the system responds when processing large volumes of data. An optimized system should keep response times acceptable as data loads increase; a significant slowdown signals a performance problem that needs to be resolved.

- Data integrity: High volumes of data should not compromise accuracy or consistency. The system must maintain all data intact, uncorrupted, and retrievable, even in adverse conditions.

- System behavior: The system’s stability is tested under different loads to see if it continues functioning normally. This helps determine if it slows down, uses up too many resources, encounters errors, or crashes when handling large amounts of data.

- Failure points: Identifying weak points in the system helps prevent problems before they impact users. Volume testing exposes these weak points so engineers can fix them before deployment.
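For the data integrity factor, one common approach is to fingerprint the data before loading it and again after reading it back. The sketch below assumes a hypothetical `payments` table in an in-memory SQLite database and uses a row count plus a SHA-256 digest over the rows in sorted order, so the result does not depend on the order in which the database returns them.

```python
import hashlib
import sqlite3


def fingerprint(rows) -> tuple:
    """Return (row count, SHA-256 hex digest) computed over rows in sorted order."""
    digest = hashlib.sha256()
    count = 0
    for row in sorted(rows):  # sorting makes the result independent of retrieval order
        digest.update(repr(tuple(row)).encode("utf-8"))
        count += 1
    return count, digest.hexdigest()


def load_and_verify(source_rows: list) -> bool:
    """Load rows into a hypothetical payments table, read them back, and compare."""
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE payments (id INTEGER PRIMARY KEY, account TEXT, amount REAL)")
    with conn:
        conn.executemany("INSERT INTO payments VALUES (?, ?, ?)", source_rows)
    stored = conn.execute("SELECT id, account, amount FROM payments").fetchall()
    conn.close()
    return fingerprint(source_rows) == fingerprint(stored)


if __name__ == "__main__":
    rows = [(i, f"ACC{i % 100:03d}", i * 1.25) for i in range(1, 100_001)]
    print("integrity ok:", load_and_verify(rows))
```

A mismatch in the count points to lost or duplicated rows; a matching count with a different digest points to altered values.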

Testing the system beyond normal operational levels allows testers to evaluate its breaking points and improve its robustness before launch.

Key Aspects of Volume Testing

To make volume testing effective, it has to be properly planned and executed. This means establishing the appropriate environment, preparing test data, and monitoring system performance in real time.

Below are the important areas that make a successful volume test:

1. Test environment setup

An effective test environment must mirror the actual production environment as closely as possible. This entails having:

- A substantial dataset reflecting actual usage patterns.

- A scalable infrastructure capable of supporting growing data loads.

- Monitoring utilities, such as Nagios and New Relic, to track performance and detect potential issues.

The results can be misleading if the test environment is set up inadequately or incorrectly. For instance, if the test hardware is less powerful than the production setup, the test may report crashes that would not occur in a live environment.

2. Test data preparation

Having a realistic dataset is critical for valid volume testing. This entails:

- Creating large volumes of database records.

- Sending large API requests to test system responses.

- Simulating user data to test system performance in actual conditions.

Because generating large datasets manually takes time, many teams use automation tools such as Apache JMeter or LoadRunner to create and manage test data efficiently.
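Dedicated tools aside, a scripted generator is often enough to produce reproducible volume data. This sketch assumes a hypothetical order schema, a fixed random seed, a one-day sale window, and an assumed status distribution; it yields records in batches and streams them to CSV so memory use stays bounded regardless of the total row count.

```python
import csv
import os
import random
import tempfile
from datetime import datetime, timedelta
from typing import Dict, Iterator, List

FIELDS = ["order_id", "customer_id", "amount", "status", "created_at"]


def generate_orders(total: int, batch_size: int = 10_000, seed: int = 42) -> Iterator[List[Dict]]:
    """Yield batches of synthetic order records (hypothetical schema)."""
    rng = random.Random(seed)  # fixed seed: the same dataset on every test run
    sale_day = datetime(2024, 11, 29)  # assumed one-day sale window
    statuses = ["paid", "pending", "refunded"]
    weights = [0.90, 0.08, 0.02]  # assumed skew toward completed orders
    for offset in range(0, total, batch_size):
        batch = []
        for order_id in range(offset, min(offset + batch_size, total)):
            batch.append({
                "order_id": order_id,
                "customer_id": rng.randint(1, 500_000),
                # log-normal amounts: many small orders, a long tail of large ones
                "amount": round(rng.lognormvariate(3.5, 0.8), 2),
                "status": rng.choices(statuses, weights)[0],
                "created_at": (sale_day + timedelta(seconds=rng.randint(0, 86_399))).isoformat(),
            })
        yield batch


def write_csv(path: str, total: int) -> int:
    """Stream batches to a CSV file so memory stays bounded; return rows written."""
    written = 0
    with open(path, "w", newline="") as f:
        writer = csv.DictWriter(f, fieldnames=FIELDS)
        writer.writeheader()
        for batch in generate_orders(total):
            writer.writerows(batch)
            written += len(batch)
    return written


if __name__ == "__main__":
    with tempfile.TemporaryDirectory() as tmp:
        path = os.path.join(tmp, "orders.csv")
        print(f"wrote {write_csv(path, 250_000):,} rows to {path}")
```

The fixed seed is the key design choice: when a volume test fails, the exact same dataset can be regenerated to reproduce the failure.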

3. Execution and monitoring

Once the system is loaded with data, it must be monitored for performance issues.

- Define test scenarios based on expected data loads and system behavior.

- Execute volume tests incrementally to observe performance trends.

- Track response times, CPU, memory, disk I/O and network usage, and processing speed.

- Identify bottlenecks and system slowdowns under high data loads.
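The incremental approach in these steps can be sketched as follows. Assumptions: an in-memory SQLite database stands in for the system under test, the step sizes and `records` table are hypothetical, and `tracemalloc` only sees memory allocated by Python objects, not memory used inside SQLite itself, so it is a partial view compared with OS-level monitoring tools. The `find_bottlenecks` helper flags a step when query time grows more than 1.5 times faster than the data volume, a threshold chosen purely for illustration.

```python
import sqlite3
import time
import tracemalloc
from dataclasses import dataclass
from typing import List, Sequence


@dataclass
class StepResult:
    rows: int             # total rows in the table after this step
    load_seconds: float   # time to insert this step's new rows
    query_seconds: float  # time for the representative query at this volume
    peak_mem_mb: float    # peak Python-level allocation during the step


def run_step(conn: sqlite3.Connection, new_rows: int, total_rows: int) -> StepResult:
    tracemalloc.start()  # traces Python allocations only, not SQLite's internal memory
    t0 = time.perf_counter()
    with conn:
        conn.executemany(
            "INSERT INTO records (k, v) VALUES (?, ?)",
            ((i % 10_000, f"value-{i}") for i in range(new_rows)),
        )
    t1 = time.perf_counter()
    conn.execute(
        "SELECT k, COUNT(*) AS n FROM records GROUP BY k ORDER BY n DESC LIMIT 10"
    ).fetchall()
    t2 = time.perf_counter()
    _, peak = tracemalloc.get_traced_memory()
    tracemalloc.stop()
    return StepResult(total_rows, t1 - t0, t2 - t1, peak / 1_000_000)


def find_bottlenecks(results: List[StepResult], tolerance: float = 1.5) -> List[int]:
    """Return row counts at which query time grew > tolerance x faster than the data."""
    flagged = []
    for prev, cur in zip(results, results[1:]):
        data_growth = cur.rows / prev.rows
        time_growth = cur.query_seconds / max(prev.query_seconds, 1e-9)
        if time_growth > data_growth * tolerance:
            flagged.append(cur.rows)
    return flagged


def incremental_volume_test(steps: Sequence[int] = (25_000, 50_000, 100_000, 200_000)) -> List[StepResult]:
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE records (id INTEGER PRIMARY KEY, k INTEGER, v TEXT)")
    results: List[StepResult] = []
    loaded = 0
    for target in steps:  # grow the dataset step by step to observe trends
        result = run_step(conn, target - loaded, target)
        loaded = target
        results.append(result)
        print(f"{result.rows:>8,} rows | load {result.load_seconds:.2f} s | "
              f"query {result.query_seconds * 1000:.1f} ms | "
              f"peak Python mem {result.peak_mem_mb:.1f} MB")
    conn.close()
    return results


if __name__ == "__main__":
    print("possible bottlenecks at:", find_bottlenecks(incremental_volume_test()) or "none")
```

At small volumes, timing noise alone can trigger a flag, so repeat the run before treating a flagged step as a real bottleneck.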

If the system slows down or crashes, engineers analyze logs alongside CPU, memory, disk I/O, network, and storage metrics to pinpoint failures and optimize performance.
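Log analysis can start with something as simple as a script that counts errors and flags slow operations. The log format below (`timestamp LEVEL op=... duration_ms=...`) and the 1,000 ms threshold are assumptions for illustration; adapt the regular expression to whatever your application actually writes.

```python
import re
from collections import Counter
from typing import Iterable, List, Tuple

# Assumed log format, for example:
#   2024-11-29T10:05:12Z INFO op=insert_batch duration_ms=2400
LINE = re.compile(
    r"^(?P<ts>\S+) (?P<level>[A-Z]+) op=(?P<op>\S+)(?: duration_ms=(?P<ms>\d+))?"
)


def analyze(lines: Iterable[str], slow_ms: int = 1000) -> Tuple[Counter, List[Tuple[str, str, int]]]:
    """Count errors per operation and collect operations slower than slow_ms."""
    errors: Counter = Counter()
    slow: List[Tuple[str, str, int]] = []
    for line in lines:
        m = LINE.match(line)
        if m is None:
            continue  # skip lines that do not follow the assumed format
        if m["level"] in ("ERROR", "FATAL"):
            errors[m["op"]] += 1
        if m["ms"] is not None and int(m["ms"]) >= slow_ms:
            slow.append((m["ts"], m["op"], int(m["ms"])))
    return errors, slow


if __name__ == "__main__":
    sample = [
        "2024-11-29T10:00:01Z INFO op=insert_batch duration_ms=120",
        "2024-11-29T10:05:12Z INFO op=insert_batch duration_ms=2400",
        "2024-11-29T10:05:13Z ERROR op=report_query",
        "unstructured line",
    ]
    errors, slow = analyze(sample)
    print(dict(errors))  # {'report_query': 1}
    print(slow)          # [('2024-11-29T10:05:12Z', 'insert_batch', 2400)]
```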

By following these steps, teams can use volume testing to ensure that a system remains stable and efficient, even under heavy data loads.

Volume Testing vs. Load Testing vs. Stress Testing

While volume testing focuses on handling large data volumes, it is often confused with other performance testing types.

Each type serves a distinct purpose, and organizations often use them together to get a comprehensive understanding of system performance.

Here’s how they differ:

| Feature | Load Testing | Stress Testing | Volume Testing |
| --- | --- | --- | --- |
| Scope | Measures system performance under expected user loads. | Tests system behavior under extreme conditions beyond its normal capacity. | Evaluates system performance when handling large amounts of data. |
| Purpose | Ensures the system can handle expected traffic without slowdowns or failures. | Identifies the breaking point of a system and how it recovers after failure. | Checks how well a system processes, stores, and retrieves massive datasets. |
| Who Performs It? | QA testers and performance engineers. | QA teams and system architects. | QA engineers, database administrators, and system analysts. |
| Automation | Often automated using tools like JMeter, LoadRunner, or Gatling. | Uses automated tools but also requires manual monitoring. | It can be automated but often involves database scripts and bulk data generation. |
| Environment | Conducted in a controlled environment that simulates real-world user traffic. | Requires a production-like environment to assess system limits. | Needs a test setup with large-scale data similar to real-world usage. |

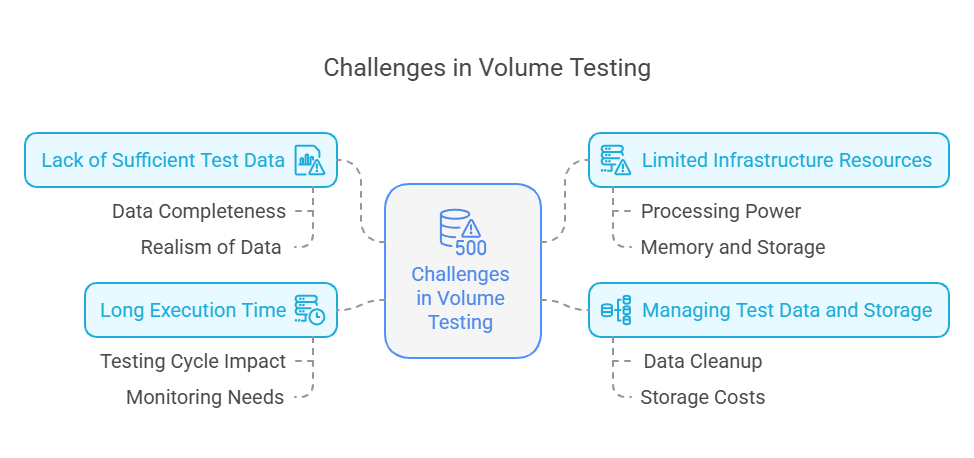

Common Challenges in Volume Testing

Despite its importance, volume testing comes with a few challenges that can affect the accuracy and efficiency of the process.

Insufficient test data

Generating diverse, realistic, and representative data that correctly reflects real-life scenarios is difficult. If test data is incomplete or unrealistic, test results may not provide accurate insights into system behavior.

Inadequate infrastructure resources

Large amounts of data need a robust testing environment with sufficient processing capacity, memory, and storage. If the infrastructure lacks the scalability to handle such loads dynamically, it can result in incorrect test results and spurious performance problems.

Test data and storage management

Volume testing produces a lot of test data, which must be managed properly. If this data is not cleaned up after testing, it may occupy unnecessary space, affect subsequent test cycles, and raise storage costs.
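One way to keep test data manageable is to tag every generated row with a test-run identifier and delete exactly those rows when the run ends. The sketch assumes a hypothetical `customers` table with a `test_run_id` column and uses SQLite; `VACUUM` then reclaims the space freed by the deletion.

```python
import sqlite3
import uuid


def create_schema(conn: sqlite3.Connection) -> None:
    """Hypothetical table; test_run_id is NULL for real (non-test) rows."""
    conn.execute("CREATE TABLE customers (id INTEGER PRIMARY KEY, name TEXT, test_run_id TEXT)")
    conn.execute("CREATE INDEX idx_customers_run ON customers (test_run_id)")


def seed_test_data(conn: sqlite3.Connection, run_id: str, rows: int) -> None:
    with conn:  # tag every generated row with this run's identifier
        conn.executemany(
            "INSERT INTO customers (name, test_run_id) VALUES (?, ?)",
            ((f"volume-test-user-{i}", run_id) for i in range(rows)),
        )


def cleanup_test_data(conn: sqlite3.Connection, run_id: str) -> int:
    with conn:  # delete only the rows this run created
        cur = conn.execute("DELETE FROM customers WHERE test_run_id = ?", (run_id,))
    return cur.rowcount


if __name__ == "__main__":
    conn = sqlite3.connect(":memory:")
    create_schema(conn)
    run_id = f"volume-{uuid.uuid4().hex[:8]}"
    try:
        seed_test_data(conn, run_id, 100_000)
        # ... run the volume test against the seeded data here ...
    finally:
        removed = cleanup_test_data(conn, run_id)
        conn.execute("VACUUM")  # reclaim freed space (matters most for file-based databases)
        print(f"removed {removed:,} rows for run {run_id}")
        conn.close()
```

Putting the cleanup in `finally` means the data is removed even when the test itself fails partway through.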

Long execution time

Large datasets take time to process, so volume tests can take a long time to execute. This can prolong the testing cycle, affect release timelines, and require constant monitoring to catch problems during the run.

By addressing these issues early, teams can improve the reliability of their volume testing and make their systems robust enough for real-world demands.

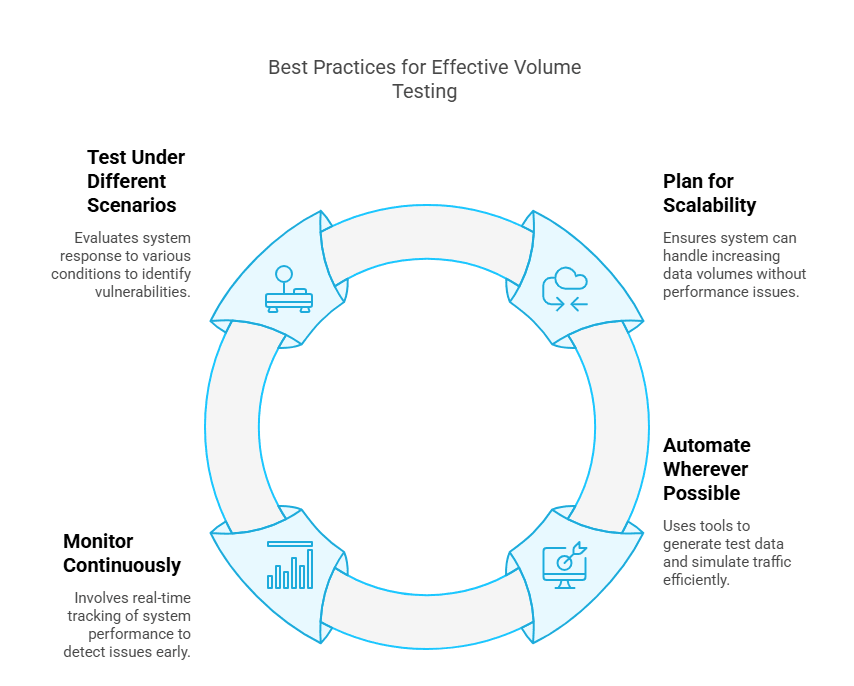

Best Practices for Effective Volume Testing

To make volume testing more effective, it is essential to follow best practices that ensure accurate results and system stability.

Plan for scalability

A system should be designed to handle increasing amounts of data without compromising performance. Planning for scalability means ensuring the infrastructure can scale automatically when required.

Cloud solutions are particularly good at this, as they can scale automatically when there is increased demand, preventing slowdowns and crashes.

Automate wherever possible

Manual testing and data creation can take hours and introduce mistakes. Tools like Mockaroo, DataFactory, DBMonster (open source), or HammerDB help generate large test datasets quickly, and some of them, such as HammerDB, can also simulate heavy database loads and measure how the system responds.

Tools like Apache JMeter and LoadRunner can easily populate databases and simulate API requests, enabling comprehensive testing coverage.

Monitor continuously

Real-time monitoring is vital for detecting performance bottlenecks. Among the various metrics that need to be monitored, response time, server load, memory usage, and database integrity are of major importance.

Monitoring these metrics continuously gives testers early warning when something goes wrong, so they can eliminate bottlenecks and fine-tune performance before deployment.
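Continuous monitoring often means tracking a high percentile of response time over a rolling window rather than a single average. This sketch assumes latencies in milliseconds fed in by your test harness, a window of recent samples, and an illustrative 95th-percentile threshold; it uses `statistics.quantiles` from the Python standard library (3.8+).

```python
import statistics
from collections import deque
from typing import Deque, Optional


class LatencyMonitor:
    """Track the most recent response times and alert when the rolling p95 is too high."""

    def __init__(self, window: int = 200, p95_threshold_ms: float = 500.0, min_samples: int = 20):
        self.samples: Deque[float] = deque(maxlen=window)  # oldest samples drop off
        self.p95_threshold_ms = p95_threshold_ms
        self.min_samples = min_samples

    def record(self, latency_ms: float) -> Optional[str]:
        """Add one sample; return an alert message if the rolling p95 exceeds the threshold."""
        self.samples.append(latency_ms)
        if len(self.samples) < self.min_samples:
            return None  # too few samples for a meaningful percentile
        # quantiles(n=20) returns 19 cut points; the last one is the 95th percentile
        p95 = statistics.quantiles(self.samples, n=20)[-1]
        if p95 > self.p95_threshold_ms:
            return f"ALERT: rolling p95 {p95:.0f} ms exceeds {self.p95_threshold_ms:.0f} ms"
        return None


if __name__ == "__main__":
    monitor = LatencyMonitor(window=100, p95_threshold_ms=300.0)
    for i in range(400):
        latency = 100.0 + i  # simulated latency creeping upward as data volume grows
        alert = monitor.record(latency)
        if alert:
            print(f"sample {i}: {alert}")
            break
```

A percentile catches the slow tail that an average hides, which is often where volume-related degradation first appears.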

Test under different scenarios

Testing software under normal conditions is not enough; systems must be tested under various conditions. For example, testers need to check how the system behaves during sudden usage spikes, network delays, or database failures. This ensures that vulnerabilities are caught before they affect users.

By embracing these best practices, teams can improve the reliability of systems and achieve seamless functionality even when handling massive volumes of data.

Real-World Applications of Volume Testing

Volume testing is essential across various industries where high data loads can impact performance, reliability, and user experience. Below are some key sectors that rely on volume testing to ensure seamless operations:

E-commerce sites

Online stores see massive bursts of data during promotional events. Volume testing verifies that databases, APIs, and servers can handle such bursts without lagging or crashing. Without it, shoppers may face slow checkouts, failed payments, or site crashes, leading to lost business and frustration.

Banking and finance applications

Banks process millions of transactions daily. Volume testing helps prevent errors such as incorrect or delayed transactions and system crashes, ensuring smooth financial processing. It also supports regulatory compliance by helping keep transaction processing accurate and uninterrupted.

Telecommunication networks

Telecom operators handle large volumes of voice calls, messages, and data traffic, especially during peak hours or special occasions. Volume testing helps ensure networks remain stable, without dropped calls, slow internet connections, or outages. It also helps operators optimize bandwidth usage, plan infrastructure capacity, and deliver smooth connectivity to consumers.

Social media platforms

Platforms like Facebook, Instagram, and X process billions of updates, images, and videos daily. Volume testing keeps operations smooth by simulating high data loads, identifying bottlenecks, optimizing resources, and preventing slowdowns or crashes. Without it, users may experience delays, failed uploads, or outages, hurting engagement and brand image.

Through volume testing in these industries, businesses can proactively identify system weaknesses, enhance scalability, and deliver uninterrupted services even at high data loads.

How Testlio Can Help You in Volume Testing

Volume testing is just as much about dealing with huge amounts of data as it is about making the system scale, perform, and be reliable. By pre-testing data volumes, companies can avoid surprise failures, improve the user experience, and build confidence in the rock-solid quality of their software.

Need help with your volume testing plan? Testlio is here to assist!

With Testlio, you can:

- Take advantage of automation and real-time monitoring to identify and fix faults before they reach end users.

- Connect with seasoned QA experts to successfully conduct volume testing and identify performance bottlenecks.

- Optimize performance so your system processes high volumes of data without sacrificing speed, reliability, or functionality.

- Get expert assistance to make your system scalable and robust enough to survive peak loads without crashing.

For reliable performance, minimal downtime, and satisfied users, contact Testlio today!